AWS Bedrock vs PremAI: Which Generative AI Platform Fits Your Enterprise?

Bedrock vs PremAI for enterprise generative AI. We compare foundation model access, fine-tuning, deployment, costs (including against OpenAI), and data sovereignty so you can pick the right platform.

Most enterprise teams picking a generative AI platform start with the same Google search: "AWS Bedrock vs [something]." And most of the results compare Bedrock with other cloud offerings like Azure or Google Vertex AI.

That comparison misses the point.

The real decision for a growing number of organizations isn't "which cloud API should I use." It's whether you should use a managed cloud API at all, or own the entire AI stack yourself.

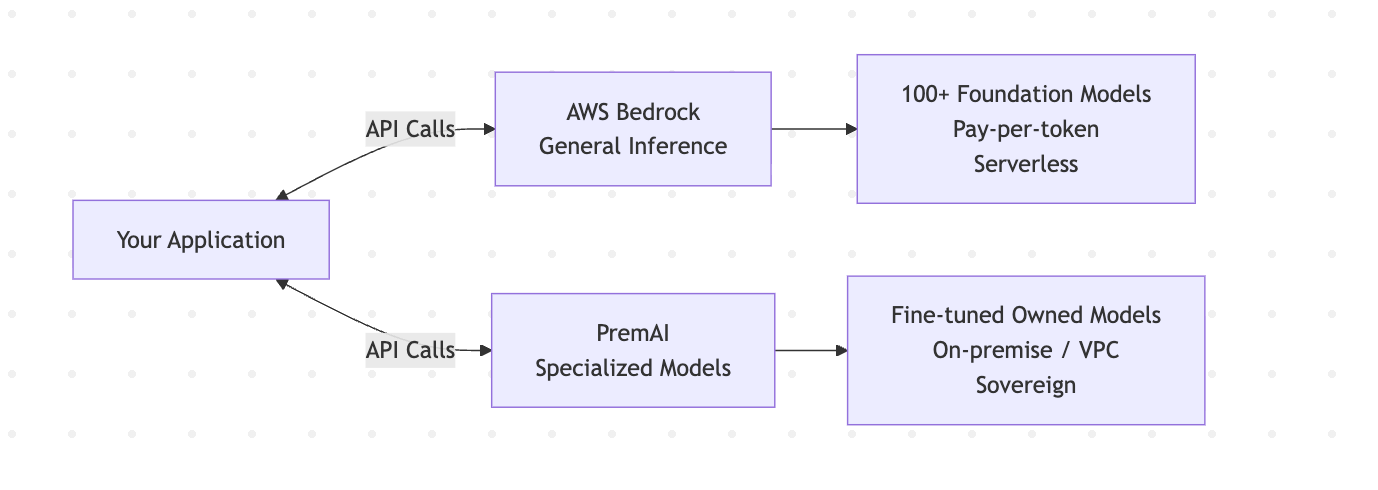

Amazon Bedrock and PremAI represent these two different approaches. Bedrock gives you a fully managed API layer with access to 100+ foundation models inside AWS. PremAI gives you a sovereign AI platform where you own the models, control the data, and deploy on your own infrastructure.

This guide breaks down the actual differences: cost, fine-tuning, data sovereignty, deployment, and the specific use cases where each platform makes more sense. No marketing fluff, just the information you need to make a good call.

What Amazon Bedrock Actually Does (And Where It Stops)

Amazon Bedrock is a fully managed serverless service that gives you API access to foundation models from multiple providers. It's not a model. It's not a training platform. It's an access layer.

Through a single API, you get models from Anthropic (Claude family), Meta (Llama 3), Mistral, Cohere, Stability AI, and Amazon's own Titan models. You don't manage any infrastructure. AWS handles the compute, scaling, and availability behind the scenes.

That simplicity is the product.
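To make "single API" concrete, here is a minimal sketch using boto3's `bedrock-runtime` Converse API, assuming boto3 is installed and AWS credentials and a region are configured when the request is actually sent. The model IDs are examples only; which models and ID versions you can call depends on your account and region, so treat them as placeholders to check against the Bedrock console.

```python
# Minimal sketch: one request shape for several Bedrock models (Converse API).
# Assumes boto3 plus configured AWS credentials/region when send_request() runs.

# Example model IDs from three providers; availability varies by account and region.
MODEL_IDS = {
    "claude": "anthropic.claude-3-5-sonnet-20240620-v1:0",
    "llama": "meta.llama3-8b-instruct-v1:0",
    "mistral": "mistral.mistral-7b-instruct-v0:2",
}


def build_converse_request(model_id: str, prompt: str, max_tokens: int = 512) -> dict:
    """Build keyword arguments for the bedrock-runtime converse() call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def send_request(request: dict) -> str:
    """Send the request and return the text of the model's reply."""
    import boto3  # imported lazily so build_converse_request works without boto3

    client = boto3.client("bedrock-runtime")
    response = client.converse(**request)
    return response["output"]["message"]["content"][0]["text"]


if __name__ == "__main__":
    # Only modelId changes between providers; the payload shape does not.
    for name, model_id in MODEL_IDS.items():
        request = build_converse_request(model_id, "Summarize our refund policy in one sentence.")
        print(name, request["modelId"])
```

Swapping providers means changing `modelId`; the message structure stays the same, which is the convenience this section describes.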

What Bedrock includes:

- Foundation model access: 100+ models via a unified API, including text, image, and embedding models

- Knowledge Bases: Managed RAG (retrieval-augmented generation) with automatic vector storage, chunking, and retrieval

- Guardrails: Content filters to block harmful content, PII detection, and topic-level controls

- Agents: Build AI agents on AWS that can call APIs, query databases, and execute multi-step workflows through AgentCore

- Bedrock Studio: A visual workspace for teams to experiment with models, prompts, and configurations before going to production

- Model evaluation: Compare model outputs side-by-side with Bedrock evaluation tools

- Cross-region inference: Route requests across multiple AWS regions for redundancy

Bedrock plugs directly into the broader AWS services stack. IAM for access control. CloudWatch for monitoring. S3 for storage. Lambda for serverless functions. SageMaker for the full ML lifecycle when you need it.

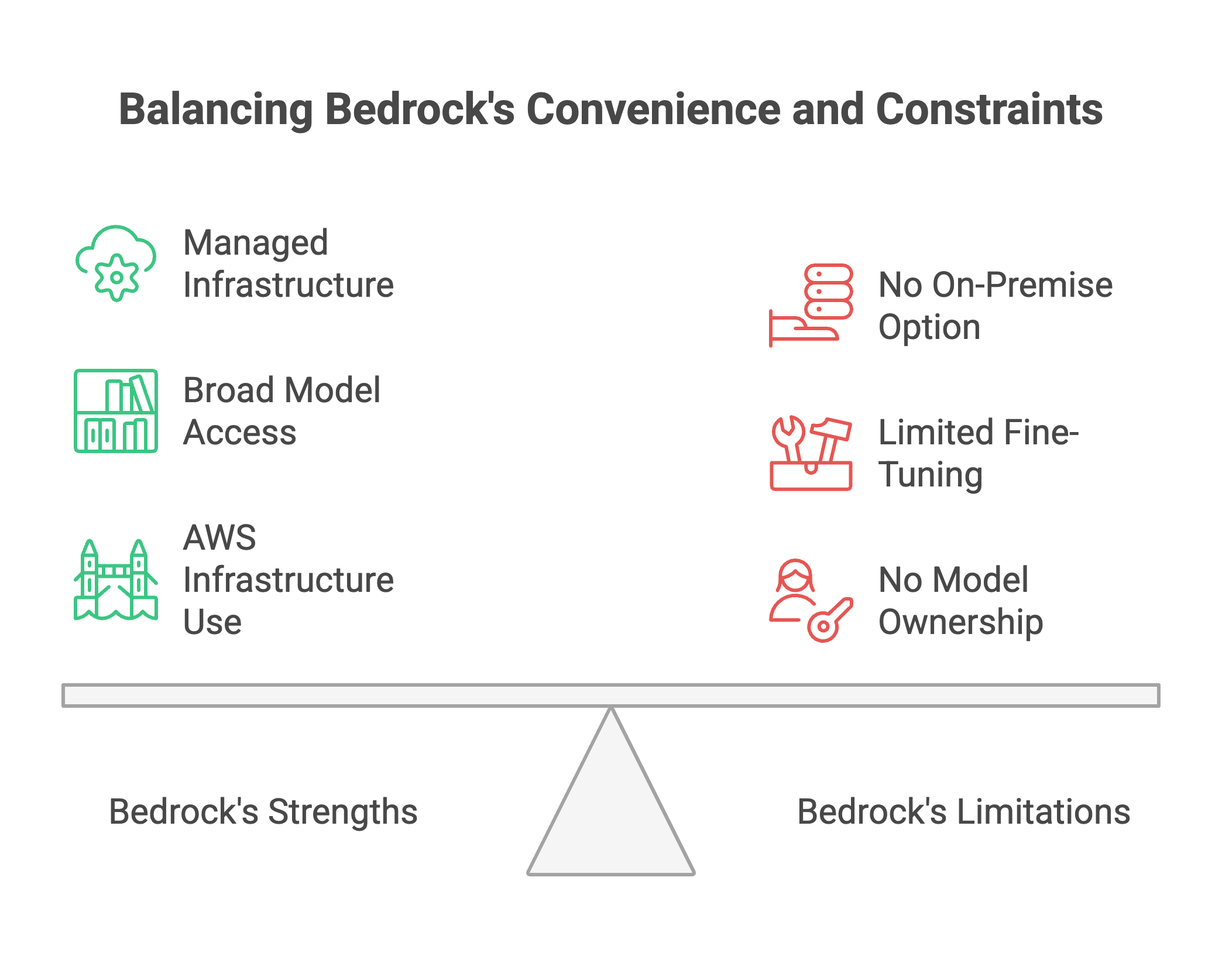

Where Bedrock stops:

There's no general on-premise deployment option. AWS has started moving in that direction, with caveats we'll cover later.

You can't download model weights. Custom fine-tuned models only run inside Bedrock with provisioned throughput. And the model selection for fine-tuning is narrower than what's available for inference. You get to use Amazon's AI infrastructure, but you don't own any of it.

For teams that are already deep in AWS and want fast access to generative AI capabilities, Bedrock makes that straightforward. The question is whether "fast access" is enough for what you're building.

What PremAI Actually Does (And Where It Stops)

PremAI is a sovereign AI platform.

The core idea: you own everything. The models, the data, the deployment environment.

Where Bedrock gives you an API to call someone else's models on someone else's infrastructure, PremAI gives you tools to build production-ready AI models that run on your terms. The flagship product is Prem Studio, which handles the full workflow from raw data to deployed custom model.

What PremAI includes:

- Datasets module: Drag-and-drop data upload (JSONL, PDF, TXT, DOCX) with automatic PII redaction and synthetic data augmentation

- Autonomous fine-tuning: 30+ base models including Llama, Mistral, Qwen, Gemma. The system handles hyperparameter optimization, experiment tracking, and runs up to 6 concurrent experiments

- Knowledge distillation: Create smaller, faster specialized models from larger teacher models. 10x smaller, 10x faster inference

- Evaluations: LLM-as-a-judge scoring, side-by-side model comparisons, and custom evaluation rubrics

- Deployment options: On-premise, AWS VPC, hybrid cloud, or via AWS Marketplace

- Swiss jurisdiction: Operates under the Federal Act on Data Protection (FADP). SOC 2, GDPR, HIPAA compliant. Cryptographic verification for every interaction
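PremAI doesn't document how its redaction works internally, so the following is only a minimal illustration of the idea: scrubbing PII from a JSONL training record before it reaches fine-tuning. The two regular expressions are assumptions chosen for illustration; production systems typically add named-entity recognition and locale-specific formats.

```python
import json
import re

# Illustrative patterns only. Order matters: emails are replaced before phone numbers.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace each PII match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def redact_jsonl_line(line: str, fields=("prompt", "completion")) -> str:
    """Redact the text fields of one JSONL training record and re-serialize it."""
    record = json.loads(line)
    for field in fields:
        if field in record:
            record[field] = redact(record[field])
    return json.dumps(record)
```

Typed placeholders such as `[EMAIL]` keep the sentence structure intact, so the model still learns that an address belongs in that position without ever seeing a real one.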

Where PremAI stops:

It's not a serverless model marketplace. You won't get instant access to 100+ foundation models through a single API call. PremAI doesn't replace the convenience of Bedrock for quick prototyping or teams that just need inference on a standard model.

The platform is built for organizations that need specialized, owned AI capabilities, not general-purpose model access.

The Prem API is expanding, but it's not trying to be a Bedrock clone with more models. It's solving a different problem.

The Real Cost Comparison: Amazon Bedrock vs PremAI vs OpenAI

Cost is where most comparisons fall apart. The sticker prices look straightforward. The actual bills don't.

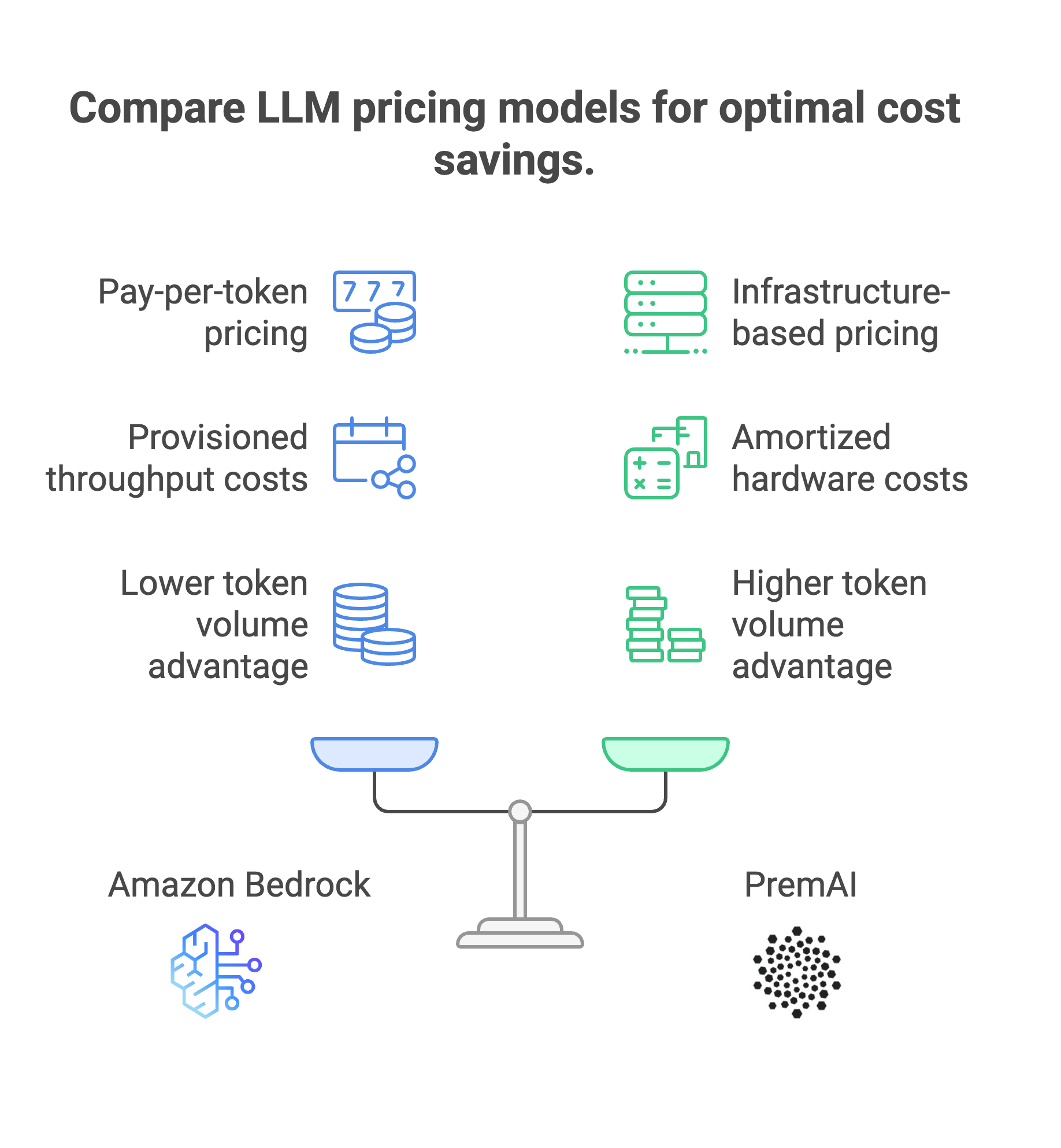

Bedrock's pricing model:

Bedrock uses pay-per-token pricing for on-demand inference.

Quick example with Anthropic Claude Sonnet: $3 per million input tokens, $15 per million output tokens. Output tokens cost 5x more than input, which catches teams off guard when their applications are generating long responses.
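The arithmetic behind that warning is easy to sketch. This helper uses the Claude Sonnet prices quoted above as defaults; prices change, so treat them as parameters rather than fixed facts.

```python
def monthly_token_cost(input_tokens: int, output_tokens: int,
                       input_price_per_m: float = 3.0,
                       output_price_per_m: float = 15.0) -> float:
    """On-demand cost in USD, with prices quoted per million tokens."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_m
    output_cost = (output_tokens / 1_000_000) * output_price_per_m
    return input_cost + output_cost


# 20M input + 5M output tokens: output is 20% of the volume but $75 of the $135 bill.
print(monthly_token_cost(20_000_000, 5_000_000))
```

If responses grow until output matches input (20M tokens each), the same helper gives $360, nearly triple the bill for the same prompts.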

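But the token price isn't your total cost. Most teams miss the surrounding AWS services a production Bedrock deployment pulls in: CloudWatch for monitoring and logs, typically OpenSearch Serverless as the vector store behind Knowledge Bases, and provisioned throughput for custom models.

That last item is the one that stings.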

If you fine-tune a model on Bedrock, you can't run inference on it using on-demand pricing. You need provisioned throughput. That's a fixed monthly commitment regardless of how much you actually use the model.
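A fixed commitment turns into an effective per-token price that depends entirely on utilization. The sketch below uses the roughly $15K/month figure this article cites for provisioned throughput; actual provisioned throughput is billed per model unit per hour and varies by model and commitment term.

```python
def effective_price_per_m(fixed_monthly_usd: float, tokens_used: int) -> float:
    """Effective USD per million tokens when paying a fixed monthly commitment."""
    if tokens_used <= 0:
        raise ValueError("tokens_used must be positive")
    return fixed_monthly_usd / (tokens_used / 1_000_000)


# Same commitment, very different effective rates depending on traffic.
print(effective_price_per_m(15_000, 10_000_000))     # low-traffic custom model
print(effective_price_per_m(15_000, 1_000_000_000))  # heavily used custom model
```

At 10M tokens a month the commitment works out to $1,500 per million tokens; at 1B tokens it falls to $15. Low-traffic custom models are the expensive case.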

Comparing costs with OpenAI:

Many teams evaluating Bedrock are migrating from OpenAI. The OpenAI to Bedrock move often promises savings through multi-model routing (using cheaper models for simple tasks, expensive ones for complex tasks).

But when you factor in AWS service overhead, the savings can be thinner than expected. Teams processing under 50M tokens/month sometimes find the costs roughly equivalent.
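Multi-model routing is simple to sketch, and so is its limit: it lowers the blended token price, not the surrounding AWS overhead. The word-count heuristic and the prices below are hypothetical; real routers often use a classifier or a small model to judge task complexity.

```python
def route(prompt: str, max_simple_words: int = 50) -> str:
    """Toy heuristic: short prompts go to the cheap tier, long ones to the premium tier."""
    return "cheap" if len(prompt.split()) <= max_simple_words else "premium"


def blended_price_per_m(share_simple: float, cheap_price: float, premium_price: float) -> float:
    """Average USD per million tokens when `share_simple` of traffic uses the cheap model."""
    if not 0.0 <= share_simple <= 1.0:
        raise ValueError("share_simple must be between 0 and 1")
    return share_simple * cheap_price + (1.0 - share_simple) * premium_price
```

Sending 70% of traffic to a hypothetical $0.50 model and 30% to a $10 model gives a blended price of about $3.35 per million tokens. Fixed service costs stay the same, which is why the savings shrink at low volume.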

PremAI's pricing model:

PremAI uses infrastructure-based pricing, not per-token fees. You pay for compute resources, not individual API calls. The cost difference at scale is dramatic.

Cost comparison at different token volumes:

Estimates based on mixed model usage (mid-tier models). Bedrock estimates include core service overhead. PremAI estimates assume amortized hardware costs over 24 months. Actual costs vary by model selection and use case.

The crossover point: organizations processing 500M+ tokens per month typically see 50-70% cost reduction with PremAI's on-premise deployment. Breakeven on hardware investment happens around 12-18 months for most enterprise workloads.
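The breakeven claim is simple division once you pick inputs. Every number in this sketch is hypothetical, not data from the estimates above; plug in your own hardware quote, current API spend, and self-hosting operating costs (power, hosting, staff time).

```python
import math


def amortized_monthly_cost(hardware_usd: float, opex_monthly_usd: float, months: int = 24) -> float:
    """Self-hosted monthly cost with hardware spread evenly over `months`."""
    return hardware_usd / months + opex_monthly_usd


def breakeven_months(hardware_usd: float, api_monthly_usd: float, opex_monthly_usd: float) -> float:
    """Months until avoided API spend repays the hardware purchase."""
    monthly_saving = api_monthly_usd - opex_monthly_usd
    if monthly_saving <= 0:
        return math.inf  # self-hosting never pays back at these numbers
    return hardware_usd / monthly_saving


# Hypothetical: $300k of hardware replacing $30k/month of API spend, adding $10k/month opex.
print(breakeven_months(300_000, 30_000, 10_000))
```

With those inputs the hardware pays back in 15 months. The 24-month default in `amortized_monthly_cost` mirrors the amortization assumption stated for the estimates.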

For lower volumes, Bedrock's pay-per-use model avoids upfront investment. That's a real advantage for teams still experimenting or running variable workloads.

Fine-Tuning and Model Customization: Where the Difference Gets Real

If you only need inference (send prompt, get response), both platforms work. The gap shows up when you need custom models trained on your data.

1. Bedrock fine-tuning:

Bedrock supports fine-tuning on a subset of its available models.

Amazon Titan, Meta Llama, and Cohere Command are the primary options. You upload training data, configure basic parameters, and Bedrock handles the rest.

The limitations show up fast:

- Limited hyperparameter control compared to running your own training

- Fine-tuned custom models require provisioned throughput (~$15K/month) for inference

- No access to model weights. Your custom model lives inside Bedrock, period

- Reinforcement fine-tuning (RFT) launched in late 2025, with AWS citing 66% average accuracy gains over base models, but it currently supports only Amazon Nova 2 Lite

Bedrock also offers model distillation within its ecosystem, but the target models are limited to what's available on the platform.
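Neither platform's distillation internals are described here, but the classic soft-target objective shows what "distilling" means: the student is trained to match the teacher's temperature-softened output distribution. This pure-Python version computes that loss for a single prediction; real training averages it over many tokens, usually alongside a standard cross-entropy term.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> list[float]:
    """Numerically stable softmax over logits divided by `temperature`."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: Sequence[float], student_logits: Sequence[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return (temperature ** 2) * kl
```

A higher temperature flattens both distributions, so the student also learns the teacher's relative ranking of the wrong answers; the T² factor keeps gradient magnitudes comparable across temperatures.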

2. PremAI fine-tuning:

PremAI's autonomous fine-tuning system takes a different approach.

The platform handles the ML complexity so you don't need a dedicated ML team.

The workflow: Collect → Clean → Augment with synthetic data → Fine-tune → Evaluate → Deploy

Specific capabilities:

- 30+ base language models to choose from (Llama, Mistral, Qwen, Gemma, DeepSeek)

- Autonomous hyperparameter optimization across up to 6 concurrent experiments

- Built-in PII redaction before data touches any model

- Knowledge distillation to create specialized reasoning models (SRMs) that are 10x smaller

- LLM-as-a-judge evaluation with custom rubrics

- Download model checkpoints and deploy anywhere: vLLM, Ollama, on-premise, cloud
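PremAI's optimizer isn't public, so this is a generic stand-in: random search over a small hyperparameter grid, with at most six experiments in flight at once to mirror the concurrency limit mentioned above. `run_experiment` is a hypothetical toy objective; in practice it would launch a fine-tuning job and return a validation score.

```python
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical search space for a LoRA-style fine-tune.
SEARCH_SPACE = {
    "learning_rate": [1e-5, 3e-5, 1e-4, 3e-4],
    "lora_rank": [8, 16, 32],
    "epochs": [1, 2, 3],
}


def sample_config(rng: random.Random) -> dict:
    """Pick one value per hyperparameter uniformly at random."""
    return {name: rng.choice(values) for name, values in SEARCH_SPACE.items()}


def run_experiment(config: dict) -> float:
    """Toy stand-in for a training run; higher is better, peak at lr=1e-4, rank=16, 2 epochs."""
    return -(abs(config["learning_rate"] - 1e-4) * 1e4
             + abs(config["lora_rank"] - 16) / 16
             + abs(config["epochs"] - 2))


def random_search(n_trials: int = 12, max_concurrent: int = 6, seed: int = 0) -> tuple[dict, float]:
    """Run n_trials sampled configs, at most max_concurrent at a time; return the best."""
    rng = random.Random(seed)
    configs = [sample_config(rng) for _ in range(n_trials)]
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        scores = list(pool.map(run_experiment, configs))
    best_score, best_config = max(zip(scores, configs), key=lambda pair: pair[0])
    return best_config, best_score
```

Random search is a common baseline; more sophisticated optimizers (Bayesian methods, for example) use earlier results to choose the next configurations.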

That last point matters more than it seems. With Bedrock, your custom model is locked to AWS. With PremAI, you get portable model weights. Deploy them on your own servers. Move them between cloud providers. Run them on edge devices if needed.

Fine-tuning comparison:

For RAG use cases, Bedrock Knowledge Bases handle retrieval augmented generation natively with managed vector storage. PremAI pairs fine-tuned models with your own RAG stack, using tools like LlamaIndex or custom retrieval pipelines for tighter control over how context gets injected.
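A custom retrieval pipeline mostly comes down to two steps: rank documents against the query, then decide exactly how the winners are injected into the prompt. This sketch uses bag-of-words cosine similarity as a self-contained stand-in for an embedding model and vector store; it does not reflect LlamaIndex's API or Bedrock Knowledge Bases internals.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Inject the retrieved context into a fixed prompt template."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

Owning `build_prompt` is the "tighter control" the paragraph refers to: you choose the ordering, formatting, and instructions wrapped around the retrieved context.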

The choice depends on what you're building. Quick LoRA fine-tune on Titan for a chatbot? Bedrock handles that. Building a production-grade specialized reasoning model for regulatory compliance processing? That's PremAI territory.

Data Sovereignty: "Private" Means Different Things

Both platforms claim strong security. Both are technically correct. But they mean very different things by "private."

Amazon Bedrock's privacy model:

Bedrock keeps your data within your chosen AWS region. Your prompts and completions are encrypted in transit and at rest. AWS does not use your data to train or improve base models. Model providers (Anthropic, Meta, etc.) don't see your data either.

You get VPC isolation, IAM-based access control, and AWS PrivateLink to keep traffic off the public internet. Compliance coverage includes SOC, ISO 27001, and FedRAMP High certifications, plus HIPAA eligibility and GDPR support.

This is a strong security setup. For many organizations, it's sufficient.

Where the gap appears:

Your data still processes on shared AWS infrastructure, even if it's logically isolated. You trust AWS to enforce the boundaries. There are no cryptographic proofs that your data wasn't accessed, copied, or retained somewhere in the pipeline.

For a SaaS startup? Probably fine. For a European bank processing customer financial data under strict regulatory oversight? That "trust us" model gets harder to sell to compliance teams.

AWS recognized this gap. At re:Invent 2025, they announced AWS AI Factories, which bring Bedrock capabilities to on-premise hardware. But early reports suggest multi-year commitments and significant minimum spend. It's a step toward sovereignty, not a complete answer yet.

PremAI's privacy model:

PremAI operates on a "don't trust, verify" architecture. The platform provides cryptographic proofs for every interaction: hardware-signed attestations that your data was processed as promised.

On-premise deployment means data never leaves your infrastructure. Not temporarily, not for processing, not for logging. Zero data retention is verified, not just promised.

Operating under Swiss jurisdiction (FADP) adds another layer. Swiss data protection law is among the strictest globally, and PremAI maintains SOC 2, GDPR, and HIPAA compliance on top of that.

The platform also redacts personally identifiable information (PII) natively in the data pipeline, before your data ever reaches a model for fine-tuning.

Sovereignty comparison:

For organizations in finance, healthcare, government, or any sector with strict data residency requirements, a private AI platform that processes data on-premise with cryptographic proof is a fundamentally different security posture from a cloud API with strong access controls.

Deployment and AI Infrastructure: Serverless vs. Sovereign

The deployment experience shapes how your team interacts with AI daily.

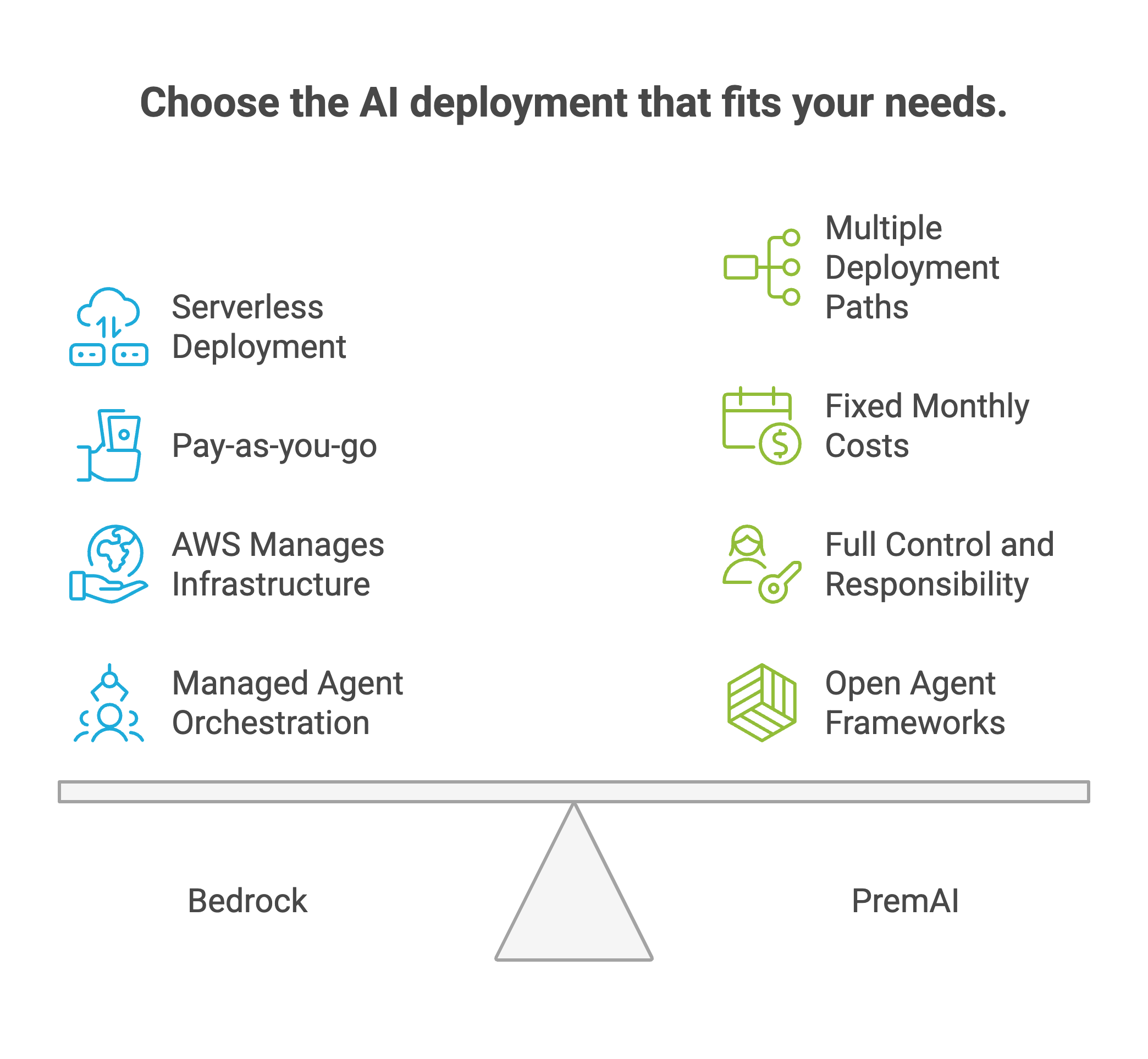

1. Bedrock deployment

Bedrock is serverless. No instances to provision. No GPUs to manage. You call an API, you get a response. AWS handles scaling, availability, and cross-region failover automatically.

This matters for variable workloads. If your generative AI usage spikes 10x on Monday and drops to near-zero on weekends, you only pay for what you use. No idle hardware.

But serverless comes with constraints. New AWS accounts start with rate limits as low as 2 requests per minute for some models. Quota increases require support tickets and can take days. Provisioned throughput solves the rate limit problem but adds fixed monthly costs.
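Until a quota increase lands, clients have to cope with throttling. A common pattern is capped exponential backoff with jitter. This sketch assumes throttled calls surface as botocore `ClientError`s carrying the `ThrottlingException` error code, and reads that code from the exception's `response` dict so it runs without boto3 installed.

```python
import random
import time


def is_throttling(exc: Exception) -> bool:
    """True for botocore-style errors whose error code is ThrottlingException."""
    response = getattr(exc, "response", None) or {}
    return response.get("Error", {}).get("Code") == "ThrottlingException"


def call_with_backoff(fn, max_attempts: int = 5, base_delay: float = 0.5,
                      max_delay: float = 8.0, sleep=time.sleep):
    """Retry fn() on throttling errors with capped exponential backoff and full jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception as exc:
            # Re-raise non-throttling errors immediately, and throttling on the last attempt.
            if not is_throttling(exc) or attempt == max_attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
```

boto3 can also retry for you: passing `botocore.config.Config(retries={"max_attempts": 10, "mode": "adaptive"})` when creating the client enables its built-in retry handling. Neither approach raises your quota; both only smooth over bursts.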

For generative AI deployments across multiple AWS regions, Bedrock's cross-region inference is genuinely useful. It automatically selects a region within your chosen geography to serve each request, which helps absorb traffic bursts and improves reliability.

2. PremAI deployment

PremAI offers four deployment paths:

- On-premise: Deploy on your own hardware. Full control. Full responsibility.

- AWS VPC: Run inside your own AWS account. Everything stays within your AWS infrastructure, but you manage the compute.

- Hybrid: Critical workloads on-premise, less sensitive workloads in cloud.

- AWS Marketplace: SaaS deployment through AWS with simplified billing.

For self-hosted deployment, PremAI supports serving fine-tuned models through vLLM or Ollama. You can locally serve OpenAI-compatible endpoints from your Prem fine-tuned models, which means existing applications that use the OpenAI SDK can switch between different model backends with minimal code changes.
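Here is what "minimal code changes" looks like at the HTTP level. Both vLLM's OpenAI-compatible server and Ollama expose a `/v1/chat/completions` route, so the request body stays the same and only the base URL and model name change. The ports are the two servers' usual defaults, and the model name `my-finetuned-model` is hypothetical.

```python
import json
import urllib.request

# Usual local defaults for two OpenAI-compatible servers.
BACKENDS = {
    "vllm": "http://localhost:8000/v1",
    "ollama": "http://localhost:11434/v1",
}


def chat_request(base_url: str, model: str, prompt: str,
                 api_key: str = "not-needed") -> urllib.request.Request:
    """Build a POST to /chat/completions; only base_url and model differ between backends."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request to a running server and return the reply text."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With the official OpenAI Python SDK the same switch is a constructor argument: `OpenAI(base_url="http://localhost:8000/v1", api_key="...")`.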

The workflow difference:

Bedrock workflow: Select model → Call API → Get response → (Optional: Fine-tune → Provision throughput → Call API)

PremAI workflow: Upload data → Auto-clean + PII redact → Fine-tune → Evaluate → Deploy anywhere → Own the model

Bedrock optimizes for speed-to-first-response. PremAI optimizes for long-term AI infrastructure ownership. Different goals, different architectures.

For AI agents on AWS, Bedrock's AgentCore provides managed orchestration with tool calling, memory, and multi-step execution.

PremAI takes a more open approach. You can build agents using any framework (LangGraph, CrewAI, custom) and pair them with your fine-tuned models. More flexibility, more setup.
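Whatever framework you choose, the core of a tool-calling agent is small: the model returns a tool call, your code runs it, and the result goes back to the model. This sketch assumes the model is served behind an OpenAI-compatible API that returns tool calls in the Chat Completions format (a function name plus JSON-encoded arguments); `lookup_order` is a hypothetical tool.

```python
import json
from typing import Callable

# Registry mapping tool names to Python callables.
TOOLS: dict[str, Callable[..., object]] = {}


def tool(fn):
    """Register a function as a callable tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def lookup_order(order_id: str) -> dict:
    """Hypothetical tool; a real agent would query your order system."""
    return {"order_id": order_id, "status": "shipped"}


def dispatch(tool_call: dict) -> str:
    """Execute one OpenAI-style tool call and return a JSON string for the tool message."""
    spec = tool_call["function"]
    fn = TOOLS.get(spec["name"])
    if fn is None:
        return json.dumps({"error": f"unknown tool {spec['name']}"})
    args = json.loads(spec.get("arguments") or "{}")
    return json.dumps(fn(**args))
```

Frameworks such as LangGraph or CrewAI wrap this dispatch step with state, memory, and multi-step control flow.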

When Amazon Bedrock Is the Right Call

Bedrock makes sense when:

- You're already deep in AWS. Lambda, S3, SageMaker, Redshift, the whole stack. Bedrock plugs in natively. IAM policies, CloudWatch monitoring, and VPC networking work out of the box.

- You need a wide range of foundation models. Testing Anthropic Claude against Llama 3 against Mistral against Amazon Titan for different applications and use cases? Bedrock lets you switch between different models with a parameter change. No infrastructure changes.

- Your workload is inference-heavy, not fine-tuning-heavy. If you mostly need to send prompts and get responses across multiple use cases (customer experience chatbots, document summarization, code generation), Bedrock's serverless model is efficient.

- VPC isolation satisfies your compliance needs. Not every organization needs on-premise. If your security team is comfortable with AWS region-level isolation and encrypted processing, Bedrock's compliance certifications cover most requirements.

- You used OpenAI and want to diversify. Migrating from OpenAI to Bedrock gives you access to multiple providers through one integration, reducing vendor dependency and letting you select the best model for each specific use case.

- Speed matters more than ownership. You can go from zero to a working generative AI application in hours, not weeks. For prototyping and early-stage AI development, that velocity is hard to beat.

When PremAI Is the Right Call

PremAI makes sense when:

- Data cannot leave your infrastructure. If you're in a regulated industry (finance, healthcare, government, EU enterprises), and "cloud isolation" isn't enough for your compliance team, on-premise sovereignty is the only answer. PremAI provides that with cryptographic verification.

- You need specialized custom models. Generic foundation models are good at general tasks. They're mediocre at domain-specific work like regulatory compliance processing, medical document analysis, or financial fraud detection. PremAI's fine-tuning pipeline builds models that are purpose-built for your use case.

- Token volume makes per-token pricing painful. At 500M+ tokens/month, the math shifts hard. Per-token APIs bleed money at scale. PremAI's infrastructure-based pricing flattens that curve.

- You want to own your AI models. Downloadable checkpoints mean you're never locked to a single vendor. Deploy on vLLM today, switch to a different serving framework tomorrow. Model portability is a strategic advantage.

- You're building a competitive moat. If AI is core to your product (not just a feature), owning specialized reasoning models that competitors can't replicate matters. You can't build a moat on someone else's API.

- You want to accelerate without ML expertise. PremAI's autonomous fine-tuning handles the ML complexity. Upload data, set goals, and the platform runs experiments automatically. No ML team required for enterprise AI fine-tuning.

Can You Use Both? (Yes, and Many Teams Do)

This isn't actually an either/or decision. PremAI is available on AWS Marketplace, and the two platforms solve different parts of the AI stack.

The hybrid approach:

Use Bedrock for general-purpose inference where you need fast access to multiple foundation models. Chatbots, summarization, content generation, simple tasks that don't need custom training.

Use PremAI for specialized models where domain accuracy and data control matter. Compliance workflows, fraud detection, medical analysis, proprietary AI capabilities that create competitive advantage.

PremAI also supports a "bring your own endpoint" capability. You can route Bedrock-deployed models through PremAI's platform, giving you a unified interface across both managed and self-hosted models.
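The routing policy behind this hybrid setup fits in a few lines. PremAI's "bring your own endpoint" feature isn't documented in detail here, so this is a hypothetical, framework-agnostic version: the endpoint URLs, model names, and task labels are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    name: str
    base_url: str
    model: str


# Hypothetical endpoints and model names for illustration only.
MANAGED = Backend("bedrock", "https://bedrock-gateway.example.internal/v1", "general-purpose-model")
SOVEREIGN = Backend("self-hosted", "http://10.0.0.5:8000/v1", "compliance-finetune")

# Task types that should always run on the specialized, self-hosted models.
SPECIALIZED_TASKS = {"compliance", "fraud", "medical"}


def choose_backend(task: str, contains_sensitive_data: bool) -> Backend:
    """Sensitive or specialized work stays self-hosted; everything else uses the managed API."""
    if contains_sensitive_data or task in SPECIALIZED_TASKS:
        return SOVEREIGN
    return MANAGED
```

Putting the policy in one function keeps the sensitive-data rule auditable: the compliance team reviews a single branch instead of every call site.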

The point: don't put all your eggs in one basket. Use managed APIs where convenience matters and sovereign infrastructure where control matters. Many enterprise AI strategies for 2025 and beyond are moving toward exactly this kind of hybrid setup.

FAQ

1. Is Amazon Bedrock truly private?

Bedrock encrypts your data, keeps it in your chosen AWS region, and doesn't use it for training. That's strong security. But your data still processes on AWS infrastructure, and there are no cryptographic proofs of how it was handled. For most companies, Bedrock's privacy is sufficient. For regulated industries needing verifiable sovereignty, it may not be.

2. Can PremAI work with AWS infrastructure?

Yes. PremAI is available on AWS Marketplace as a SaaS deployment. You can also deploy PremAI within your own AWS VPC, or use a hybrid setup with on-premise and cloud components. The platforms aren't competitors so much as they solve different problems within the same ecosystem.

3. Which is cheaper for enterprise-scale generative AI?

Depends on volume. Under 100M tokens/month, Bedrock's pay-per-token model avoids upfront costs and is often simpler to budget. Above 500M tokens/month, PremAI's infrastructure-based pricing typically delivers 50-70% cost reduction. The hidden costs (CloudWatch, OpenSearch, provisioned throughput) on the Bedrock side are what catch most teams off guard.

4. Do I need ML expertise for either platform?

Bedrock requires minimal ML knowledge for basic inference and RAG. Fine-tuning on Bedrock needs some understanding of training data preparation. PremAI's autonomous fine-tuning pipeline was designed to remove the ML expertise bottleneck. Upload data, set objectives, and the platform handles experiment design, hyperparameter optimization, and evaluation automatically.

5. Can I migrate from OpenAI to either platform?

Both support it. Bedrock gives you a managed alternative with multi-model access through a single API. PremAI offers OpenAI-compatible endpoints, so applications built on the OpenAI SDK can switch to self-hosted PremAI models with minimal code changes. The OpenAI to Bedrock path is simpler for teams that want to stay in managed cloud. The OpenAI to PremAI path makes more sense for teams that want to own their models long-term.

The Bottom Line

Bedrock and PremAI aren't competing for the same job. Bedrock is the best way to get fast, managed access to 100+ foundation models inside AWS. PremAI is the best way to own your AI stack, from training data to deployed model, with full data sovereignty.

If your generative AI needs are mostly inference on standard models, you're already running on AWS, and VPC isolation checks your compliance boxes, Bedrock is the straightforward choice. You'll be up and running in hours.

If you're processing sensitive data in regulated industries, need specialized custom models that outperform generic APIs, or your token volume has made per-request pricing unsustainable, PremAI solves those problems in ways that managed cloud APIs structurally can't.

Most enterprise teams will eventually use elements of both. Managed APIs for general tasks, sovereign infrastructure for the AI capabilities that actually differentiate their business.

The question isn't which platform is better. It's which problems you're solving first.

Ready to own your AI? Start with Prem Studio to build, fine-tune, and deploy custom models on your infrastructure. Or book a demo to see how PremAI fits your enterprise AI stack.