16 Best OpenRouter Alternatives for Private, Production AI (2026)

Compare 16 OpenRouter alternatives for production AI. Covers private gateways, self-hosted proxies, enterprise platforms, and high-speed inference providers.

OpenRouter gives you one API key for hundreds of LLMs. For prototyping, that's enough. For production AI systems, it starts to crack.

The 5% markup hits hard at scale. On $100K/month in API spend, that's $60K/year just for routing. There's no self-hosted option, which means every request passes through a third-party proxy. Observability is thin. And if your industry requires data residency controls, OpenRouter simply doesn't offer them.

We tested and compared 16 alternatives to OpenRouter across four categories: AI gateways, enterprise platforms, inference providers, and self-hosted options. Some drop the markup entirely. Others give you full control over where your data lives. A few handle the entire model lifecycle from training data to deployment.

Quick Comparison Table

AI Gateways and Routing Proxies

These are the closest functional alternatives to OpenRouter. They sit between your application and LLM providers, handling routing, fallbacks, and API translation across multiple models.

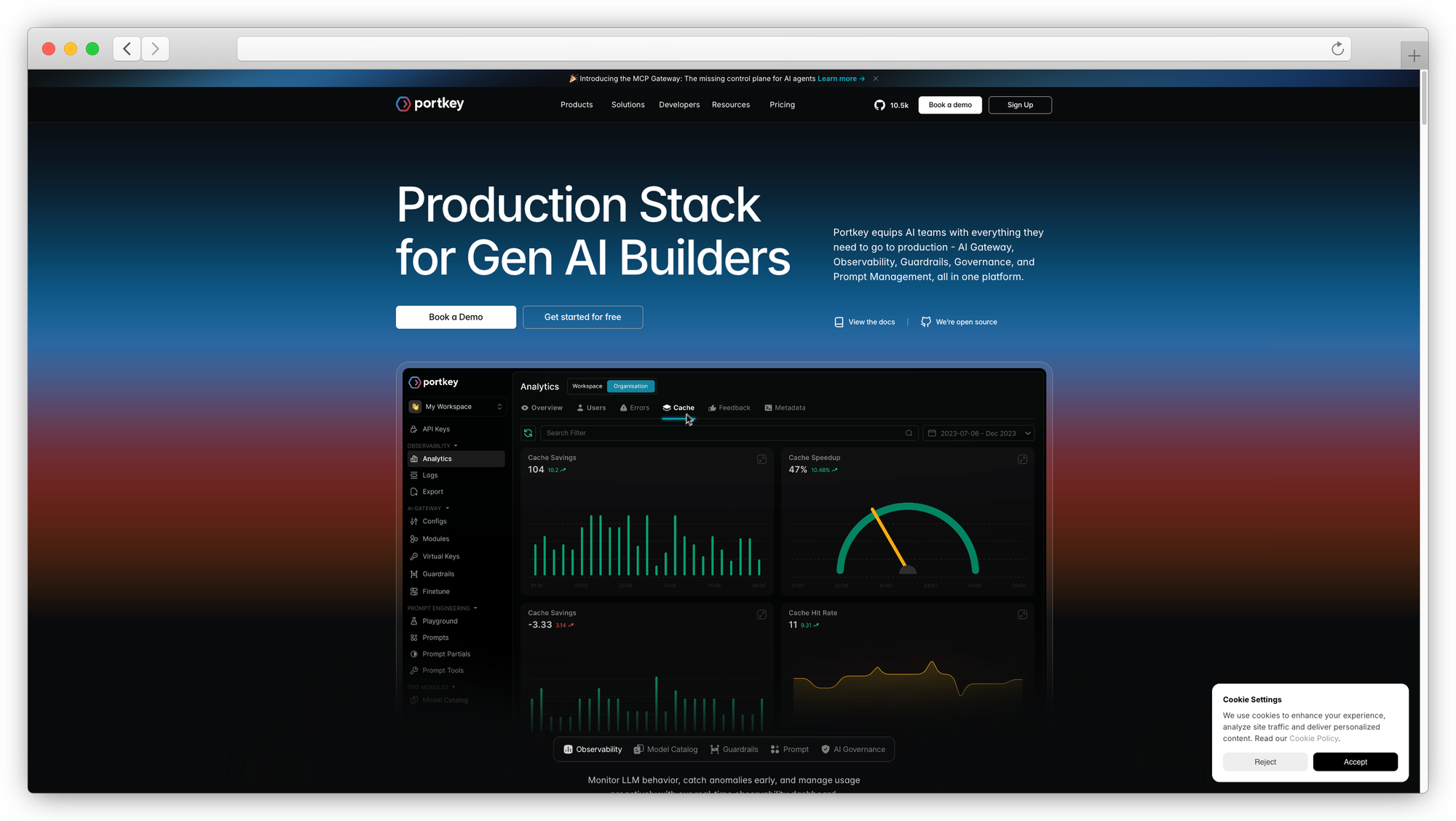

1. Portkey

Enterprise AI gateway with built-in compliance and observability.

Portkey routes requests across 200+ LLMs with smart caching, retries, and load balancing. SOC2, GDPR, and HIPAA compliance come standard. The observability layer tracks cost per user, latency distributions, and error rates natively. Self-hostable or managed. 10K+ GitHub stars.

Fits: Regulated industries needing compliance-first routing. Gaps: Managed tier pricing adds up at high volume.

2. LiteLLM

Open-source Python proxy. 100+ LLM APIs in OpenAI format. Zero markup.

Install with pip or run as a Docker container. LiteLLM translates different provider formats (Anthropic, Cohere, Bedrock, Vertex AI) into a unified API, so swapping Claude for Gemini is a config change. Budget tracking, load balancing, and fallback routing are built in.

The trade-off: you manage everything. No hosted dashboard, no support team. You run it, you maintain it.

Fits: Teams that want full control over their gateway logic and can handle the ops. Gaps: Requires technical chops. No built-in compliance features.

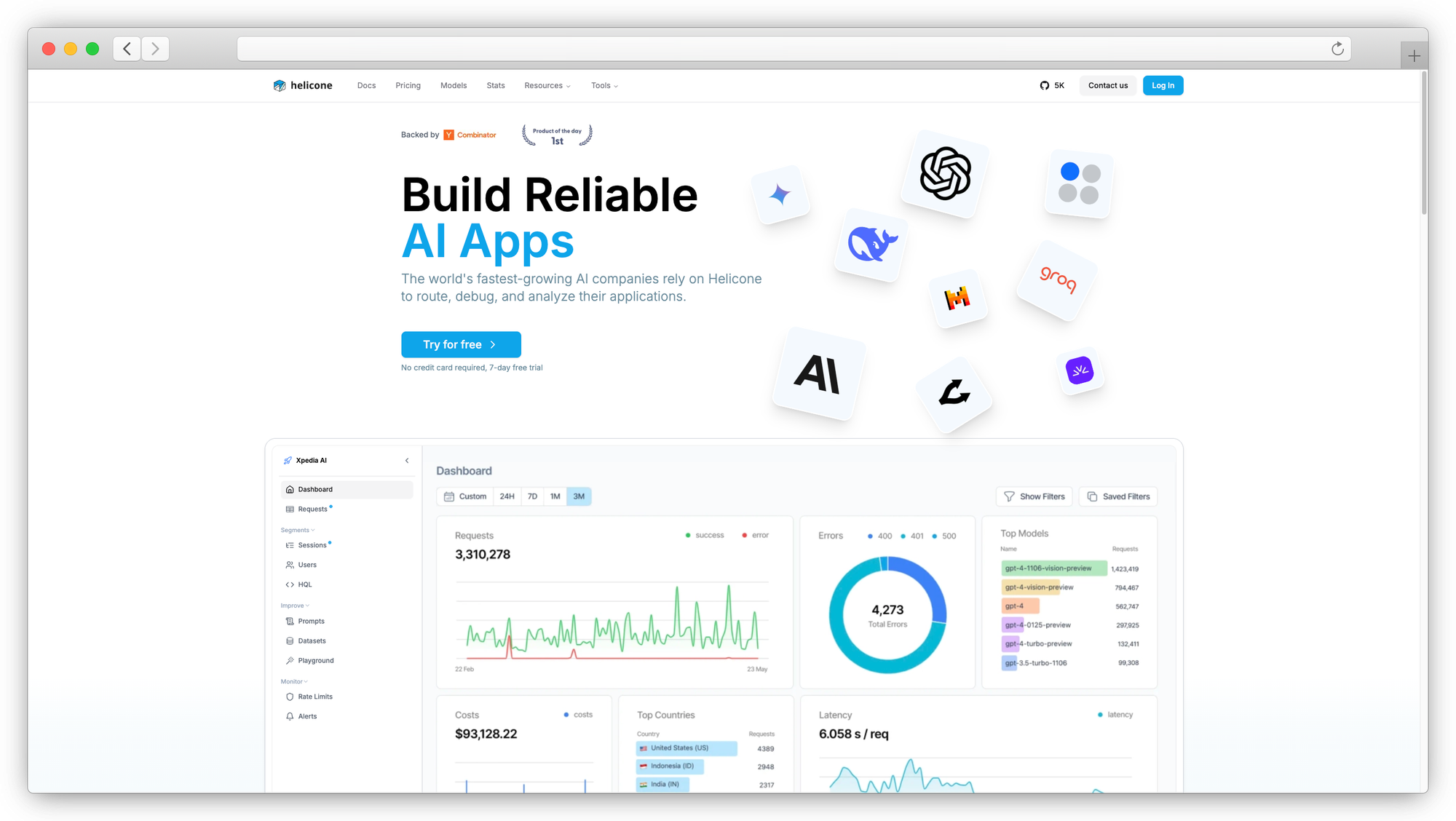

3. Helicone

Zero-markup gateway with real-time cost tracking and latency monitoring.

Migration from OpenRouter takes minutes: swap your base URL and API key. Helicone charges no markup on provider costs, which directly addresses OpenRouter's 5% tax.

Real-time cost tracking, latency monitoring, and error analysis come standard. Self-host via Docker or Kubernetes for full data residency, or use managed cloud.

Fits: Teams migrating off OpenRouter who want the same simplicity with better cost visibility. Gaps: Smaller model catalog. Enterprise features are newer.

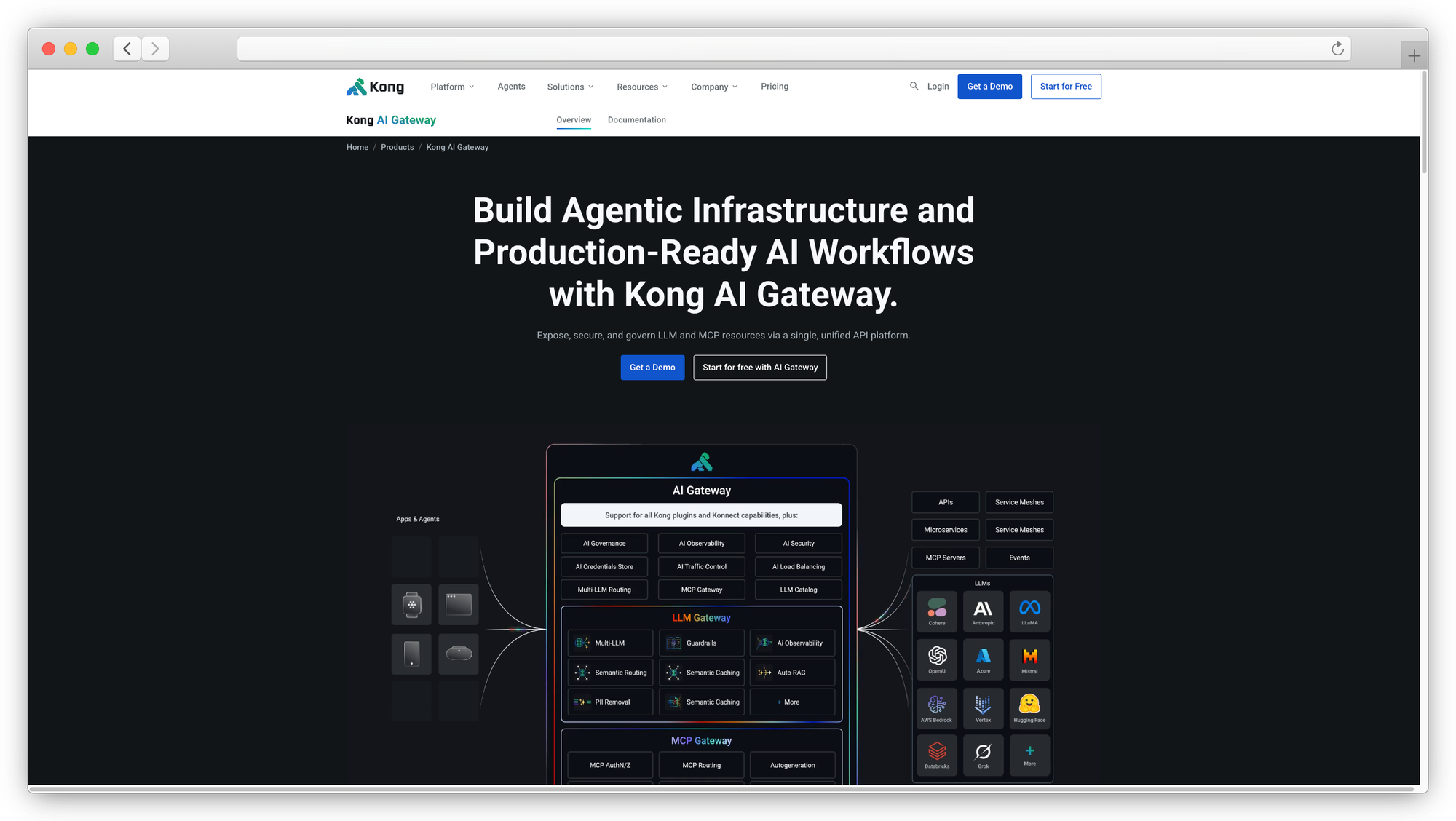

4. Kong AI Gateway

Open-source API gateway extended for AI traffic management.

Kong AI Gateway is an open-source extension of Kong Gateway. It operates at the network edge, so rate limiting, authentication, and routing happen before requests reach your backend. If you're already running Kong for API governance, adding LLM routing slots right in.

Setup takes more effort than purpose-built alternatives. Kong wasn't built for AI from the start, and you'll wire up caching and observability yourself through plugins.

Fits: Teams already on Kong who want to centralize AI traffic with existing API infrastructure. Gaps: Steep learning curve if you're not already a Kong user.

5. Cloudflare AI Gateway

Basic AI routing on Cloudflare's global CDN.

If your stack already lives on Cloudflare, adding an AI gateway takes minutes. Caching, rate limiting, and basic analytics included. The CDN means low-latency routing requests to providers regardless of user location.

Cloud-only, no self-hosting. Most teams outgrow it once they need advanced routing logic or compliance features.

Fits: Cloudflare users who need basic LLM routing fast. Gaps: No self-hosting. Thin customization.

6. Vercel AI Gateway

Managed, OpenAI-compatible gateway with tight Next.js integration.

Vercel's gateway handles routing, fallbacks, and bring-your-own-key support for multiple LLM providers. Integrates directly with the Vercel AI SDK. Managed-only, no self-hosting option.

Fits: Frontend teams already on Vercel/Next.js. Gaps: Cloud-only. Limited to Vercel ecosystem.

Enterprise and Privacy-First Platforms

If your primary concern is data sovereignty, these platforms go beyond routing. They offer infrastructure-level privacy guarantees that a gateway alone can't match.

7. Prem AI

Swiss-based confidential AI platform. Full model lifecycle with zero data retention.

Prem AI isn't a routing layer. It handles the full pipeline: dataset preparation with automatic PII redaction, autonomous fine-tuning across 30+ base models, LLM-as-a-judge evaluations, and one-click deployment to your own AWS VPC or on-prem hardware.

Every interaction is cryptographically verified. The architecture is stateless, meaning nothing persists after inference. GDPR, HIPAA, and SOC2 compliance come through Swiss jurisdiction under the FADP. Prem Studio lets you run up to 6 concurrent fine-tuning experiments and deploy specialized reasoning models at sub-100ms inference latency.

If your concern with OpenRouter is that every prompt flows through a third-party proxy with no visibility into data handling, Prem removes that dependency entirely. You own the models, the data, and the infrastructure. See the enterprise setup.

Fits: Regulated industries (finance, healthcare, government) that need AI sovereignty and a complete fine-tuning pipeline. Gaps: Not a simple routing swap. This is a platform commitment, not a drop-in alternative.

8. TrueFoundry

Deploy an AI gateway inside your own VPC with enterprise governance.

TrueFoundry runs entirely within your controlled environment. Data never touches an external proxy. Supports agentic AI workflows and multi-model orchestration. Enterprise-tier pricing, aimed at organizations with dedicated MLOps teams.

Fits: Large orgs that need on-premise AI infrastructure. Gaps: Enterprise pricing. Requires MLOps expertise.

9. Eden AI

Multi-service AI aggregator. One unified API for text, vision, speech, translation, and more.

Eden AI goes wider than most alternatives to OpenRouter. Beyond LLMs, it provides a single API for image generation, translation, text-to-speech, OCR, and sentiment analysis across dozens of AI providers. Pay-as-you-go, no vendor lock-in.

Fits: Teams that need access to multiple AI services beyond language models. Gaps: Weaker on LLM-specific routing, cache, and monitoring.

High-Performance Inference Providers

Not every team needs a routing layer. Sometimes the bottleneck is raw speed or cost per token. These providers focus on fast, affordable model serving.

10. Together AI

200+ models with sub-100ms latency. Fine-tuning included.

Together AI hosts open-source and proprietary models with solid inference performance. Fine-tuning on the same platform means you're not stitching together separate tools for training and serving. VPC deployment available for enterprise AI customers. Training costs start at $2 per million tokens.

Fits: Teams that want fast inference and model customization on one platform. Gaps: Not fully self-hosted unless on VPC tier. Less routing flexibility than a dedicated AI gateway.

11. Fireworks AI

Optimized for throughput. 4x lower latency than vLLM on common architectures.

Fireworks built their stack for production AI workloads where every millisecond counts. Training costs at $2 per million tokens. Model variety is narrower than OpenRouter, but inference performance is strong on supported architectures.

Fits: Latency-sensitive applications: real-time chat, code completion, search augmentation. Gaps: Smaller model catalog. No self-hosting.

12. Groq

Custom LPU hardware. Fastest first-token latency in benchmarks.

Groq runs inference on proprietary Language Processing Unit chips. Independent benchmarks show 0.13s first-token latency on short prompts, 0.14s on long prompts. Nothing on shared cloud GPUs comes close.

Model selection is limited to what Groq's hardware supports. But for those models, the speed gap is obvious.

Fits: Applications where inference speed is the primary constraint. Gaps: Limited model selection. No fine-tuning or self-hosting.

13. SambaNova

Custom RDU chips. Enterprise-focused with on-prem options.

SambaNova builds Reconfigurable Dataflow Unit chips for AI workloads. On-premise deployment, strong long-context support, high-throughput batch processing. Not a drop-in alternative to OpenRouter. This is an infrastructure play for large organizations running AI at scale on dedicated silicon.

Fits: Large enterprises with heavy compute needs and hardware budget. Gaps: High cost of entry. Not practical for small teams.

Open-Source and Self-Hosted Options

For teams that want to eliminate third-party dependencies entirely. Run open-source models on your own hardware, keep data local, pay nothing for routing. Our self-hosted LLM guide covers the setup and costs in more detail.

14. Ollama

Run open-source LLMs locally with a single command. OpenAI-compatible API.

Pull a model, start serving. Supports Llama, Mistral, Gemma, and dozens more. The API is OpenAI-compatible, so switching from a cloud provider is a config change. Fastest path from zero to a self-hosted local LLM.

Fits: Developers who want local model access for prototyping or privacy-sensitive work. Gaps: Not built for production-scale serving. Limited by local hardware.

15. GPT4All

Desktop app for running open-source models offline. No internet required.

GPT4All runs entirely on your machine. No API calls, no data leaving your device. Works across Windows, Mac, and Linux. Good for individual developers and researchers who want a local AI chat interface without touching a terminal. Not meant for production API serving.

Fits: Individual users who want offline, private LLM access. Gaps: No API serving. No multi-user support.

16. Unify AI

Routes each query to the cheapest or fastest provider using real-time benchmarks.

Unify continuously tests LLM provider performance and picks the best endpoint per request. Optimizes for cost, speed, or both. A smart routing layer on top of existing providers, not a replacement for them.

Fits: Teams running multiple AI providers who want to simplify cost management. Gaps: Adds another dependency. Routing decisions are opaque without digging into analytics.

How to Choose the Right OpenRouter Alternative

The right pick depends on what's actually limiting you.

- Cutting costs: LiteLLM (free, self-hosted), Helicone (zero markup, managed), or Unify (automatic cost optimization across providers).

- Privacy and compliance: Prem AI for Swiss-jurisdiction sovereignty with cryptographic verification. TrueFoundry for VPC-only deployment. Portkey for SOC2/HIPAA with a managed gateway option.

- Raw speed: Groq for lowest latency on supported models. Fireworks for high-throughput production workloads. SambaNova for enterprise batch processing.

- Quick setup: Ollama for local dev. Cloudflare or Vercel if you're already on those platforms. Minimal config, minimal overhead.

- Full model lifecycle: Prem AI and Together AI both handle fine-tuning and deployment alongside inference, so you're not stitching together separate tools for each step.

If you've outgrown OpenRouter, the question isn't which alternative is "best." It's whether you need a better router or a better platform.

FAQ

1. What is OpenRouter and why look for alternatives?

OpenRouter is an LLM aggregator providing a unified API for hundreds of AI models through a single API key. Teams switch when the 5% markup gets expensive, when they need self-hosted deployment for data residency, or when they need better observability and compliance.

2. Can I self-host an OpenRouter alternative?

LiteLLM, Helicone, Kong AI Gateway, and Ollama are fully open-source and self-hostable. Prem AI and TrueFoundry offer on-premise or VPC deployment. Portkey supports self-hosting too. Cloudflare and Vercel are cloud-only.

3. Which OpenRouter alternative is best for enterprise AI?

Prem AI for data sovereignty with Swiss jurisdiction, zero data retention, and a full fine-tuning pipeline. Portkey for SOC2/HIPAA with a managed AI gateway. TrueFoundry for deploying entirely within your own VPC.

4. Is LiteLLM a good replacement for OpenRouter?

It's the closest open-source equivalent. Translates 100+ LLM APIs into OpenAI format, handles load balancing and fallbacks, costs nothing. The gap is managed features: no dashboard, no support team, no compliance tooling. If your team can handle the infrastructure, it's one of the strongest picks.

Bottom Line

OpenRouter works fine for experimentation. Once you're running AI in production, you need more control over cost, privacy, and how your models are served.

If your main frustration is the 5% markup, LiteLLM or Helicone solve that in an afternoon. If speed is the bottleneck, Groq and Fireworks deliver inference performance that no aggregator can match.

But if your team needs the full picture, data sovereignty, fine-tuning on your own data, and deployment to infrastructure you control, Prem AI handles the entire lifecycle. From dataset preparation to autonomous fine-tuning to production deployment, everything stays within your environment. Swiss jurisdiction. Zero data retention. Cryptographic proof on every interaction.

Start with Prem Studio or book a demo to see how it fits your stack.