Enterprise AI Trends for 2025: What's Next for Businesses?

In 2025, enterprises will accelerate Edge AI adoption, use multimodal AI for richer analytics, and deploy Multi-Agent Systems to automate complex work, while placing greater emphasis on sustainability, governance, explainability, and workforce readiness in their AI deployment strategies.

In 2025, enterprises will continue to refine their AI strategies, moving beyond experimentation to full-scale deployment. This article examines the key AI trends influencing enterprises, encompassing the adoption of Edge AI, multimodal AI systems, sustainability considerations, AI governance, and workforce transformation. With AI rapidly evolving, companies must balance innovation with explainability, efficiency, and ethical responsibility.

The Rise of Edge AI in Business

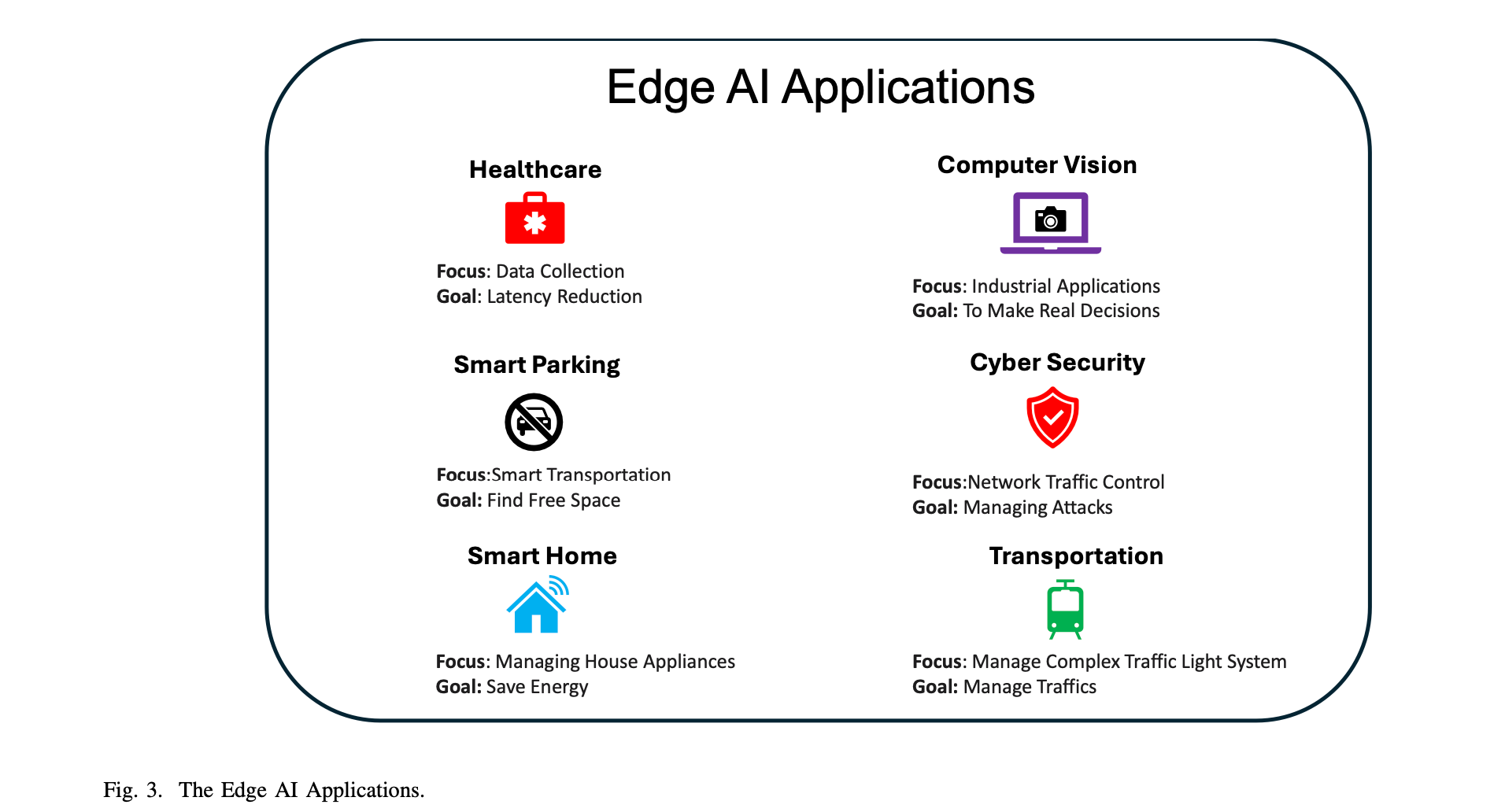

As businesses increasingly rely on AI for decision-making, the traditional cloud-based approach is showing its limits. High latency, rising cloud costs, and data security concerns are pushing enterprises toward Edge AI, which processes data on or near the device where it is generated, reducing dependence on the cloud.

Edge AI enables real-time decision-making, enhances data privacy, and improves operational efficiency, making it a game-changer in industries such as manufacturing, healthcare, and retail.

Why Companies Are Moving AI to the Edge

More enterprises are integrating Edge AI to overcome the challenges of centralized cloud AI models. Here’s why:

- Faster decision-making – Unlike cloud AI, which sends data to remote servers for processing, Edge AI analyzes information on local devices, cutting response times for applications such as autonomous systems, predictive maintenance, and fraud detection.

- Lower operational costs – Cloud storage and data transfer fees add up quickly at enterprise scale. Processing data locally with Edge AI reduces the volume sent to the cloud, cutting bandwidth costs and cloud dependence.

- Enhanced security and compliance – Under data privacy regulations such as GDPR and HIPAA, on-device AI processing lets businesses keep sensitive data local, reducing legal risk.

Example: In manufacturing, AI-powered visual inspection systems running at the edge detect defects in milliseconds, preventing defective products from moving down the production line.
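The bandwidth argument can be made concrete with a small Python sketch. It assumes a device that reads one numeric sensor value at a time and uploads only readings that look anomalous, instead of streaming everything to the cloud. The class name, window size, and z-score threshold are illustrative assumptions, not a reference design.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Iterable, List


class EdgeAnomalyFilter:
    """Runs on the device: keeps a rolling window of recent readings and
    flags only values that deviate strongly from that local baseline."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_history: int = 10):
        self.readings = deque(maxlen=window)  # recent history, kept locally
        self.threshold = threshold            # z-score above which a reading is reported
        self.min_history = min_history        # readings needed before judging anomalies

    def should_upload(self, value: float) -> bool:
        """Return True if this reading should be sent upstream."""
        is_anomaly = False
        if len(self.readings) >= self.min_history:
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.readings.append(value)
        return is_anomaly


def filter_stream(stream: Iterable[float], edge_filter: EdgeAnomalyFilter) -> List[float]:
    """Process a stream locally and return only the readings worth uploading."""
    return [v for v in stream if edge_filter.should_upload(v)]


# Simulated sensor: small periodic fluctuations followed by one spike.
readings = [20.0 + 0.1 * (i % 5) for i in range(200)] + [35.0]
uploaded = filter_stream(readings, EdgeAnomalyFilter())
print(f"{len(uploaded)} of {len(readings)} readings uploaded: {uploaded}")
```

A rolling z-score is about the simplest possible detector; a production deployment would more likely run a trained model on the device, but the pattern of deciding locally and transmitting only exceptions is what produces the latency and bandwidth savings.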

Real-World Examples of Edge AI

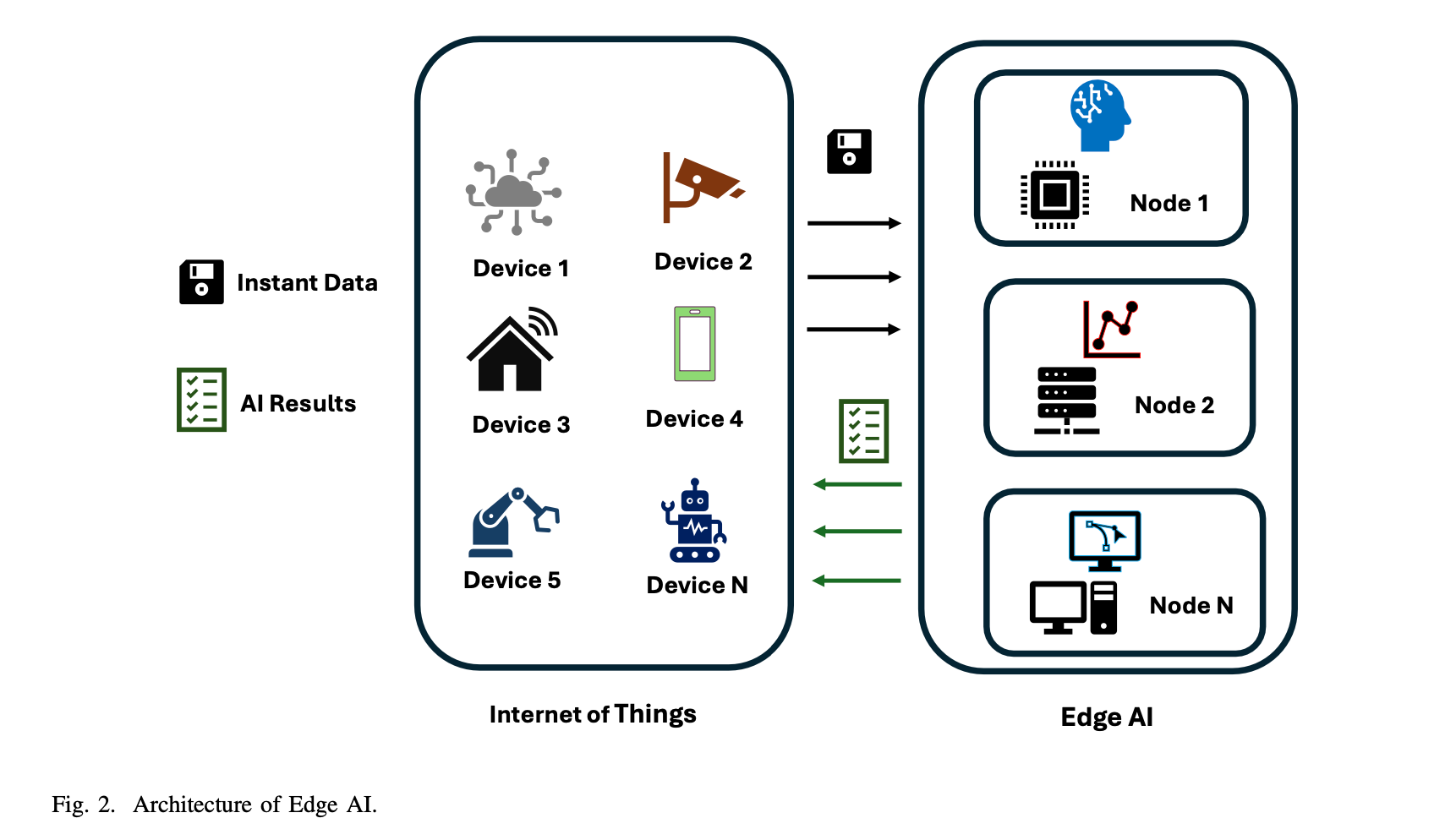

Edge AI is unlocking new efficiencies across multiple industries:

- Healthcare: Wearable devices and medical imaging tools use Edge AI for real-time patient monitoring and diagnostics, reducing reliance on cloud-based analysis.

- Retail & Smart Spaces: Smart checkout systems, AI-powered security cameras, and real-time inventory tracking reduce theft and improve customer experience.

- Manufacturing: AI-driven predictive maintenance anticipates machine failures before they occur, reducing downtime and improving productivity.

- Financial Services: Edge AI enhances fraud detection by analyzing transaction patterns in real time, minimizing security risks.

Common Roadblocks to Implementing Edge AI

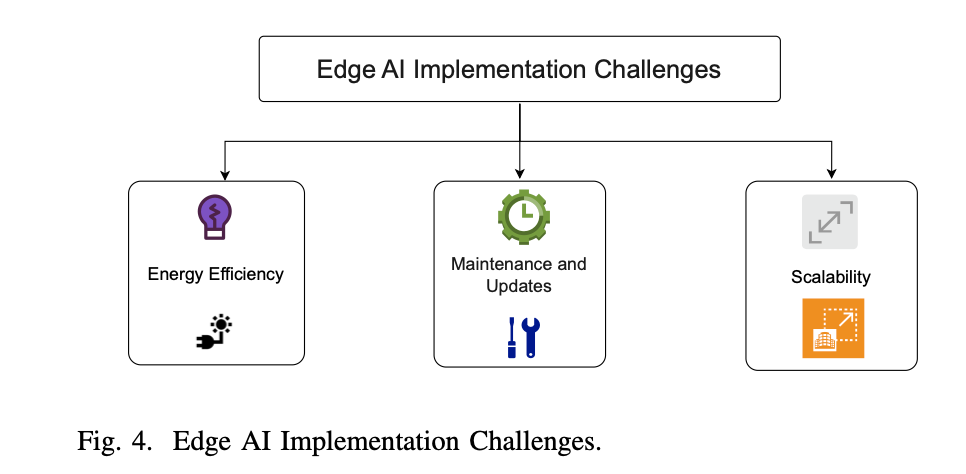

Despite its advantages, Edge AI adoption presents technical and infrastructural challenges:

- Scalability issues – Managing AI across thousands of distributed edge devices requires centralized model orchestration and updates.

- Computing power limitations – Edge devices have limited processing capacity, requiring optimized AI models for efficient inference.

- Security risks – Because edge devices are deployed across various locations, they are vulnerable to cyberattacks and data breaches, requiring strong encryption and secure access protocols.

Example: A global logistics company using AI-powered cameras for real-time fleet tracking faced security threats from compromised edge devices, which led it to implement end-to-end encryption for all AI data flows.
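Encryption protects confidentiality, but an edge fleet also needs to know that a message really came from a trusted device and was not altered in transit. The Python sketch below shows per-device message authentication with HMAC-SHA256 from the standard library. It assumes each device shares a secret key with the backend; the key handling and message format are hypothetical. HMAC provides integrity and authenticity, not confidentiality, so in practice it would complement encryption (for example TLS), and a real protocol would also add timestamps or counters to block replayed messages, which this sketch omits.

```python
import hashlib
import hmac
import json
import secrets
from typing import Optional

# Hypothetical per-device secret; in practice it would be provisioned securely
# (for example at manufacture) and kept in protected hardware.
DEVICE_KEY = secrets.token_bytes(32)


def sign_message(payload: dict, key: bytes) -> dict:
    """Serialize the payload deterministically and attach an HMAC-SHA256 tag."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(message: dict, key: bytes) -> Optional[dict]:
    """Return the payload if the tag matches, otherwise None."""
    expected = hmac.new(key, message["body"].encode(), hashlib.sha256).hexdigest()
    # compare_digest compares in constant time to avoid timing side channels.
    if not hmac.compare_digest(expected, message["tag"]):
        return None
    return json.loads(message["body"])


# A hypothetical fleet-tracking reading.
msg = sign_message({"truck_id": "T-17", "lat": 52.52, "lon": 13.40}, DEVICE_KEY)
tampered = dict(msg, body=msg["body"].replace("T-17", "T-99"))
print(verify_message(msg, DEVICE_KEY))       # the original payload
print(verify_message(tampered, DEVICE_KEY))  # None: the body was altered
```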

Going Beyond Text: The Era of Multimodal and Multi-Agent AI

As enterprise AI evolves, businesses are moving beyond traditional text-based solutions toward multimodal intelligence: AI systems capable of understanding and producing diverse content such as text, images, audio, and video. At the same time, Multi-Agent Systems (MAS) are increasingly being adopted, enabling specialized AI agents to collaborate on automating complex business tasks.

The Shift to Multimodal AI

Until recently, enterprise AI primarily relied on Natural Language Processing (NLP). Today, multimodal AI models, including OpenAI’s GPT-4o and Google's Gemini, are transforming enterprise applications by integrating multiple data types into cohesive workflows. This transition is critical as enterprises deal with large amounts of heterogeneous data, requiring solutions that efficiently interpret and act upon complex information.

Key applications of multimodal AI in enterprises include:

- Enhanced customer support: AI chatbots and virtual assistants can process and respond to user queries via text, voice, images, or screenshots, creating richer, more intuitive interactions.

- Advanced document processing: Multimodal AI improves document analysis and retrieval by interpreting visual elements such as tables, charts, and page layouts alongside text, enabling faster and more accurate knowledge management.

- Automation in compliance and regulatory environments: Systems capable of interpreting both textual and visual data streamline compliance workflows, such as document review and data extraction from scanned images or handwritten notes.

How Multimodal AI Works

Successfully integrating multiple data types requires advanced multimodal fusion methods. Enterprises commonly deploy one of the following fusion strategies based on their specific use cases:

- Early Fusion: Combines raw or low-level features from all modalities at the start of processing, suitable for tasks such as emotion recognition and real-time audio-visual synchronization.

- Intermediate Fusion: Independently processes each modality to create latent representations before combining them. This approach benefits use cases like multimodal sentiment analysis and video captioning, offering a balance between complexity and depth of understanding.

- Late Fusion: Analyzes modalities separately, combining results at the end. Commonly used in multimodal search and retrieval tasks due to its simplicity and preservation of distinct modal characteristics.

- Hybrid Fusion: Combines elements of early, intermediate, and late fusion, ideal for complex environments like autonomous driving, where understanding sensor data, video inputs, and real-time conditions simultaneously is critical.
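The fusion strategies differ mainly in where the modalities are combined. The toy Python sketch below makes that explicit for two modalities. The linear "encoders" and weight vectors are placeholder assumptions; real systems use learned neural encoders, but the points at which raw features, latent representations, or final scores are merged are the same.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def dot(w: Vector, x: Vector) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def encode(x: Vector, projection: Matrix) -> Vector:
    """Toy modality-specific encoder: a linear projection into a latent space."""
    return [dot(row, x) for row in projection]


def early_fusion(audio: Vector, video: Vector, w: Vector) -> float:
    """Concatenate the raw features first, then score them with a single model."""
    return dot(w, audio + video)


def intermediate_fusion(audio: Vector, video: Vector, audio_proj: Matrix,
                        video_proj: Matrix, w_latent: Vector) -> float:
    """Encode each modality separately, then combine the latent representations."""
    latent = encode(audio, audio_proj) + encode(video, video_proj)
    return dot(w_latent, latent)


def late_fusion(audio: Vector, video: Vector, w_audio: Vector,
                w_video: Vector, alpha: float = 0.5) -> float:
    """Score each modality independently and merge only the final outputs."""
    return alpha * dot(w_audio, audio) + (1 - alpha) * dot(w_video, video)


def hybrid_fusion(early_score: float, late_score: float, beta: float = 0.5) -> float:
    """One simple hybrid: blend the outputs of an early-fusion and a late-fusion path."""
    return beta * early_score + (1 - beta) * late_score


audio, video = [1.0, 2.0], [3.0]
early = early_fusion(audio, video, w=[1.0, 1.0, 1.0])
middle = intermediate_fusion(audio, video, [[1.0, 0.0], [0.0, 1.0]], [[2.0]], [1.0, 1.0, 1.0])
late = late_fusion(audio, video, w_audio=[1.0, 1.0], w_video=[2.0])
print(early, middle, late, hybrid_fusion(early, late))
```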

The Power of Multi-Agent Systems in Business Automation

Multi-Agent Systems (MAS) consist of specialized AI agents working collaboratively to handle complex enterprise tasks. These systems excel in scenarios requiring coordinated problem-solving and operational efficiency.

Benefits of Multi-Agent Systems in enterprises:

- Cross-functional collaboration: MAS allows different AI agents to independently manage tasks such as data analysis, customer interactions, and business process automation.

- Scalability and flexibility: The modular nature of MAS enables easy scaling and adaptation to evolving enterprise needs, making it highly suitable for dynamic business environments.

- No-code deployment: The adoption of no-code platforms has further accelerated MAS implementation by allowing non-technical users to create custom workflows, significantly reducing the technical barrier for AI adoption in enterprises.

Key enterprise use cases for MAS include:

- Financial services: Agents automate fraud detection, regulatory compliance, and risk assessment.

- Retail and e-commerce: AI-driven agents optimize inventory management, personalized recommendations, and customer support.

- HR processes: MAS streamline resume screening, employee onboarding, and skill management tasks.
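The coordination pattern behind MAS can be sketched in a few lines of Python. Here, hypothetical agents for a financial-services review each handle one specialty and write their findings to a shared task record that later agents can read. The agents are plain functions with made-up thresholds standing in for model- or LLM-backed agents; the orchestration structure, not the decision logic, is the point.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Task:
    kind: str                                              # which pipeline to run
    payload: dict                                          # input data for the agents
    results: Dict[str, str] = field(default_factory=dict)  # shared findings


Agent = Callable[[Task], str]


def fraud_agent(task: Task) -> str:
    # Hypothetical rule: flag unusually large transfers.
    return "flag" if task.payload.get("amount", 0) > 10_000 else "clear"


def compliance_agent(task: Task) -> str:
    # Hypothetical rule: require a completed identity (KYC) check.
    return "ok" if task.payload.get("kyc_verified", False) else "needs_kyc"


def risk_agent(task: Task) -> str:
    # Reads the other agents' findings from the shared task record.
    concerns = (task.results.get("fraud") == "flag"
                or task.results.get("compliance") == "needs_kyc")
    return "high" if concerns else "low"


class Orchestrator:
    """Routes each task through an ordered pipeline of specialized agents."""

    def __init__(self) -> None:
        self.pipelines: Dict[str, List[Tuple[str, Agent]]] = {}

    def register(self, kind: str, steps: List[Tuple[str, Agent]]) -> None:
        self.pipelines[kind] = steps

    def run(self, task: Task) -> Dict[str, str]:
        for name, agent in self.pipelines[task.kind]:
            task.results[name] = agent(task)
        return task.results


orchestrator = Orchestrator()
orchestrator.register("payment_review", [
    ("fraud", fraud_agent),
    ("compliance", compliance_agent),
    ("risk", risk_agent),
])
print(orchestrator.run(Task("payment_review", {"amount": 25_000, "kyc_verified": True})))
```

Adding a capability means registering another agent in a pipeline rather than rewriting existing ones, which is one way to realize the modularity that MAS promises.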

Challenges Enterprises Face with Multimodal and Multi-Agent AI

While multimodal AI and MAS have strong potential, several implementation challenges must be addressed:

- Computational resource demands: Processing multiple data modalities simultaneously significantly increases the computational load, requiring enterprises to adopt efficient algorithms and optimized hardware solutions.

- Ensuring consistency and governance: Multimodal models might produce inconsistent outcomes when interpreting various data forms, requiring enterprises to establish rigorous validation processes and governance frameworks.

- Integration complexity: Enterprises face technical hurdles integrating multimodal and multi-agent systems with existing IT infrastructure, such as legacy ERP and CRM systems, necessitating careful system architecture planning.

Addressing these challenges involves adopting strategic best practices such as modular system design, careful model optimization, comprehensive staff training, and robust AI governance frameworks.
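One concrete form of the validation processes mentioned above is a cross-modal consistency check: when two pipelines extract the same fields from different representations of a document (for example, a text parser on the digital file and a vision model on the scanned image), disagreements are routed to a human instead of being silently accepted. A minimal Python sketch follows; the field names and normalization rules are hypothetical.

```python
from typing import Dict, List


def normalize(value: str) -> str:
    """Ignore case, repeated whitespace, and thousands separators."""
    return " ".join(value.lower().split()).replace(",", "")


def cross_check(text_fields: Dict[str, str], image_fields: Dict[str, str],
                required: List[str]) -> List[str]:
    """Return human-readable issues for fields that are missing or disagree."""
    issues = []
    for name in required:
        a, b = text_fields.get(name), image_fields.get(name)
        missing = [source for source, v in (("text", a), ("image", b)) if v is None]
        if missing:
            issues.append(f"{name}: missing from {' and '.join(missing)} extraction")
        elif normalize(a) != normalize(b):
            issues.append(f"{name}: mismatch ({a!r} vs {b!r})")
    return issues


text_result = {"invoice_no": "INV-0042", "total": "1,200.00", "vendor": "Acme Corp"}
image_result = {"invoice_no": "INV-0042", "total": "1,280.00", "vendor": "ACME  corp"}
for issue in cross_check(text_result, image_result, ["invoice_no", "total", "vendor", "due_date"]):
    print(issue)  # in practice, each issue would go to a review queue
```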

The Hidden Costs of AI: Environmental Impact and Sustainability

As AI becomes deeply embedded in enterprise processes, its environmental impact is drawing increasing attention. Enterprises deploying AI at scale in 2025 must balance performance gains with sustainable practices that manage energy consumption and reduce carbon emissions across the AI lifecycle, from hardware production to model training, deployment, and daily operations.

AI's Growing Carbon Footprint

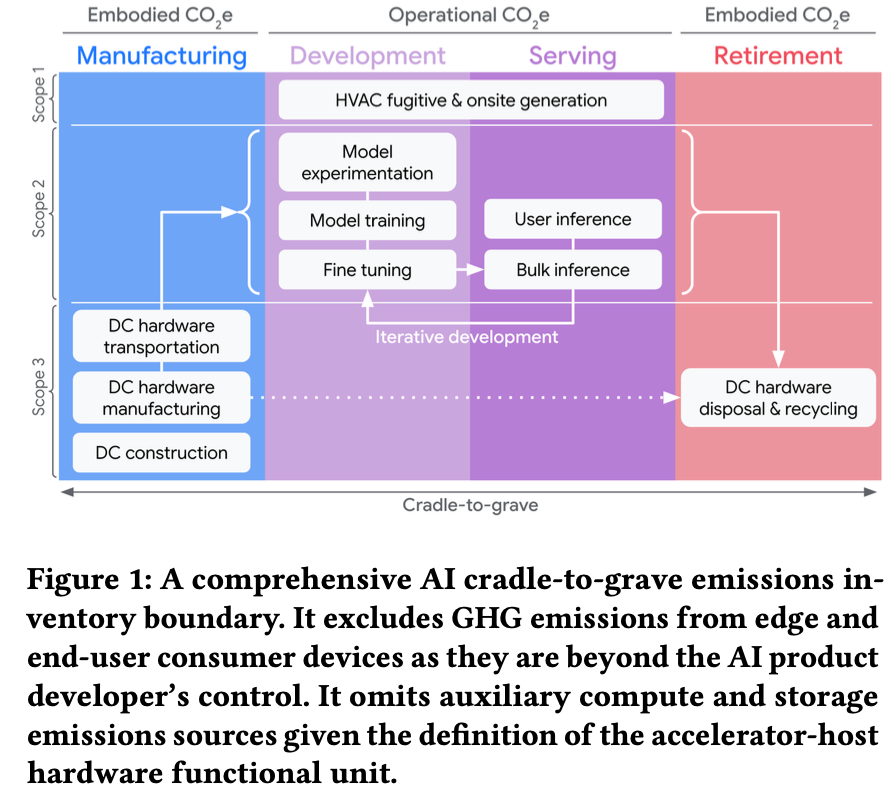

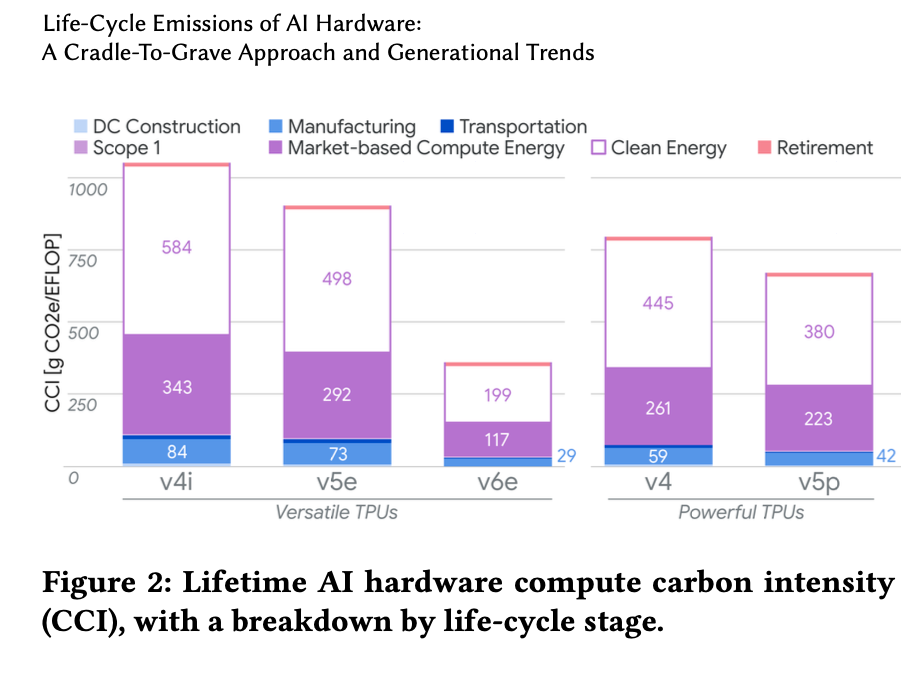

AI’s rapid expansion is significantly increasing energy consumption and greenhouse gas (GHG) emissions, driven primarily by data-intensive model training, inference, and hardware manufacturing. A recent lifecycle analysis by researchers at Google introduced the Compute Carbon Intensity (CCI) metric, which quantifies emissions per unit of computation. This gives enterprises a consistent basis for measuring and comparing the environmental impact of AI hardware and workloads.

The primary contributors to AI’s environmental footprint include:

- Model training and inference energy use: Training large-scale models like GPT-4 consumes vast amounts of electricity, and serving them at scale adds ongoing inference demand; the resulting emissions are highest when that energy comes from fossil-fuel-heavy grids.

- Data center infrastructure: Energy-intensive cooling systems and servers further amplify operational emissions.

- Hardware lifecycle: Manufacturing AI-specific accelerators (e.g., Tensor Processing Units or TPUs) contributes substantial embodied emissions, frequently overlooked in sustainability discussions.
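To see how these contributors combine, the Python sketch below estimates emissions per unit of compute for a single hypothetical training job: operational emissions from energy use (scaled by the data center's Power Usage Effectiveness, PUE, to capture cooling and power-delivery overhead) plus a share of the hardware's embodied emissions amortized over its service life. This is a simplified illustration in the spirit of a compute-carbon-intensity metric, not the exact methodology of the Google study, and every input value is an assumption rather than a measurement.

```python
from dataclasses import dataclass


@dataclass
class JobProfile:
    accelerators: int             # number of chips used by the job
    avg_power_kw: float           # average power draw per chip, in kW
    hours: float                  # wall-clock duration of the job
    pue: float                    # data center Power Usage Effectiveness (>= 1.0)
    grid_g_per_kwh: float         # grid carbon intensity, gCO2e per kWh
    embodied_kg_per_chip: float   # manufacturing emissions per chip, kgCO2e
    chip_lifetime_hours: float    # service life used to amortize embodied emissions
    total_flops: float            # floating-point operations performed by the job


def emissions_breakdown(job: JobProfile) -> dict:
    """Split a job's footprint into operational and embodied parts, plus grams per exaFLOP."""
    it_energy_kwh = job.accelerators * job.avg_power_kw * job.hours
    facility_energy_kwh = it_energy_kwh * job.pue  # adds cooling and power-delivery overhead
    operational_g = facility_energy_kwh * job.grid_g_per_kwh
    # Charge the job only its time-proportional share of each chip's manufacturing footprint.
    embodied_g = (job.accelerators * job.embodied_kg_per_chip * 1000
                  * job.hours / job.chip_lifetime_hours)
    total_g = operational_g + embodied_g
    return {
        "operational_kg": operational_g / 1000,
        "embodied_kg": embodied_g / 1000,
        "g_per_exaflop": total_g / (job.total_flops / 1e18),
    }


# Every number here is an illustrative assumption, not measured data.
job = JobProfile(accelerators=10, avg_power_kw=0.5, hours=100, pue=1.2,
                 grid_g_per_kwh=400, embodied_kg_per_chip=100,
                 chip_lifetime_hours=10_000, total_flops=1e21)
print(emissions_breakdown(job))
```

Changing one input at a time (a cleaner grid, a lower PUE, a longer chip lifetime) shows which lever matters most for a given workload.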

Reducing AI’s Energy Consumption

Enterprises are beginning to address AI’s environmental challenges through targeted sustainability strategies:

- Low-power AI hardware: Advanced chipsets, such as Google's newer TPU generations, have significantly reduced Compute Carbon Intensity by improving operational efficiency, offering lower energy consumption for equivalent performance.

- Optimized model techniques: Methods like quantization, pruning, and knowledge distillation can shrink AI models, decreasing both memory requirements and energy usage without significantly compromising accuracy.

- Federated and Edge AI: Moving computation to edge devices not only improves latency but reduces data transmission overhead, thus minimizing energy use and emissions associated with cloud infrastructure.
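Quantization is the simplest of these techniques to show in a few lines. The Python sketch below applies symmetric per-tensor int8 quantization to a list of weights: each float is divided by a single scale factor and rounded to an integer in [-127, 127]. In a real runtime those integers would be stored as 1-byte int8 values instead of 4-byte float32 values, at the cost of a bounded rounding error. Production toolchains add per-channel scales, calibration data, and integer compute kernels; this sketch shows only the core arithmetic, and the example weights are made up.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map integers back to approximate float values."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)  # the rounding error is at most scale / 2
```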

Additionally, enterprises are adopting carbon-aware computing practices, dynamically adjusting workloads based on real-time renewable energy availability, and actively selecting data centers powered by renewable or low-carbon energy sources.
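Carbon-aware scheduling of deferrable work, such as batch training or re-indexing jobs, reduces to a small search problem. The Python sketch below picks the region and start hour whose forecast grid carbon intensity (gCO2e/kWh) averages lowest over the job's duration. The region names and forecast values are hypothetical; in practice, forecasts would come from a grid operator or a carbon-intensity data provider.

```python
from typing import Dict, List, Tuple


def best_window(forecast: List[float], duration: int) -> Tuple[int, float]:
    """Return (start_hour, mean_intensity) for the contiguous window of `duration`
    hours with the lowest average forecast carbon intensity."""
    if not 0 < duration <= len(forecast):
        raise ValueError("duration must fit within the forecast horizon")
    best_start, best_avg = 0, float("inf")
    for start in range(len(forecast) - duration + 1):
        avg = sum(forecast[start:start + duration]) / duration
        if avg < best_avg:
            best_start, best_avg = start, avg
    return best_start, best_avg


def pick_region_and_window(forecasts: Dict[str, List[float]],
                           duration: int) -> Tuple[str, int, float]:
    """Compare the best window in every region and return the overall lowest."""
    candidates = [(region, *best_window(series, duration))
                  for region, series in forecasts.items()]
    return min(candidates, key=lambda c: c[2])


# Hypothetical hourly forecasts (gCO2e/kWh) for the next six hours.
forecasts = {
    "region-a": [450, 420, 300, 250, 260, 400],
    "region-b": [380, 370, 360, 350, 340, 330],
}
print(pick_region_and_window(forecasts, duration=2))
```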

How Sustainability is Changing AI Governance

Regulatory frameworks around environmental sustainability are growing stricter, compelling enterprises to integrate sustainability metrics within AI governance practices:

- Transparent emissions reporting: Enterprises will need comprehensive carbon accounting methodologies, such as those defined by the GHG Protocol, to transparently disclose AI’s environmental impact.

- Renewable energy commitments: Aligning with stricter environmental standards, enterprises increasingly source renewable energy or engage in hourly matching of clean electricity consumption to mitigate AI-driven emissions effectively.

- Integrating sustainability into AI governance: Incorporating carbon emission metrics like CCI into AI decision-making frameworks promotes responsible AI deployment aligned with corporate ESG (Environmental, Social, and Governance) strategies.

Companies actively adopting these practices are better positioned to meet global regulatory requirements and investor expectations regarding sustainable technology use.
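The difference between hourly matching and conventional annual matching is easiest to see with numbers. In annual matching, clean energy procured at any time counts against total consumption; in hourly matching, clean supply only counts against consumption in the same hour. A minimal Python sketch, assuming equal-length hourly series of consumption and clean supply in the same unit (the values are illustrative):

```python
from typing import List


def annual_match(load: List[float], clean: List[float]) -> float:
    """Share of consumption covered when clean supply is netted over the whole period."""
    return min(sum(clean), sum(load)) / sum(load)


def hourly_match(load: List[float], clean: List[float]) -> float:
    """Share of consumption covered by clean supply in the same hour;
    surplus clean energy in one hour cannot offset a deficit in another."""
    if len(load) != len(clean):
        raise ValueError("both series must cover the same hours")
    return sum(min(used, supplied) for used, supplied in zip(load, clean)) / sum(load)


# Illustrative four hours: a flat AI workload, with clean supply only in the last two.
load = [10.0, 10.0, 10.0, 10.0]
clean = [0.0, 0.0, 20.0, 20.0]
print(annual_match(load, clean))  # 1.0: looks fully covered on paper
print(hourly_match(load, clean))  # 0.5: only half is matched hour by hour
```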

Why Enterprises Need Explainable AI (XAI)

The widespread integration of AI into critical business processes has heightened concerns about transparency and interpretability. Enterprises, especially in regulated industries like finance, healthcare, and law, must ensure that AI-driven decisions are clear, trustworthy, and accountable. Explainable AI (XAI) thus emerges as an essential factor, aligning AI use with business ethics, compliance, and user trust.

Explainability as a Compliance Requirement

AI's "black-box" nature poses considerable challenges to transparency and accountability. In heavily regulated sectors, businesses must provide clear justifications for automated decisions. Adopting XAI helps enterprises:

- Meet regulatory standards (e.g., GDPR, EU AI Act) by transparently documenting decision-making processes.

- Improve customer and employee trust by clarifying how decisions, such as loan approvals or candidate screenings, are reached.

- Facilitate bias detection and mitigation, thereby reducing unintended discrimination and associated legal risks.

Companies that proactively adopt transparent AI systems can build stronger relationships with customers and regulators, thus creating competitive advantages.

Practical Approaches to Making AI Explainable

Several methodologies enhance AI interpretability, helping enterprises meet regulatory and operational requirements:

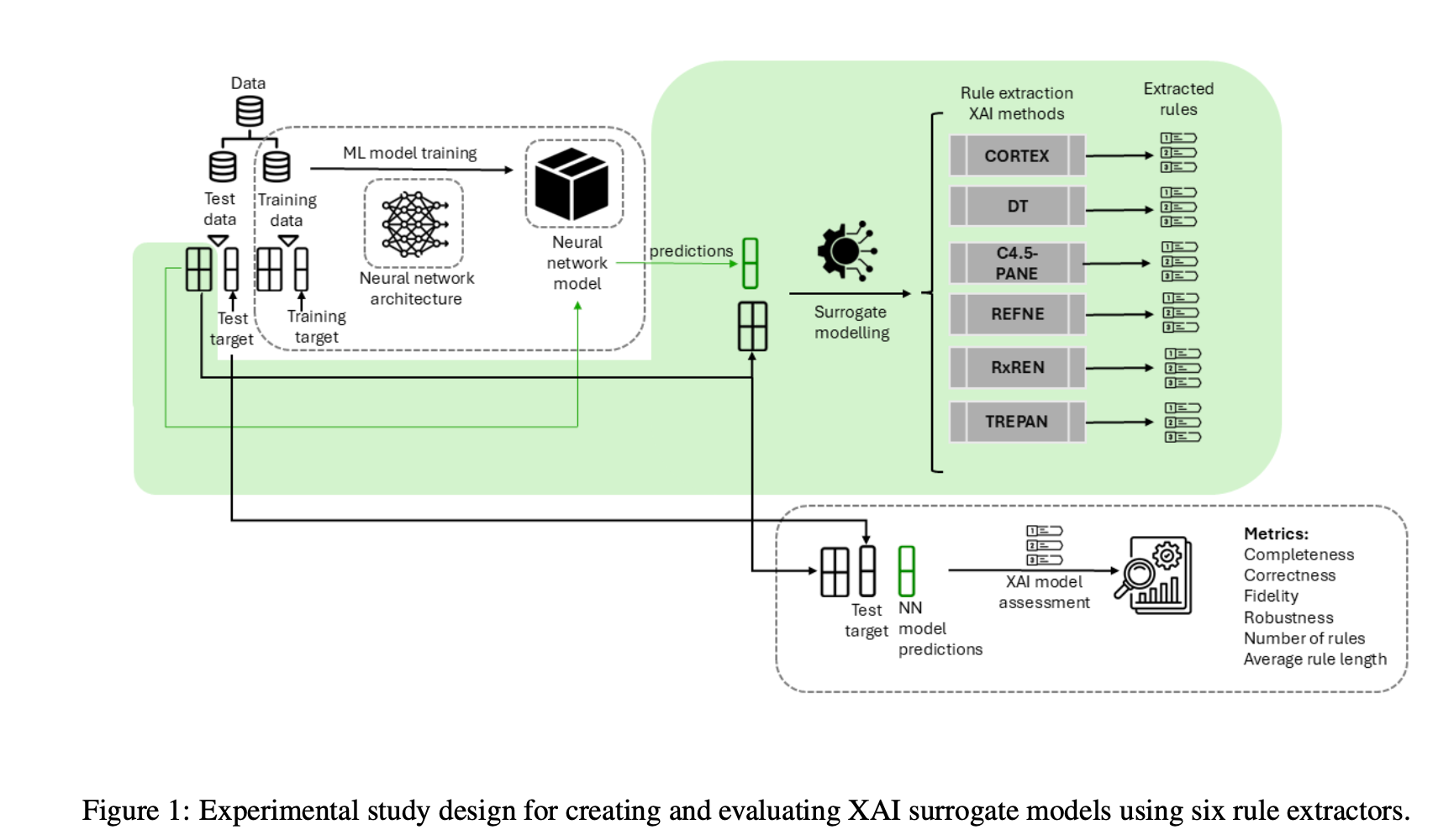

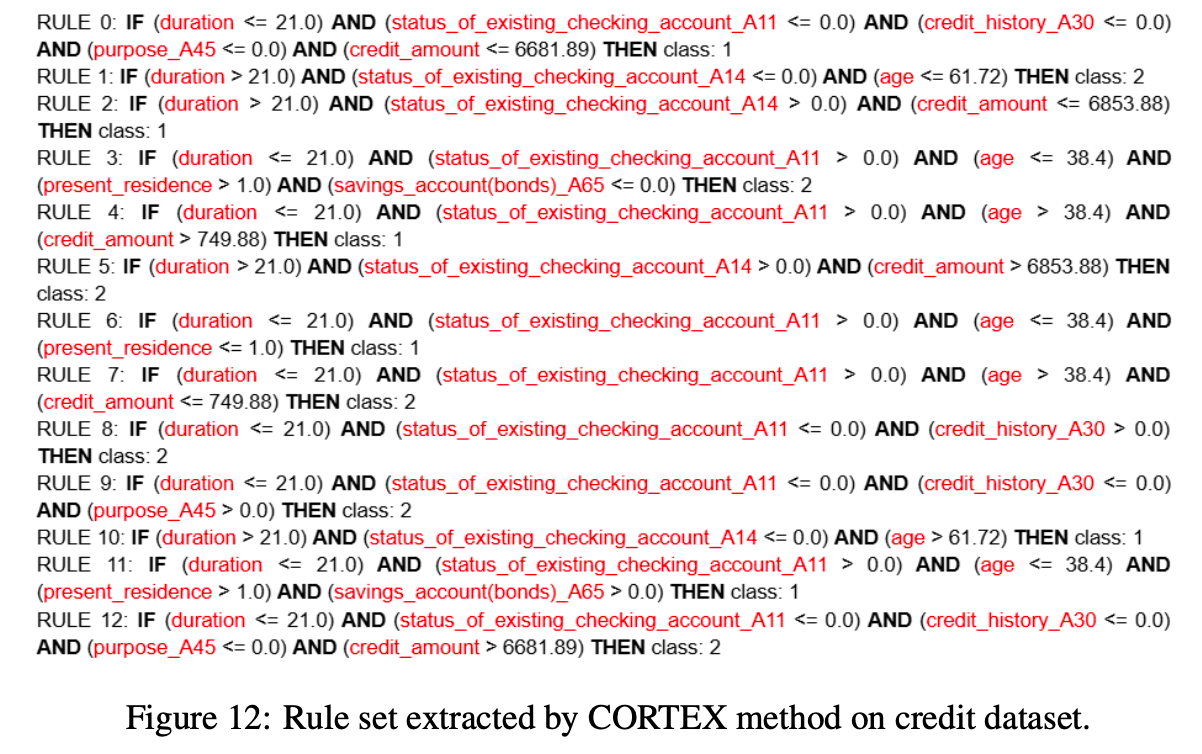

- Decision Trees and Rule-Based Models: These models naturally offer clear insights into how decisions are made, making them suitable for sensitive applications. Methods such as CORTEX provide interpretable rule sets that balance predictive performance with human readability, addressing cost-sensitive decisions effectively.

- Surrogate and Attribution Methods: Local Interpretable Model-agnostic Explanations (LIME) explains an individual prediction by fitting a simple, interpretable model to the complex model's behavior around that input, while SHapley Additive exPlanations (SHAP) attributes a prediction to each input feature using Shapley values from cooperative game theory.

- Hybrid and Cost-Sensitive Models: Enterprises can use hybrid methods that balance performance and interpretability, optimizing for specific use cases where clarity of decisions is critical. Cost-sensitive approaches, such as the CORTEX method, adjust model complexity based on interpretability requirements and accuracy trade-offs.
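The attribution idea behind SHAP can be computed exactly for a model with only a few features. The Python sketch below computes Shapley values by enumerating every coalition of features, replacing features outside a coalition with a baseline value. Exact enumeration needs on the order of 2^n model evaluations, so it is only practical for small n; libraries such as SHAP rely on approximations and background datasets instead. The scoring function and the all-zeros baseline are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def shapley_values(model: Callable[[Sequence[float]], float],
                   x: Sequence[float], baseline: Sequence[float]) -> List[float]:
    """Exact Shapley attributions for one prediction.
    Features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalitions of the other features, size 0..n-1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def credit_score(f: Sequence[float]) -> float:
    # Hypothetical model with an interaction between features 0 and 2.
    return 2.0 * f[0] - 3.0 * f[1] + f[0] * f[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(credit_score, x, baseline)
print(phi)  # per-feature contributions
print(sum(phi), credit_score(x) - credit_score(baseline))  # equal by construction
```

The last line checks the efficiency property: the attributions always sum to the difference between the prediction and the baseline prediction, which is what lets them serve as a per-decision explanation.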

Balancing Complexity, Performance, and Explainability

Despite clear benefits, explainable AI presents practical challenges that enterprises must address:

- Interpretability versus Accuracy: Highly interpretable models may not always achieve the accuracy of complex deep learning approaches. Enterprises need clear guidelines on acceptable trade-offs tailored to their use cases.

- Scalability: Implementing and maintaining explainable models across large-scale enterprise environments requires efficient tools, dedicated resources, and continuous validation frameworks.

- Integration and Legacy Systems: Ensuring seamless integration of explainable AI into existing systems (CRM, ERP, legacy databases) involves significant technical planning, often necessitating customized solutions.

By carefully considering these challenges, enterprises can effectively embed explainability into their AI strategy, improving stakeholder trust and regulatory compliance.

Closing the Workforce AI Gap

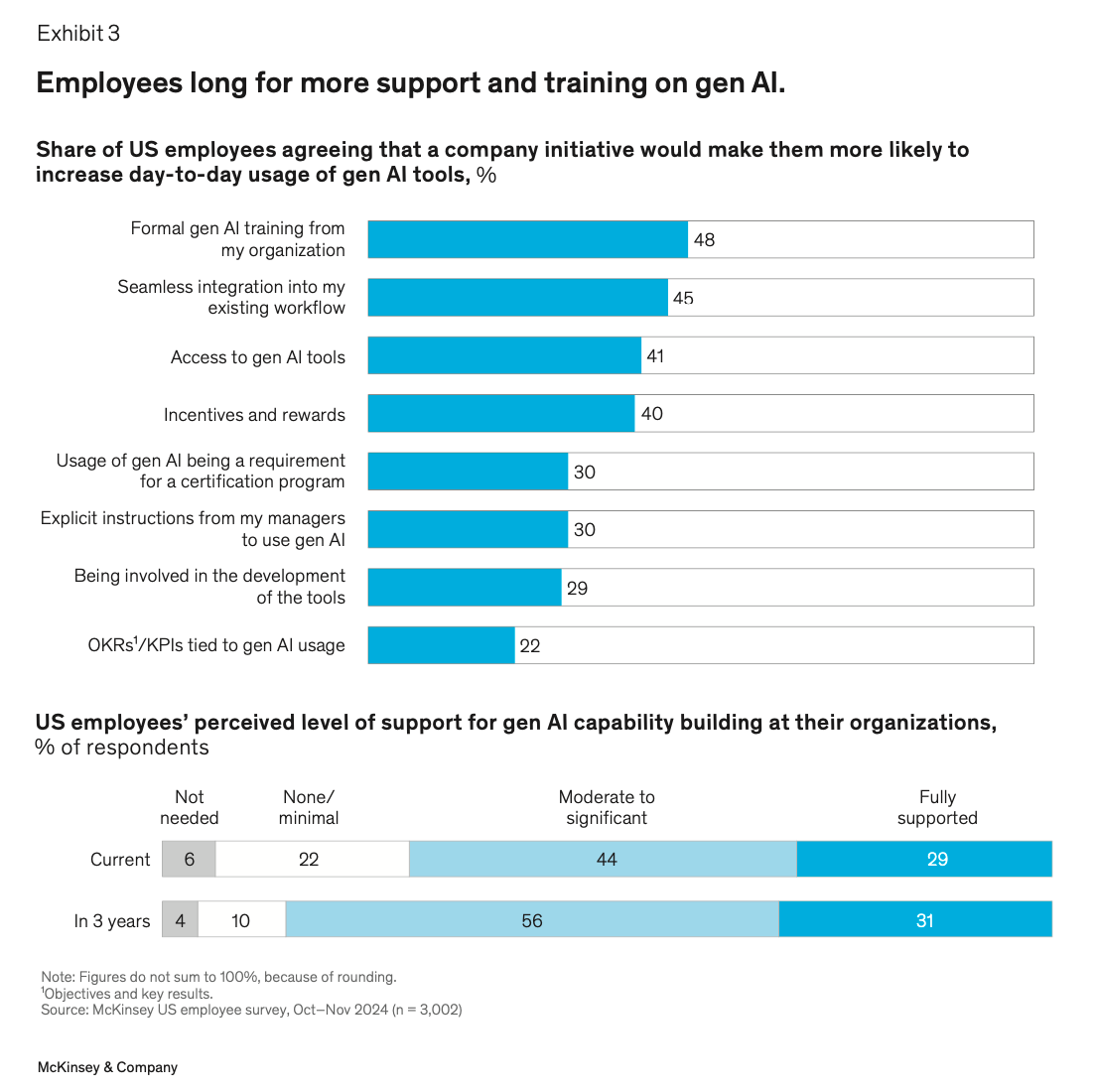

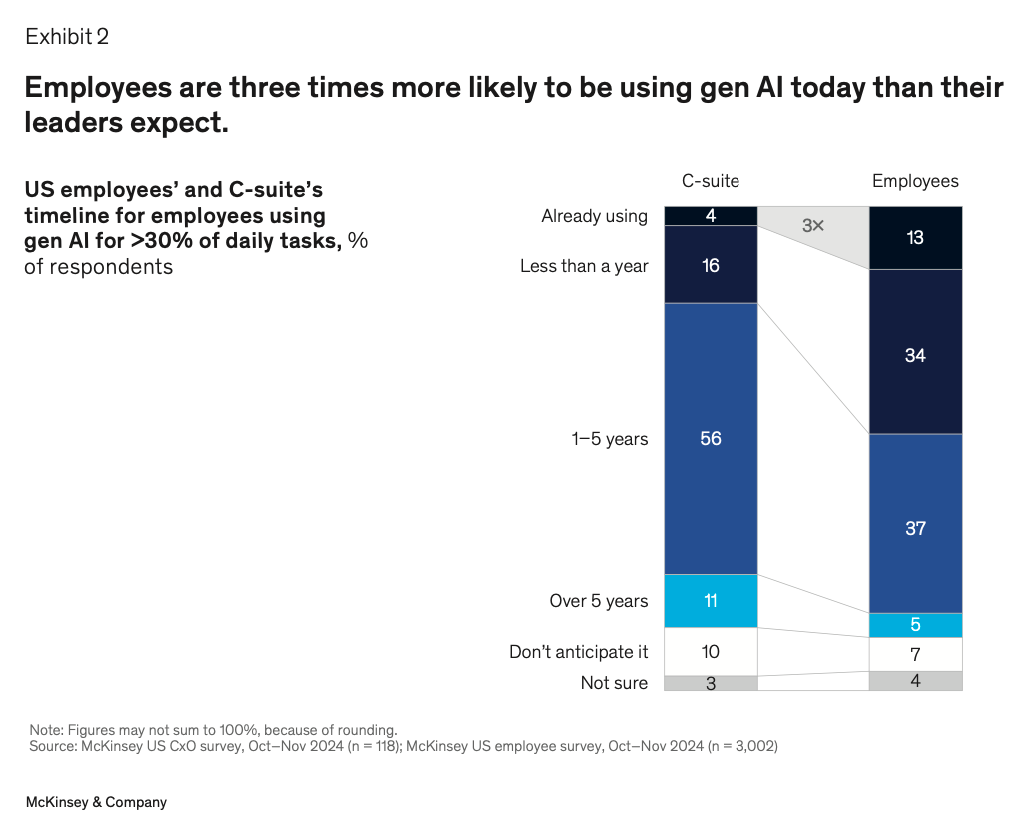

While enterprise leadership embraces AI to drive productivity and innovation, many employees still struggle with the realities of integrating AI into daily operations. The gap between leadership expectations and employee readiness remains a significant barrier, posing risks to successful AI adoption if left unaddressed.

Identifying Barriers to AI Adoption

Although 92% of enterprises plan to increase investments in AI, studies indicate that only a small fraction consider themselves truly AI-mature. Employees frequently report feeling overwhelmed, citing a lack of proper training and education to effectively utilize AI tools. This gap in readiness significantly hinders AI's potential to enhance productivity and efficiency.

Common issues contributing to the AI readiness gap include:

- Insufficient AI literacy: Employees struggle to understand how to effectively use AI tools in their daily tasks.

- Lack of structured training: Without adequate learning resources, employees are uncertain about integrating AI-driven processes into their workflows.

- Resistance to adoption: Many employees fear job displacement, which makes them hesitant to embrace automation.

Empowering Employees through AI

To bridge this gap, enterprises are adopting the concept of "superagency," which emphasizes enhancing employee capabilities through AI collaboration rather than replacement. This strategic shift involves:

- Employee empowerment: Enterprises deploy AI to automate routine tasks, allowing workers to concentrate on more strategic, creative, and value-added activities.

- Hands-on AI training: Businesses that provide structured, practical AI training see faster adoption and higher employee productivity.

- AI-driven workforce culture: Promoting experimentation and hands-on experience with AI tools encourages acceptance, familiarity, and trust among employees.

Enterprises adopting this strategy report higher productivity and employee satisfaction, as well as greater alignment between executive vision and employee experience.

Leadership’s Role in Successful AI Integration

Ultimately, the success of AI integration within enterprises depends significantly on leadership actions:

- Executives must clearly align AI strategy with business objectives, ensuring transparent communication about AI's benefits and limitations.

- Leaders should put structured, continuous training programs in place so employees stay competent and confident in using AI tools.

- Leaders should visibly endorse and participate in AI initiatives, demonstrating clear commitment and setting expectations across the organization.

Addressing these areas allows enterprises to unlock AI's full potential, empowering their workforce rather than displacing it.

References: