Function Calling vs. NLU: the Real Power of Chatbots

Function calling is seen as revolutionary in chatbots, enhancing real-time capabilities. However, older NLU + Core bots handled many use cases effectively. This article explores the evolution of chatbots, weighing the hype of function calling against the proven efficiency of traditional methods.

1. Chatbot Evolution: Is Function Calling the Real Game-Changer?

The world of chatbots has seen rapid evolution over the past few years, driven by advancements in artificial intelligence (AI). Recent trends have spotlighted function calling as a groundbreaking feature that enhances chatbots' capabilities in areas like customer service and information retrieval. However, there is ongoing debate about whether function calling is truly transformative or simply an overhyped development in chatbot technology.

Historically, chatbots have handled a wide variety of use cases through simpler approaches. The NLU (Natural Language Understanding) + Core methodology was effective in simulating human conversations and addressing many business needs. But as function calling is celebrated as a significant leap forward, one must ask: Is this shift warranted, or were earlier chatbots equally capable?

To answer this, it's essential to revisit the chatbots developed around 2017 and earlier. These bots relied on NLU to understand user input and used a dialogue core for managing conversational flow. Despite technological constraints, they efficiently addressed users’ queries. This article will explore the evolution of chatbot technologies, analyzing both the hype surrounding function calling and the capabilities of NLU + Core-based chatbots in meeting early use cases.

The chatbot market has also grown significantly. According to AI Market Research, the global chatbot market is expanding quickly, with diverse applications ranging from customer service to lead generation. Major players have adopted new AI models to enhance chatbot functionality, capitalizing on advancements in language processing and understanding. The need for improved user interaction, 24/7 support, and the automation of routine processes are among the key drivers behind this expansion.

2. The Evolution of Chatbots & Function Calling

Early Chatbots and the Rise of NLU + Core Systems

The early development of chatbots can be traced back to simple rule-based systems like ELIZA (1966) and ALICE (1995), which used pattern matching techniques to simulate dialogue but had limited capabilities in understanding user intent. These chatbots laid the foundation for more sophisticated approaches by employing template-based responses. However, their inability to retain context or offer personalized interactions highlighted the need for deeper natural language processing.

By the 2000s, the focus shifted towards Natural Language Understanding (NLU) and machine learning to handle dialogue more effectively. Chatbots evolved to use NLU for intent recognition and paired it with a dialogue core for response generation, leading to the NLU + Core approach. This combination allowed for more meaningful interactions, as the systems could understand context, user intent, and handle multi-turn dialogues. Chatbots using this architecture were able to effectively perform tasks like appointment scheduling, FAQs, and basic customer service inquiries.

Within the NLU framework, core language-model properties such as sentence representation and context awareness gained prominence. As research in this field grew rapidly, Large Language Models emerged that come with all of these properties and can also follow instructions, which finally bridged the gap between simple command-response bots and those capable of nuanced interactions.

Function Calling Enters the Scene

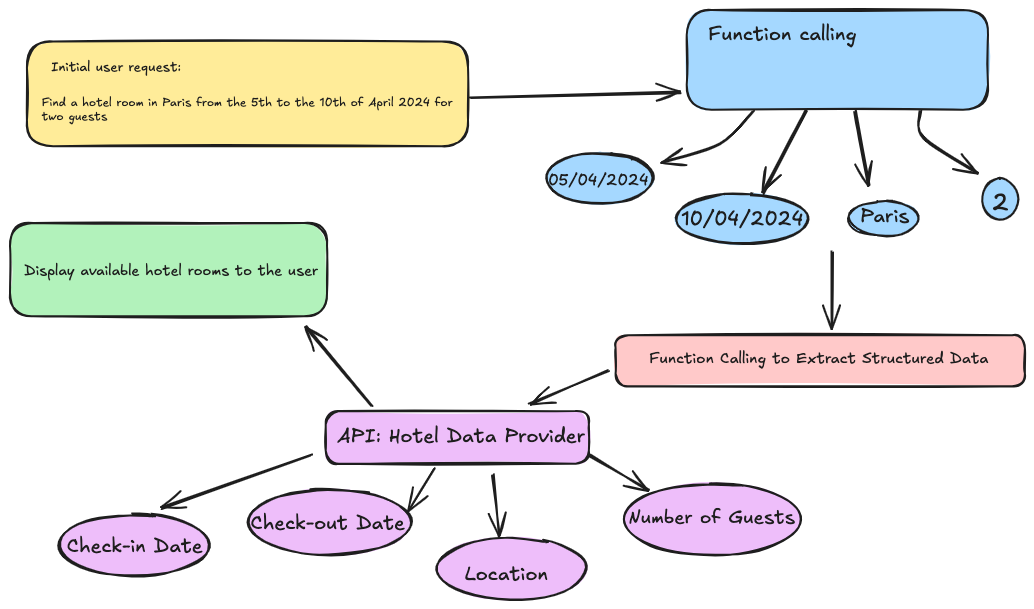

The introduction of function calling in AI represented an attempt to extend the capabilities of chatbots beyond basic dialogue. Function calling allows bots to execute specific tasks by invoking predefined functions, such as accessing APIs to book a flight or retrieve user-specific data. Unlike the NLU + Core model, which primarily focused on understanding and responding to user intent based on a pre-structured dialogue flow, function calling provides the ability to dynamically access external information and services.

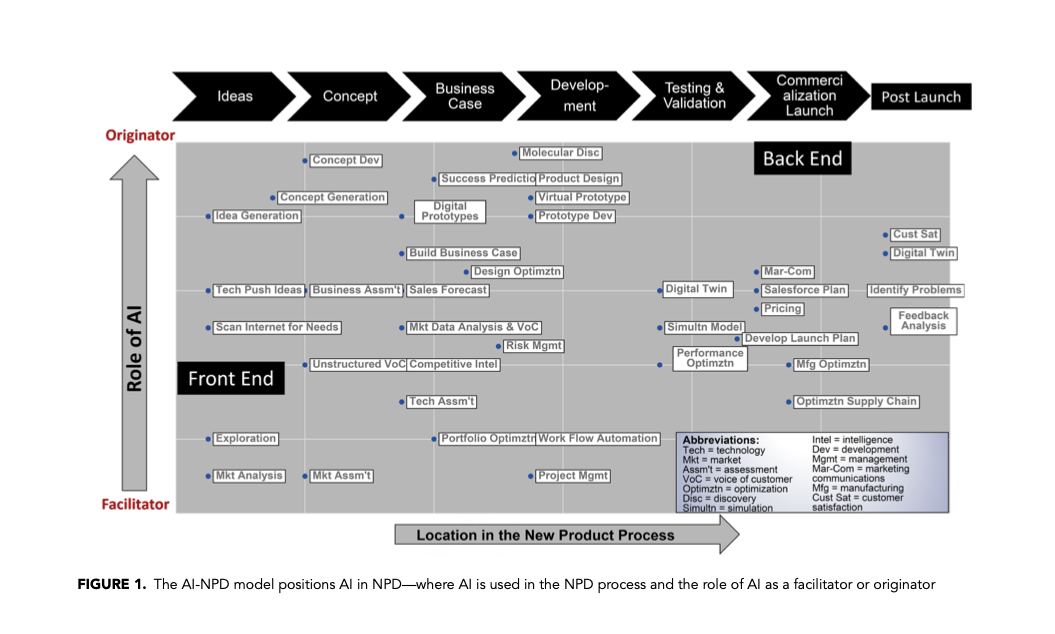

The rise of function calling is largely due to the growing need for AI chatbots to handle complex, transactional interactions that extend beyond simple conversation. For example, integrating APIs to fetch live data or automating multi-step workflows became increasingly important for modern chatbots. AI and New Product Development reveals how companies began leveraging AI models to perform tasks like analyzing customer feedback, monitoring trends, and automating decisions, making function calling an appealing feature for expanding the role of chatbots in business processes.

However, it’s essential to assess whether this shift is truly a leap forward in capability. While function calling allows chatbots to be more dynamic and multi-functional, it does not necessarily replace the core principles of understanding and context management that NLU + Core approaches have addressed well for many years. The transformation has been more about expanding the scope of what chatbots can achieve rather than fundamentally changing their operational principles.

Market Trends and Industry Adoption

The market trends outlined in the AI Market Research report suggest that both the demand for multi-functional bots and advancements in AI technologies have pushed the rise of function-calling capabilities. Businesses are seeking to improve customer interactions and automate routine processes, which aligns with the strengths of function calling in facilitating seamless, data-driven interactions.

However, the Trends in Natural Language Understanding document also underscores the continued importance of refining NLU capabilities, particularly as chatbots evolve to handle more diverse and complex conversations. Even as function calling has been introduced, the ability to comprehend and contextualize natural language remains a core component of chatbot technology.

3. The Benefits and Limits of NLU + Core Approach

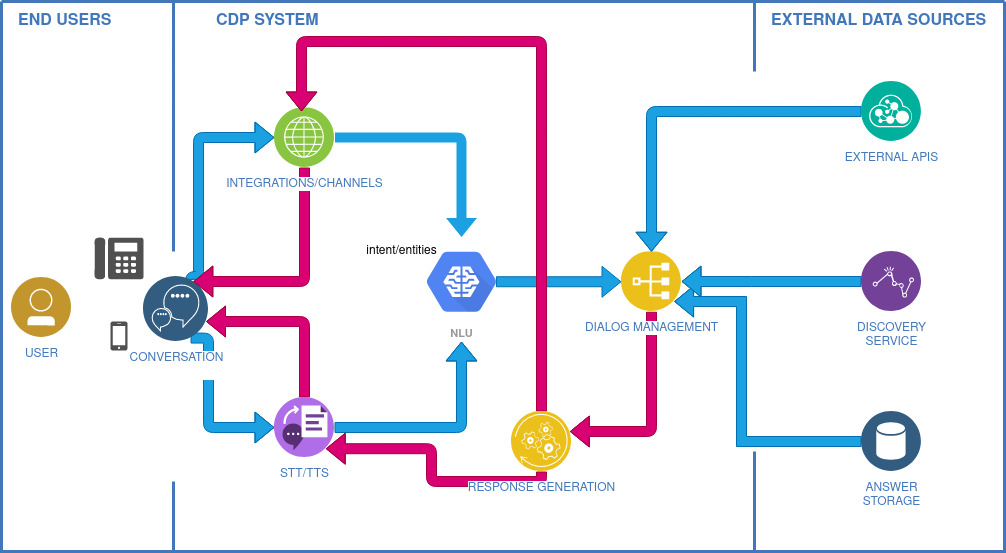

The NLU + Core approach forms the backbone of many traditional chatbots, particularly those built before the rise of function-calling capabilities. These systems rely on Natural Language Understanding (NLU) to decode user input and map it to predefined intents, while a Dialogue Core manages the conversational flow. Understanding how these two components work together is essential to evaluating the approach's strengths and shortcomings.

Benefits of NLU + Core

- Robust Intent Recognition & Context Management

- NLU models are designed to take user input (e.g., "What’s the weather like tomorrow?") and break it down into two main components:

- Intent Recognition: The NLU model maps the input to a corresponding "intent," which is essentially the purpose of the user's message (e.g., "CheckWeather").

- Entity Extraction: It identifies and extracts key pieces of information or "entities" within the input (e.g., "tomorrow" as the time frame).

With this structure, chatbots are capable of understanding a wide range of user utterances while mapping them to specific actions. The dialogue core then uses this information to trigger the appropriate response, giving the chatbot a clear and efficient way to handle structured dialogues and respond accurately.
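
To make the two NLU steps concrete, here is a minimal sketch of intent recognition and entity extraction. It is a toy under stated assumptions: the keyword table, the `Fallback` intent, and the `parse` helper are hypothetical names invented for illustration, and keyword overlap stands in for the trained classifier a real NLU model would use.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword lists; a real NLU model would learn these from labeled examples.
INTENT_KEYWORDS = {
    "CheckWeather": {"weather", "forecast", "rain", "temperature"},
    "BookAppointment": {"book", "appointment", "schedule"},
}

# Very small entity pattern covering only a few relative time expressions.
TIME_PATTERN = re.compile(r"\b(today|tomorrow|tonight)\b", re.IGNORECASE)


@dataclass
class NLUResult:
    intent: str
    entities: dict = field(default_factory=dict)


def parse(utterance: str) -> NLUResult:
    """Map an utterance to one intent and extract a time entity if present."""
    tokens = set(re.findall(r"[a-z']+", utterance.lower()))
    # Score each intent by keyword overlap and keep the best match.
    scores = {name: len(tokens & words) for name, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    intent = best if scores[best] > 0 else "Fallback"

    entities = {}
    match = TIME_PATTERN.search(utterance)
    if match:
        entities["time"] = match.group(1).lower()
    return NLUResult(intent=intent, entities=entities)


result = parse("What's the weather like tomorrow?")
print(result.intent, result.entities)
```

The dialogue core would receive the resulting intent and entities and decide which response to trigger.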

- Handling of Specific Use Cases Efficiently

- The NLU + Core approach is particularly effective in managing task-oriented interactions with clearly defined workflows, such as booking appointments, answering FAQs, or handling customer inquiries.

- The dialogue core allows for contextual understanding, meaning the chatbot can keep track of the conversation's state across multiple turns. For example, if a user says, "I'd like to book a flight to New York," and then follows up with "What’s the earliest available?", the NLU + Core chatbot will remember the user's previous request and respond based on the context.

- Scalability in Simple, Single-Domain Dialogues

- NLU + Core systems perform well in domains where the range of topics is limited and the required responses are predictable. The simplicity of handling structured, repetitive workflows allows these bots to provide consistent and reliable interactions, without the need for complex external integrations.

- The dialogue core's ability to manage multiple variations of user input means that it can respond to users naturally, giving an impression of a smooth conversational experience.

- Customizable and Low-Code Frameworks

- Frameworks like Rasa, Dialogflow, and Botpress provide tools for developers to customize intents, entities, and dialogues without extensive programming. These platforms enable the creation of rule-based dialogues that can be quickly tailored to specific business needs.

- Since pre-built modules are often provided within these frameworks, developers can accelerate the development of use-case-specific chatbots without the need to build every component from scratch.
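
The multi-turn flight example above can be sketched as a small state tracker. This is only an illustration of the idea, not how Rasa, Dialogflow, or Botpress implement it: `DialogueState`, `handle_turn`, and the intent names `BookFlight` and `AskEarliest` are assumptions made up for this sketch.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DialogueState:
    """Conversation memory kept by the dialogue core between turns."""
    active_intent: Optional[str] = None
    slots: dict = field(default_factory=dict)


def handle_turn(state: DialogueState, intent: str, entities: dict) -> str:
    """Update the state from one NLU result and choose a templated reply."""
    if intent == "BookFlight":
        state.active_intent = intent
        state.slots.update(entities)
        destination = state.slots.get("destination", "your destination")
        return f"Sure, let's find a flight to {destination}."
    if intent == "AskEarliest" and state.active_intent == "BookFlight":
        # The follow-up names no destination; it is resolved from stored state.
        destination = state.slots.get("destination", "your destination")
        return f"Checking the earliest available flight to {destination}."
    return "Sorry, I didn't catch that. Could you rephrase?"


state = DialogueState()
print(handle_turn(state, "BookFlight", {"destination": "New York"}))
print(handle_turn(state, "AskEarliest", {}))
```

The second turn mentions no city, yet the reply still refers to New York because the destination slot was stored during the first turn.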

Limitations of NLU + Core

- Inability to Handle Real-Time Data and External Services

- The traditional NLU + Core approach is designed to manage pre-defined conversation flows. However, it struggles to handle real-time data retrieval or dynamic tasks that require calling external services (e.g., accessing live stock prices, performing real-time bookings).

- While it is possible to integrate APIs into NLU + Core systems, this typically involves manually pre-configuring integrations, which is time-consuming and limits the chatbot’s flexibility.

- Complexity in Managing Multi-Turn and Multi-Intent Conversations

- When conversations span many turns (requiring multiple exchanges) or involve multi-intent queries (where the user makes several requests in a single sentence), NLU + Core chatbots may struggle to handle them efficiently.

- For example, a query like "Can you tell me my order status and also book a table for tonight?" may confuse an NLU + Core bot if it’s not explicitly programmed to recognize combined intents. Unlike function calling, which can dynamically adapt to such requests, NLU + Core requires each possible conversation path to be explicitly mapped out in advance.

- High Maintenance and Rule Management Overhead

- As chatbots' capabilities expand, maintaining the NLU model and dialogue core can become increasingly challenging. Developers must manually define and maintain intents, entities, dialogue flows, and rules for every possible user interaction.

- Each time a new feature or use case is added, the NLU model often needs retraining, and the dialogue core needs updating to ensure proper handling of new paths. This high maintenance overhead limits the scalability of NLU + Core systems in rapidly changing environments.

- Limited Flexibility for Unpredictable or Open-Ended Conversations

- NLU + Core bots are generally not designed for open-ended dialogues where users might ask questions or make requests outside of the bot's predefined domain. This lack of adaptability means that if a user deviates from the expected conversation paths, the bot may fail to provide an appropriate response.

- In contrast, chatbots with function-calling capabilities can more dynamically adjust to a variety of user inputs by invoking different functions to retrieve data, process actions, or even escalate complex queries.
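
The multi-intent and out-of-domain limitations can be illustrated with a toy single-label classifier. The intent table and both helper functions below are hypothetical; the point is only that a classifier which returns exactly one intent silently drops the second request, and that anything outside the table falls through to a fallback.

```python
import re
from typing import List

# Hypothetical intent -> keyword table; every new capability needs a new entry.
INTENT_KEYWORDS = {
    "OrderStatus": {"order", "status", "delivery"},
    "BookTable": {"book", "table", "reservation"},
}


def tokenize(utterance: str) -> set:
    return set(re.findall(r"[a-z]+", utterance.lower()))


def classify_single(utterance: str) -> str:
    """Return exactly one intent, as a single-label classifier would."""
    tokens = tokenize(utterance)
    scores = {name: len(tokens & words) for name, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "Fallback"


def matching_intents(utterance: str) -> List[str]:
    """List every intent the utterance touches, to show what a single label drops."""
    tokens = tokenize(utterance)
    return [name for name, words in INTENT_KEYWORDS.items() if tokens & words]


query = "Can you tell me my order status and also book a table for tonight?"
print(classify_single(query))    # only one of the two requests is kept
print(matching_intents(query))   # both intents are actually present
print(classify_single("What is the capital of France?"))  # outside the domain
```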

4. Function Calling – Hype vs. Reality

4.1 What Does Function Calling Add?

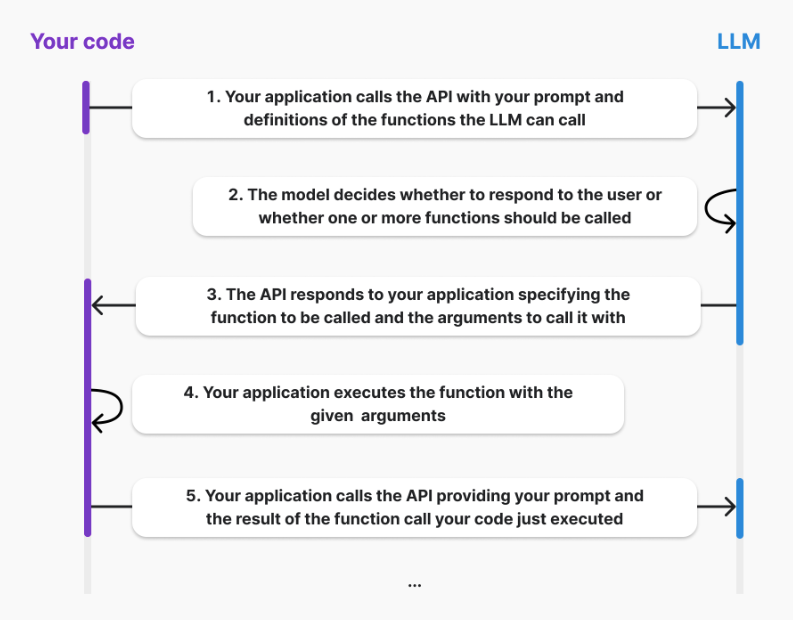

The concept of function calling has emerged with the rise of Large Language Models in general and ChatGPT from OpenAI in particular. Unlike traditional NLU + Core approaches, where chatbots rely solely on predefined intents and entities to respond, function-calling models allow for dynamic task execution. Specifically, they enable bots to call pre-defined functions in real time based on user queries, such as retrieving live data from APIs, making bookings, or accessing databases.

For instance, when a user asks, "Can you tell me the weather for tomorrow?" a function-calling chatbot can dynamically invoke a weather API, retrieve live data, and provide an accurate, contextually relevant response. This level of contextual adaptation and task fulfillment was difficult to achieve in traditional NLU + Core systems, which often required extensive pre-scripting for specific responses and integrations.
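
The weather scenario can be sketched as follows. This is a simplified illustration, not any vendor's API: the model's output is hand-written rather than produced by an LLM, `get_weather` returns canned data instead of calling a real service, the location "Berlin" is made up, and the message shape with a `tool` role is an assumption modeled loosely on common chat-completion formats.

```python
import json


def get_weather(location: str, day: str) -> dict:
    """Stand-in for a live weather API; returns canned data so the sketch runs offline."""
    return {"location": location, "day": day, "forecast": "sunny"}


# Registry of functions the model is allowed to request, keyed by name.
TOOLS = {"get_weather": get_weather}


def execute_tool_call(tool_call: dict) -> dict:
    """Run one requested call and wrap the result as a message for the model."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    arguments = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    result = TOOLS[name](**arguments)
    return {"role": "tool", "name": name, "content": json.dumps(result)}


# Hand-written stand-in for what a model might emit for the user's question
# (no model is called here).
simulated_call = {
    "name": "get_weather",
    "arguments": '{"location": "Berlin", "day": "tomorrow"}',
}

conversation = [{"role": "user", "content": "Can you tell me the weather for tomorrow?"}]
conversation.append(execute_tool_call(simulated_call))
print(conversation[-1]["content"])
```

In a full loop, the extended conversation would be sent back to the model so it can phrase the final answer from the tool result.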

4.2 Advantages of Function Calling

Function calling introduces several enhancements to chatbot performance and user experience:

- Dynamic Task Execution & Real-Time Data Access

- Function calling enables chatbots to perform on-demand operations that require accessing external services, providing live data, or integrating with third-party APIs. Instead of static responses, the bot can process data and respond in real time, such as retrieving order status, making reservations, or pulling user account information.

- Adaptive Conversations & Personalized User Experience

- The ability to call functions dynamically means chatbots are better equipped to handle open-ended or evolving conversations. They can seamlessly switch between tasks and adjust responses based on user context or preferences, improving the flow and naturalness of conversations.

- As demonstrated in the Medium case study, a function-calling chatbot can handle multi-turn dialogues efficiently, adapting its responses based on the user's needs without predefined flows. This allows for a more personalized user experience, improving overall interaction quality.

- Reduced Scripting & Maintenance Overhead

- Traditional NLU + Core systems required extensive scripting of all possible dialogue flows and manual updates whenever a new intent or use case was added. With function calling, developers define functions that handle specific tasks, reducing the need for manual scripting of conversation paths.

- Function-calling models can handle unexpected user queries by invoking functions that process data or retrieve information in real time, offering an easier way to scale the chatbot's capabilities without extensive retraining or rule management.

4.3 Using Function Calling with Your Preferred LLM in a Few Lines of Code with the PremAI SDK

Getting started with function calling using PremAI is straightforward. All you need is a valid PremAI account and a few lines of code. Let's say you want to perform arithmetic on very large numbers using natural language. Without function calling, LLMs tend to get such calculations wrong. Here is an example:

import os

from premai import Prem

# Read the API key from the environment rather than hard-coding it.
premai_api_key = os.environ.get("PREMAI_API_KEY")
project_id = 1234  # placeholder; use your own project ID

client = Prem(api_key=premai_api_key)

messages = [{
    "role": "user",
    "content": "What is 9297838399297827278289292 divided by 26181927361 plus 16238137181"
}]

model_name = "gpt-4o"

response = client.chat.completions.create(
    model=model_name,
    messages=messages,
    project_id=project_id
)

If you run the code above, the LLM answers 371410150275.0, whereas the correct answer is 355140529450725.56. Now let's try the same query using function calling:

# Define the arithmetic functions you want your LLM to call here.
# For demo purposes, let's keep it simple (only addition and division).
def addition(a, b):
    return a + b

def division(a, b):
    return a / b

# Now define the set of available tools in the form of JSON.
# "number" is used instead of "integer" so that the non-integer
# result of a division is also a valid argument.
tools = [
    {
        "type": "function",
        "function": {
            "name": "addition",
            "description": "Adds two numbers",
            "parameters": {
                "type": "object",
                "properties": {
                    "a": {"type": "number", "description": "The first number"},
                    "b": {"type": "number", "description": "The second number"}
                },
                "required": ["a", "b"]
            }
        }
    },
    {
        "type": "function",
        "function": {
            "name": "division",
            "description": "Divides two numbers",
            "parameters": {
                "type": "object",
                "properties": {
                    "a": {"type": "number", "description": "The first number"},
                    "b": {"type": "number", "description": "The second number"}
                },
                "required": ["a", "b"]
            }
        }
    },
]

# Now call the LLM, passing the tool definitions along with the messages.
messages = [{
    "role": "user",
    "content": "What is 9297838399297827278289292 divided by 26181927361 plus 16238137181"
}]

response = client.chat.completions.create(
    project_id=project_id,
    model=model_name,
    messages=messages,
    tools=tools
)

print(response.to_dict())

This time the model can delegate the arithmetic to the defined functions instead of estimating it, so the final result matches the ground truth. Hence, function calling makes an LLM's behavior more reliable for factual questions.
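
The request above only declares the tools; the application still has to run the functions the model asks for. The following sketch shows that execution step in isolation, under the assumption that each requested call arrives as a function name plus a JSON string of arguments. The two calls are hand-written stand-ins for model output, and `run_tool_call` is a hypothetical helper, not part of the PremAI SDK.

```python
import json


def addition(a, b):
    return a + b


def division(a, b):
    return a / b


# Map the tool names declared in the schema to the actual Python functions.
AVAILABLE = {"addition": addition, "division": division}


def run_tool_call(name: str, arguments_json: str):
    """Look up a requested tool by name and call it with JSON-decoded arguments."""
    args = json.loads(arguments_json)
    return AVAILABLE[name](**args)


# Round 1: the model asks for the division (hand-written stand-in for a model response).
quotient = run_tool_call(
    "division", '{"a": 9297838399297827278289292, "b": 26181927361}'
)

# Round 2: after seeing the quotient, the model asks for the addition.
total = run_tool_call("addition", json.dumps({"a": quotient, "b": 16238137181}))
print(total)
```

Because the arithmetic is done by Python rather than by the model, the printed value is on the order of 3.55e14, in line with the ground truth quoted above.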

4.4 Limitations and Challenges of Function Calling

- Dependency on External Systems and Vulnerability to Latency

- A key limitation of function calling is its reliance on external APIs and services, which can introduce potential points of failure. Any delay, error, or downtime from these external services can negatively impact the chatbot’s performance, leading to slow response times or failed task execution.

- Additionally, integrating multiple external APIs increases the complexity of development and testing. Ensuring that each function call is correctly implemented, handles errors gracefully, and meets the expected security standards can become a significant challenge.

- Privacy, Security, and Data Protection Concerns

- Function-calling chatbots often handle sensitive user data, such as financial transactions, personal information, and health records, necessitating robust security protocols. The Concurrency and Computation paper outlines several concerns:

- Authentication & Authorization: Ensuring that the user is verified when requesting sensitive operations is critical. Token-based authentication and end-to-end encryption (E2EE) must be integrated to secure data exchanges.

- Data Storage & GDPR Compliance: Function-calling chatbots must adhere to data protection regulations, such as GDPR, which requires data pseudonymization, encryption, and secure storage. Failure to comply with such standards can lead to breaches of privacy and legal issues.

- Complexity in Development and Maintenance

- Introducing function-calling capabilities increases the technical complexity of chatbot development. Developers need to design, implement, and maintain robust functions for all required actions, ensuring they work seamlessly with the dialogue flow.

- The complexity extends to error handling and debugging, as developers must anticipate and handle potential failures in external API calls. This requires additional testing and monitoring, making it costlier and more resource-intensive than managing a static NLU + Core chatbot.
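
One common way to soften the dependency on external services is to retry failed calls and fall back to a safe message. The sketch below is a minimal illustration of that idea: `call_with_retry` and `FlakyService` are hypothetical names, the outage is simulated, and a production version would typically also enforce timeouts and catch narrower exception types.

```python
import time
from typing import Any, Callable


def call_with_retry(fn: Callable[[], Any], retries: int = 3,
                    base_delay: float = 0.0, fallback: Any = None) -> Any:
    """Call fn, retrying on exceptions with exponential backoff.

    Returns the fallback value if every attempt fails.
    """
    for attempt in range(retries):
        try:
            return fn()
        except Exception:  # broad on purpose for the demo
            if attempt < retries - 1:
                time.sleep(base_delay * (2 ** attempt))
    return fallback


class FlakyService:
    """Simulated external API that fails a fixed number of times before succeeding."""

    def __init__(self, failures_before_success: int):
        self.remaining_failures = failures_before_success
        self.calls = 0

    def get_order_status(self) -> str:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("simulated outage")
        return "shipped"


recovering = FlakyService(failures_before_success=2)
print(call_with_retry(recovering.get_order_status, retries=3))

down = FlakyService(failures_before_success=10)
print(call_with_retry(down.get_order_status, retries=3,
                      fallback="Service unavailable, please try again later."))
```

The first service recovers on the third attempt, while the second exhausts its retries and the bot replies with the fallback text instead of failing outright.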

4.5 Evaluating the Hype: Does Function Calling Live Up to Its Promise?

- Comparison to NLU + Core Approach in Real-World Scenarios

- While function calling enables chatbots to dynamically handle multi-tasking and real-time data access, it’s essential to recognize that many chatbot use cases are still well-served by NLU + Core-based systems. These systems efficiently handle task-oriented, predictable dialogues where predefined paths are more practical than real-time function executions.

- The ACM Digital Library article provides insights indicating that while function calling offers advantages for complex scenarios, the NLU + Core approach is optimal for simpler, single-domain dialogues, suggesting that function calling’s utility is context-dependent.

- Balancing Practicality and Innovation

- Businesses should assess the specific needs of their chatbot applications when considering function calling. If real-time data retrieval, complex workflows, and dynamic task switching are not core requirements, a well-designed NLU + Core model might be more efficient and easier to maintain.

- For applications where adaptability and real-time functionality are critical, the benefits of function calling can justify the added complexity and development costs. As such, understanding the scope and goals of the chatbot is crucial to choosing the right approach.

- Industry Perspectives on the Rise of Function Calling

- The growing demand for more responsive and adaptable AI solutions in various industries has driven the rise of function calling. However, it remains important for businesses to balance hype with practicality, ensuring that the chosen technology aligns with the specific problems they aim to solve.

5. Balancing Innovation and Practicality: Future Trends and Strategic Adoption

5.1 Balancing Function Calling with Practical Needs

The decision to implement function-calling capabilities in chatbots hinges on evaluating the balance between innovation and practicality. While function-calling chatbots offer flexible and dynamic task execution, NLU + Core models still present a simpler yet effective approach for many use cases. Understanding the technical and practical nuances of both approaches is critical.

- Technical Breakdown of NLU vs. Function Calling

- NLU Systems and Core Components: Natural Language Understanding (NLU) involves several core components, such as entity extraction, intent classification, and contextual understanding. These components allow chatbots to map user input to specific intents and extract key entities (e.g., dates, names) to provide an appropriate response. The strength of an NLU-based system lies in its ability to maintain single-domain, task-oriented dialogues without requiring complex external data interactions.

- In contrast, function-calling chatbots are designed to execute pre-defined functions that dynamically pull data from external APIs or systems. This enhances the bot’s ability to perform complex tasks in real time, such as fetching live stock prices, booking appointments, or accessing user-specific information. While this flexibility is an advantage, the technical implementation requires robust function definitions, API integration, and error-handling protocols to ensure seamless performance.

- Cost vs. Benefit and Resource Allocation

- From a resource perspective, NLU + Core models are typically more cost-effective in terms of development and maintenance. They require fewer resources since their dialogue flows are predetermined and typically limited to internal systems. This makes them ideal for single-domain use cases, such as customer FAQs or support inquiries.

- Function-calling bots, on the other hand, have a higher cost of implementation due to the need for developing API integrations, continuous testing of function performance, and security protocols to manage external data safely. For scenarios where a business requires multiple data interactions or complex, multi-turn conversations, the investment in a function-calling chatbot is justifiable. However, this approach also demands regular updates and monitoring to adapt to changes in APIs or external data sources.

- Security, Privacy, and Compliance Considerations

- With function-calling capabilities, handling sensitive user data becomes a key concern. The reliance on third-party APIs introduces potential vulnerabilities in data privacy, encryption, and compliance with regulations like GDPR. Proper authentication mechanisms, such as OAuth protocols, and end-to-end encryption (E2EE) are necessary to ensure safe interactions with external services.

- NLU-based systems have fewer privacy challenges since they mainly handle internal dialogues without external data exchange, making it easier to maintain privacy standards and compliance. However, they are limited in their ability to handle dynamic, real-time data tasks, which are becoming increasingly common in complex chatbot applications.
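
Two of the safeguards mentioned above, authorization before sensitive calls and pseudonymization of identifiers, can be sketched with the Python standard library. This is an illustration under assumptions, not a compliance recipe: the scope names, the tool names, and the policy table are all hypothetical, and a real deployment would obtain scopes from an actual OAuth token and manage the key securely.

```python
import hashlib
import hmac

# Hypothetical policy: which scope a caller must hold before each tool may run.
REQUIRED_SCOPE = {
    "get_account_balance": "account:read",
    "make_reservation": "booking:write",
}


def is_authorized(tool_name: str, granted_scopes: set) -> bool:
    """Allow a tool call only if the caller holds the scope that tool requires.

    Tools missing from the policy table are denied by default.
    """
    required = REQUIRED_SCOPE.get(tool_name)
    return required is not None and required in granted_scopes


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a user identifier with a keyed hash before logging or storing it."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


scopes = {"booking:write"}
print(is_authorized("make_reservation", scopes))
print(is_authorized("get_account_balance", scopes))
print(pseudonymize("user-42", b"demo-key-not-for-production"))
```

Checking authorization in the application, before a requested function runs, keeps that decision out of the model's hands.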

5.2 Emerging Trends in Chatbot Development

Chatbots are evolving rapidly, with emerging trends focused on enhancing user experience, personalization, and multi-modality. These trends are shaping the next generation of chatbots, and understanding them is crucial for strategically adopting new AI technologies.

- Multi-Modal and Context-Aware Chatbots

- The development of multi-modal chatbots that can process text, voice, and even visual inputs is a major trend. These models are built using advanced transformer-based architectures, which enable chatbots to maintain context across different types of inputs, leading to more holistic and accurate conversations.

- As chatbots become more context-aware, they will have improved capabilities for sentiment analysis, context retention, and personalized interactions. Function-calling models will play a pivotal role in this evolution by enabling chatbots to retrieve and process data dynamically from different sources, making them adaptable to diverse user needs.

- Personalization and Self-Improving Chatbots

- The demand for personalized user experiences is driving the rise of chatbots that can tailor conversations to individual users, considering their preferences, behavior patterns, and past interactions. The ability to call external functions is integral to this, as it allows for data retrieval and personalized recommendations, providing a more customized experience.

- Moreover, self-improving chatbots are emerging as a key trend. These bots use feedback from user interactions to improve their performance autonomously, leveraging techniques like reinforcement learning and continuous model fine-tuning. This shift towards self-improvement reduces the manual effort required to maintain and update chatbot functionalities, allowing businesses to deploy more responsive and accurate conversational agents.

- Ethics, Privacy, and User Trust

- The integration of AI into conversational systems brings to light significant ethical considerations around privacy, transparency, and user trust. As bots become more capable of accessing user data and personalizing responses, maintaining data privacy and ethical transparency is becoming increasingly important.

- Concurrency and Computation’s study emphasizes the necessity of ethical AI practices when implementing function-calling bots. These include secure data storage, consent-based data sharing, and transparent communication about how user data is being used. Ensuring adherence to ethical standards will not only protect user data but also build trust and confidence in the use of AI-powered chatbots.

By balancing innovation with practicality, businesses can leverage function-calling and NLU-based chatbots based on their specific needs, resources, and privacy considerations. Future developments in multi-modality, personalization, and self-improving systems will continue to shape how chatbots are used across industries, making it crucial to choose the right technologies that align with strategic goals and ethical practices.

References

https://www.researchgate.net/publication/377460646_AI_and_New_Product_Development

https://platform.openai.com/docs/guides/function-calling

https://onlinelibrary.wiley.com/doi/epdf/10.1002/cpe.6426