GDPR Compliant AI Chat: Requirements, Architecture & Setup 2026

Build GDPR compliant AI chatbots. Covers 7 legal requirements, 3 architecture approaches, implementation checklist, and EU AI Act overlap. Practical guide.

Italy banned ChatGPT in March 2023. The reason: GDPR violations. OpenAI wasn't transparent about data collection, lacked a legal basis for processing personal data, and had no age verification. The ban was lifted only after OpenAI scrambled to add consent mechanisms and an opt-out for training data.

Most companies building AI chatbots today are making the same mistakes, and the consequences are getting expensive. GDPR fines for chatbot-related violations now range from €35,000 for missing consent to €1.5 million for unreported breaches. The maximum penalty is €20 million or 4% of global annual revenue, whichever is higher.

Here's the uncomfortable truth: if your chatbot sends prompts to US-based APIs, stores chat transcripts without defined retention periods, or lacks explicit user consent before processing personal data, you're exposed.

This guide covers the specific GDPR requirements for AI chat, why cloud APIs create compliance gaps, and how to build a chatbot that's actually audit-ready, without drowning in legal complexity.

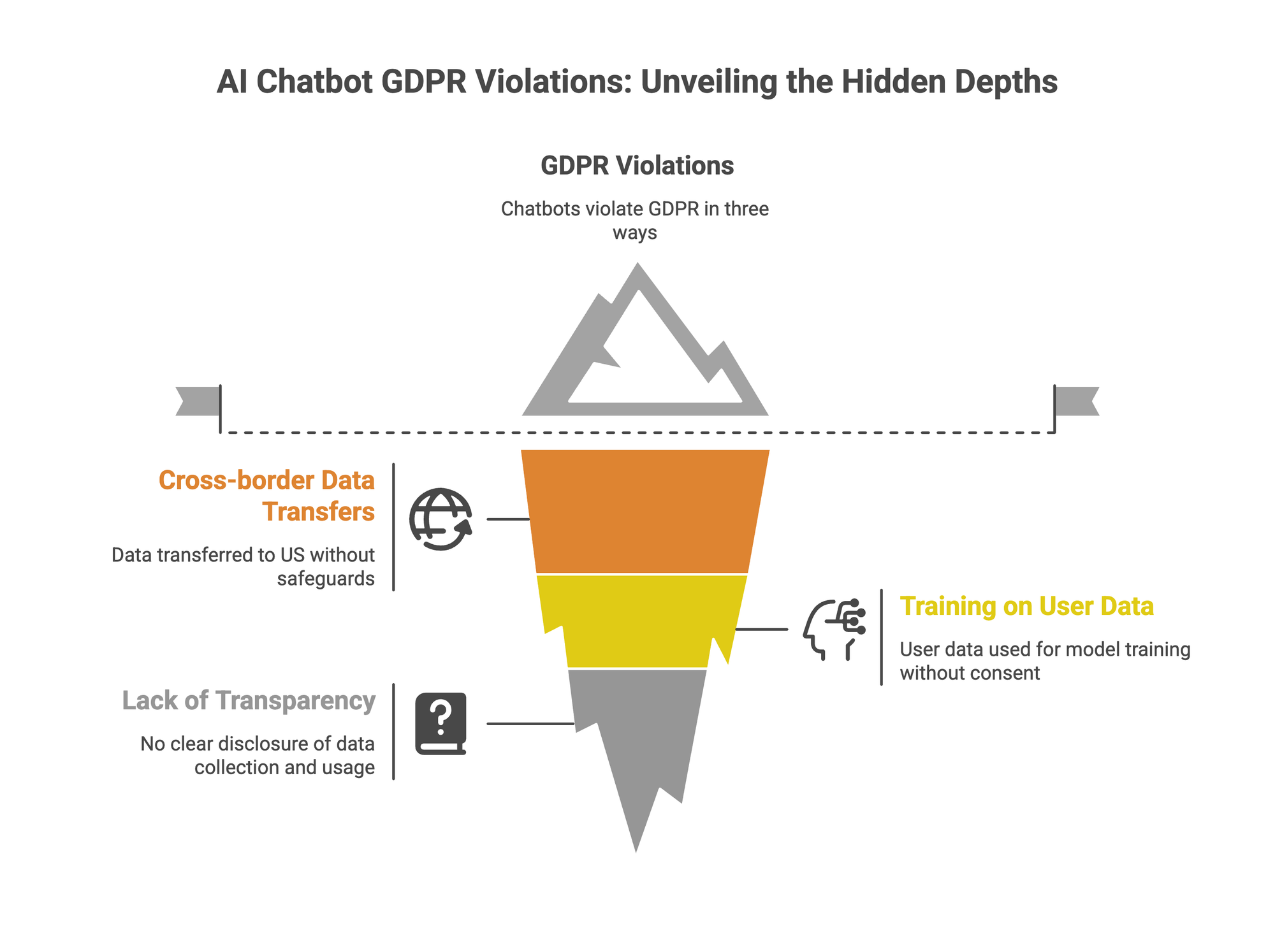

Why Most AI Chatbots Fail GDPR

The default setup for most AI chatbots violates GDPR in at least three ways. Understanding where compliance breaks helps you avoid the same traps.

Problem 1: Cross-border data transfers without safeguards

When your chatbot calls OpenAI's API, user prompts travel to servers in the US. Post-Schrems II, transferring personal data to the US requires additional safeguards beyond Standard Contractual Clauses. Most implementations don't have them.

The EU has an adequacy agreement with the US under the Data Privacy Framework, but it only covers companies that self-certify. And data protection authorities are actively challenging whether these transfers actually protect EU citizens. Your audit trail needs to prove compliance, not assume it.

Problem 2: Training on user data without consent

On consumer ChatGPT, the default is that your conversations can be used to improve OpenAI's models unless you opt out. That's data processing for a purpose users never agreed to. ChatGPT Enterprise and the API exclude customer data from training by default, but that only helps if you are actually on those tiers and have verified the settings. If a customer shares sensitive data in your chatbot and it ends up training a foundation model, you've lost control and violated purpose limitation.

Anthropic, Google, and other providers have similar policies buried in terms of service. "We don't train on API data" often has asterisks.

Problem 3: No transparency, no audit trail

GDPR requires you to clearly inform users what data you collect, why, and how long you keep it. Most chatbot implementations skip this entirely: no disclosure before chat begins, no accessible privacy policy, no retention schedule.

When data protection authorities come knocking, "we use ChatGPT" is not a compliance strategy. You need documented data flows, defined retention periods, and evidence of user consent. Cloud APIs give you none of this by default.

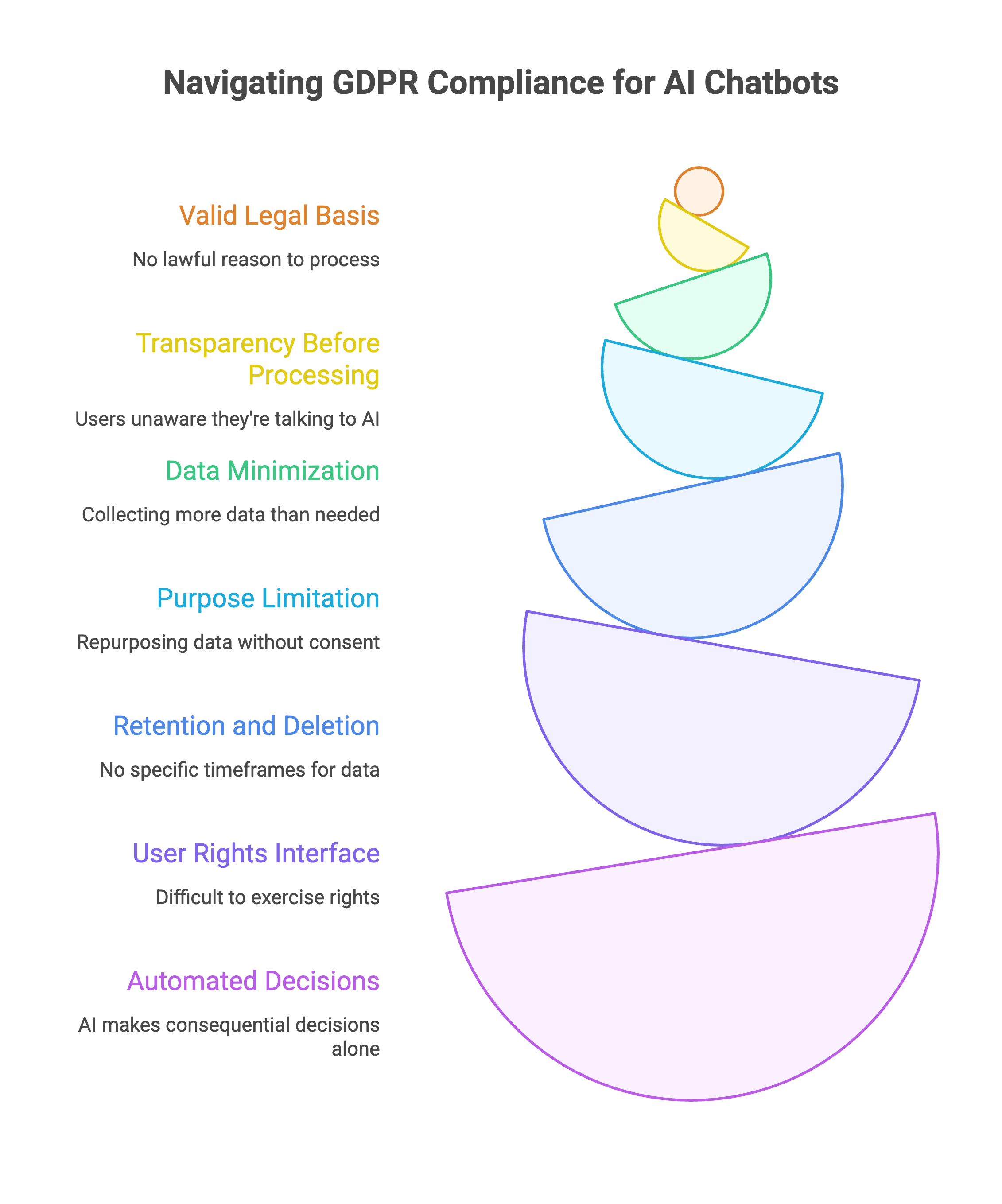

7 GDPR Requirements Every AI Chatbot Must Meet

GDPR is not a checklist. These seven requirements shape how you design, deploy, and operate your chatbot system. Miss any one of them, and you're exposed.

1. Establish a Valid Legal Basis (Article 6)

You cannot process personal data without a lawful reason. For AI chatbots, three legal bases typically apply:

Consent is the most common. But GDPR consent isn't a pre-checked box buried in terms of service. It must be:

- Freely given: Users can't be forced to accept chatbot data processing to use your service

- Specific: "We use AI to process your messages" not "we may use your data for various purposes"

- Informed: Explain what happens to their data before they type anything

- Unambiguous: Requires a clear affirmative action (ticking a checkbox that starts out unchecked, or an explicit button click)

Example implementation:

Before starting this chat, please note:

I understand this chatbot uses AI to process my messages.

My conversation may be stored for 90 days to improve support quality.

[Read full privacy policy]

[Start Chat] [Decline]
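The dialog above only helps in an audit if the acceptance is recorded and enforced. Here is a minimal sketch of a consent gate, assuming an in-memory store in place of a real database. The names `ConsentRecord`, `ConsentStore`, and `handle_message`, and the purpose labels, are hypothetical and not part of any library.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentRecord:
    """Evidence that a user accepted a specific version of the notice."""
    user_id: str
    notice_version: str  # which wording of the notice the user saw
    purposes: tuple      # e.g. ("ai_processing", "storage_90_days")
    granted_at: datetime


class ConsentStore:
    """In-memory stand-in for a real database table (assumption)."""

    def __init__(self):
        self._records = {}

    def grant(self, user_id, notice_version, purposes):
        record = ConsentRecord(user_id, notice_version, tuple(purposes),
                               datetime.now(timezone.utc))
        self._records[user_id] = record
        return record

    def withdraw(self, user_id):
        # Withdrawing should be as easy as granting: a single call here.
        self._records.pop(user_id, None)

    def has_consent(self, user_id, purpose):
        record = self._records.get(user_id)
        return record is not None and purpose in record.purposes


def handle_message(store, user_id, text):
    """Refuse to process a message until consent for AI processing exists."""
    if not store.has_consent(user_id, "ai_processing"):
        return "Please review the notice and click Start Chat before we continue."
    return f"(message forwarded to the model: {len(text)} characters)"
```

Storing the notice version alongside the timestamp lets you show which wording a user actually agreed to if the notice changes later.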

Contract performance works only if the chatbot is genuinely necessary to deliver a service the user requested. A support chatbot helping with an existing order? Arguably yes. A marketing chatbot collecting leads? No, you need consent.

Legitimate interest rarely applies to AI chatbots because the balance test usually fails. Processing someone's messages through AI systems that may retain or analyze data is hard to justify as "expected" by users.

2. Be Transparent Before Processing (Articles 13-14)

Users must know they're talking to AI. This is not optional, and it can't be buried in footnotes. GDPR requires you to provide specific information before collecting any personal data:

What you must disclose:

- Identity of the data controller (your company, not "powered by OpenAI")

- Purpose of processing (support, sales, lead generation; be specific)

- Legal basis you're relying on

- Data retention periods

- Third parties who receive the data (including AI providers)

- User rights and how to exercise them

- Right to withdraw consent

What this looks like in practice:

Bad: "By using this chat, you agree to our terms."

Good: "You're chatting with an AI assistant. Your messages are processed by [Company Name] to answer your questions. We store conversations for 90 days, then delete them. Your data stays in the EU. You can request deletion anytime by typing 'delete my data.' [Full privacy policy]"

The disclosure must be easily accessible and written in clear language, not legalese. If your users include non-native speakers, consider localized versions.
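One way to keep the disclosure list from drifting is to treat the notice as configuration and check it before the chat widget ships. This is a rough sketch; the key names and the sample company are assumptions chosen to mirror the list above.

```python
# Keys mirror the "What you must disclose" list; the names are illustrative.
REQUIRED_DISCLOSURES = (
    "controller",
    "purpose",
    "legal_basis",
    "retention",
    "recipients",
    "user_rights",
    "withdrawal",
)


def missing_disclosures(notice: dict) -> list:
    """Return the required keys that are absent or blank in the notice."""
    missing = []
    for key in REQUIRED_DISCLOSURES:
        value = notice.get(key)
        if not isinstance(value, str) or not value.strip():
            missing.append(key)
    return missing


notice = {
    "controller": "Example GmbH",  # hypothetical company name
    "purpose": "Answering support questions",
    "legal_basis": "Consent",
    "retention": "90 days, then deleted",
    "recipients": "",  # AI provider not named yet, so this gets flagged
    "user_rights": "Access, rectification, erasure, objection",
    "withdrawal": "Type 'delete my data' at any time",
}

print(missing_disclosures(notice))  # ['recipients']
```

A check like this could run in CI so that a notice missing a required element never reaches production.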

3. Collect Only What You Need (Article 5 - Data Minimization)

Every data field your chatbot captures needs justification. If you can't explain why you need it, don't collect it.

Audit your chatbot's data collection:

Progressive disclosure works better than upfront forms. Don't ask for email, name, and company before the conversation starts. Let users ask their question first. Request identifying information only when actually needed, like if they want a follow-up email.

Example flow:

- User asks question → chatbot answers (no personal data needed)

- User wants order status → chatbot asks for order number only

- User wants email confirmation → now request email address

This approach respects data minimization and improves user experience. Win-win.
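The example flow can be expressed as a lookup from intent to the fields that intent actually needs. This is a sketch under assumptions: the intent names and field names are hypothetical, and a real bot would get the intent from its own classifier.

```python
# Hypothetical intents, each mapped to the only fields it needs.
FIELDS_BY_INTENT = {
    "general_question": [],
    "order_status": ["order_number"],
    "email_confirmation": ["email"],
}


def fields_to_request(intent: str, already_collected: set) -> list:
    """Return the fields still needed for this intent, and nothing more.

    Unknown intents request no personal data at all.
    """
    needed = FIELDS_BY_INTENT.get(intent, [])
    return [field for field in needed if field not in already_collected]
```

Defaulting unknown intents to an empty list means a new feature cannot start collecting data until someone adds it to the map and justifies each field.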

4. Stick to Your Stated Purpose (Article 5 - Purpose Limitation)

Data collected for one purpose cannot be repurposed without new consent. This is where most ChatGPT integrations fail catastrophically.

Scenario: User asks your support chatbot about a product defect. Their message contains personal details about their use case.

- Allowed: Using that data to resolve their support ticket

- Not allowed: Feeding it to marketing for targeted campaigns

- Not allowed: Training your AI model on their conversation (without separate consent)

- Not allowed: Sharing with "partners" for "service improvement"

The training data trap: When you use OpenAI or Anthropic APIs, check whether user prompts contribute to model training. Even if you're compliant, your chatbot provider might not be. This is why self-hosted solutions or providers with zero data retention policies matter for regulated industries.

Document your purposes explicitly:

- Primary: Answer user questions

- Secondary: Generate aggregate analytics (anonymized)

- Not permitted: Model training, marketing, third-party sharing
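Documented purposes are easier to enforce when code has to name a purpose before touching conversation data. A minimal sketch, assuming the purpose list above; `Purpose`, `PERMITTED`, and `use_conversation` are illustrative names.

```python
from enum import Enum


class Purpose(Enum):
    ANSWER_QUESTION = "answer_question"
    AGGREGATE_ANALYTICS = "aggregate_analytics"
    MODEL_TRAINING = "model_training"
    MARKETING = "marketing"
    THIRD_PARTY_SHARING = "third_party_sharing"


# Only the documented primary and secondary purposes are allowed.
PERMITTED = {Purpose.ANSWER_QUESTION, Purpose.AGGREGATE_ANALYTICS}


class PurposeViolation(Exception):
    """Raised when code asks for data under a purpose that is not documented."""


def use_conversation(conversation_id: str, purpose: Purpose) -> str:
    """Release a conversation for processing only under a permitted purpose."""
    if purpose not in PERMITTED:
        raise PurposeViolation(
            f"{purpose.value} is not a documented purpose for chat data")
    return f"{conversation_id} released for {purpose.value}"
```

Calling `use_conversation("c-1", Purpose.MARKETING)` raises `PurposeViolation`, which turns a policy document into something a code review or test suite can catch.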

5. Define Retention and Deletion Schedules (Article 5 - Storage Limitation)

"We keep data as long as necessary" is not a retention policy. GDPR requires specific timeframes tied to specific purposes.

Build a retention schedule:

Implement automated deletion. Don't rely on manual processes. Your chatbot platform should auto-purge data when retention periods expire. If it doesn't support this, you have a compliance gap.
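As a rough illustration of automated deletion, the sketch below splits records into kept and deleted based on a per-type retention period. The 90-day transcript figure mirrors the example notice earlier in this guide; the analytics figure, the record shape, and the function name are placeholders, not recommendations.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schedule: one retention period per record type.
RETENTION = {
    "transcript": timedelta(days=90),
    "analytics_event": timedelta(days=30),
}


def purge_expired(records, now=None):
    """Split records into (kept, deleted) using each type's retention period.

    Records of a type with no schedule entry are deleted, on the view that
    data without a documented purpose should not be kept.
    """
    now = now or datetime.now(timezone.utc)
    kept, deleted = [], []
    for record in records:
        limit = RETENTION.get(record["type"])
        expired = limit is None or now - record["created_at"] > limit
        (deleted if expired else kept).append(record)
    return kept, deleted
```

In practice a job like this would run on a schedule against the real database, and the `deleted` list would feed the audit log rather than being discarded silently.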

The "delete my data" function must actually work. When a user requests erasure, you need to delete their chat history from:

- Your chatbot database

- Your analytics systems

- Any third-party processors (including AI providers)

- Backup systems (within reasonable timeframes)

Test this. Many organizations discover their deletion processes don't actually delete anything.
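A deletion test can be as simple as erasing a user from every system and then checking which systems still hold their data. The sketch below assumes each system exposes `delete_user` and `has_user`; `InMemoryStore` is a stand-in, and real adapters for your database, analytics tool, or AI provider would need writing.

```python
class InMemoryStore:
    """Stand-in for a database, analytics system, or processor API client."""

    def __init__(self, name, rows):
        self.name = name
        self.rows = rows  # maps user_id to that user's stored items

    def delete_user(self, user_id):
        self.rows.pop(user_id, None)

    def has_user(self, user_id):
        return user_id in self.rows


def erase_everywhere(user_id, stores):
    """Delete the user from every store, then verify.

    Returns the names of stores that still hold the user's data,
    so an empty list means the erasure was verified everywhere.
    """
    for store in stores:
        store.delete_user(user_id)
    return [store.name for store in stores if store.has_user(user_id)]
```

The verification pass is the point: a store whose delete call quietly does nothing shows up in the returned list instead of going unnoticed.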

6. Enable User Rights Through the Chat Interface (Articles 15-22)

GDPR grants users specific rights over their personal data. The smartest implementations let users exercise these rights directly in the chatbot, with no email to a privacy inbox required.

Rights your chatbot should support:

- Right to access (Article 15): User types "show my data" → chatbot exports their conversation history and any stored profile information.

- Right to rectification (Article 16): User says "my email is wrong" → chatbot updates it or routes to a process that does.

- Right to erasure (Article 17): User types "delete my data" → chatbot confirms and executes deletion, provides confirmation.

- Right to object (Article 21): User says "don't use my data for analytics" → chatbot flags their account to exclude from processing.

Example implementation:

User: Delete my conversation history

Chatbot: I can delete all your chat data with us. This includes:

- Your 3 previous conversations (Jan 12, Jan 18, Feb 2)

- Your stored email address

This cannot be undone. Confirm deletion?

[Yes, delete everything] [Cancel]

User: [Yes, delete everything]

Chatbot: Done. All your personal data has been deleted.

Confirmation reference: DEL-2025-8847

This approach builds user trust while ensuring compliance. Users feel in control because they are in control.
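To make the in-chat flow concrete, here is a simple sketch that maps a message to the right it invokes before the message reaches the model. The trigger phrases are assumptions taken from the examples above; a production bot would likely use its intent classifier instead of substring matching.

```python
# Hypothetical trigger phrases for each right, checked in order.
RIGHTS_PHRASES = [
    (("show my data", "export my data"), "access"),
    (("is wrong", "update my"), "rectification"),
    (("delete my",), "erasure"),
    (("don't use my data", "do not use my data"), "objection"),
]

# Irreversible actions should be confirmed before they run.
NEEDS_CONFIRMATION = {"erasure"}


def detect_rights_request(message: str):
    """Return the right a message invokes, or None for ordinary chat."""
    text = message.lower()
    for phrases, right in RIGHTS_PHRASES:
        if any(phrase in text for phrase in phrases):
            return right
    return None
```

Routing these requests to deterministic handlers, and not to the language model, keeps the outcome predictable and gives you a clean event to log.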

7. Don't Let AI Make Consequential Decisions Alone (Article 22)

GDPR restricts "solely automated" decisions that produce "legal effects" or "significantly affect" individuals. For AI chatbots, this matters more than most realize.

What counts as a "significant effect":

- Approving or denying a loan application

- Determining insurance eligibility or pricing

- Making hiring or firing decisions

- Denying service access based on AI assessment

- Medical triage or treatment recommendations

What's generally fine:

- Answering FAQs

- Routing to appropriate department

- Providing product recommendations (non-binding)

- Scheduling appointments

If your chatbot makes consequential decisions, you must:

- Obtain explicit consent for automated decision-making, OR

- Ensure human oversight before final decisions execute

Example of compliant design:

Bad: AI chatbot reviews loan application → automatically approves/denies → notifies user

Good: AI chatbot reviews loan application → generates recommendation → human reviews → decision made → notifies user with explanation

Explainability requirement: Users have the right to "meaningful information about the logic involved" in automated decisions. Your chatbot needs to explain why it reached a conclusion, not just state the outcome.

User: Why was my application flagged for review?

Chatbot: Based on the information provided, our system identified that your stated income requires additional verification. This is a standard check; a team member will review within 24 hours.

You can also request a fully manual review by typing "human review."

This is also good product design. Users accept AI decisions more readily when they understand the reasoning.
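The compliant design can be sketched as a function that will not produce a final decision without a named human reviewer, and that carries the model's reasons through to the explanation. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    outcome: str   # proposed by the model, e.g. "approve" or "deny"
    reasons: list  # human-readable factors behind the proposal


@dataclass
class Decision:
    outcome: str
    reviewed_by: str
    explanation: str


def finalize(recommendation, reviewer_id, reviewer_outcome=None):
    """Turn a model recommendation into a decision only after human review.

    The reviewer may override the model; if no override is given,
    the reviewer is confirming the model's proposed outcome.
    """
    if not reviewer_id:
        raise ValueError("A consequential decision needs a named human reviewer.")
    outcome = reviewer_outcome or recommendation.outcome
    explanation = "Factors considered: " + "; ".join(recommendation.reasons)
    return Decision(outcome, reviewer_id, explanation)
```

Because `Decision` can only be built through `finalize`, the "AI decides alone" path from the bad example has no code route to the user.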

Architecture Approaches for GDPR Compliance

Your chatbot's architecture determines your compliance ceiling. Some setups make GDPR easy. Others make it nearly impossible.

Decision shortcut: If your chatbot handles health data, financial information, HR records, or anything requiring a Data Protection Impact Assessment (DPIA), skip Option 1 (cloud APIs with guardrails). Choose between self-hosted (if you have the team) or managed self-hosting (if you don't).


Prem AI: GDPR Compliance Without the Infrastructure Pain

You've seen the requirements. You know the architecture options. Here's why teams in regulated industries skip the DIY route.

The Problem With DIY Compliance

Self-hosting gives you control. But it also gives you:

- GPU infrastructure to manage

- Security patches to apply

- Compliance documentation to maintain

- 2am debugging when inference breaks

Most teams don't have MLOps capacity. They want compliant AI, not a second job.

What Prem AI Handles

- Swiss Jurisdiction: Prem AI operates under Swiss data protection law, recognized by the EU as providing adequate protection. No Schrems II headaches. No complex transfer mechanisms.

- Zero Data Retention: Your prompts and outputs aren't stored. Nothing to delete because nothing is kept. This simplifies your retention policy to one line: "AI provider retains nothing."

- EU Data Residency: Processing stays in the EU. When auditors ask where data goes, you have a simple answer.

Built-In Compliance Features

- Audit logs ready for export

- Consent management integration

- DPIA templates for AI chat use cases

- Data subject request handling

OpenAI-Compatible API: Already built on OpenAI? Switching is a config change, not a rewrite.

    from openai import OpenAI

    # Before: requests go to OpenAI's default endpoint
    client = OpenAI(api_key="sk-...")

    # After: same client, pointed at the Prem AI endpoint
    client = OpenAI(
        api_key="your-prem-key",
        base_url="https://api.premai.io/v1",
    )

Same code. Different compliance posture.

Who Uses This

- Healthcare - Patient chat triage that meets HIPAA + GDPR. No data leaves the controlled environment.

- Finance - Customer service bots handling account queries. PCI-DSS and GDPR covered.

- Legal - Document Q&A without client data hitting third-party servers.

- HR - Employee chatbots processing sensitive workplace data under GDPR's stricter rules for employment context.

DIY compliant infrastructure: 2-3 engineers, 3-6 months, ongoing maintenance. Managed self-hosting: API key, afternoon integration, compliance on day one. If your bottleneck is shipping product, not building infrastructure, this is the faster path.

→ Talk to the team | See enterprise features

GDPR Meets the EU AI Act

GDPR covers data. The EU AI Act covers the AI system itself. If you're building chatbots in the EU, you need to think about both. The EU AI Act classifies AI systems by risk level. Most chatbots fall into "limited risk", which means one main obligation:

Transparency. Users must know they're talking to AI, not a human.

That's it for most chatbots. You're probably doing this already.

It gets stricter when a chatbot use case falls into the "high risk" classification:

- Employment decisions (screening, hiring)

- Credit or insurance assessments

- Access to essential services

- Education admissions or grading

High-risk systems face additional requirements: conformity assessments, technical documentation, human oversight, and accuracy monitoring.
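For a first-pass triage across a portfolio of chatbots, the two tiers described here can be written as a lookup. This is a simplification for internal planning, not a legal classification; the use-case labels and obligation names are hypothetical shorthand for the lists above.

```python
# Hypothetical labels for the high-risk use cases listed above.
HIGH_RISK_USES = {
    "employment_screening",
    "credit_assessment",
    "insurance_assessment",
    "essential_services_access",
    "education_admissions",
    "education_grading",
}


def ai_act_obligations(use_case: str) -> dict:
    """Return a rough risk tier and the obligations it brings."""
    if use_case in HIGH_RISK_USES:
        return {
            "tier": "high",
            "obligations": [
                "ai_disclosure",
                "conformity_assessment",
                "technical_documentation",
                "human_oversight",
                "accuracy_monitoring",
            ],
        }
    # Everything else is treated as limited risk: disclose that it is AI.
    return {"tier": "limited", "obligations": ["ai_disclosure"]}
```

Anything that lands in the high tier should go to legal review; the lookup only tells you which bots to look at first.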

One date to plan around: August 2026 is the deadline for full enforcement for high-risk systems.

What To Do Now

For most chatbots:

- Disclose AI use clearly (you should already do this for GDPR)

- Document your system's purpose and limitations

For high-risk use cases:

- Start conformity assessment planning

- Implement human oversight for consequential decisions

- Document training data and model behavior

Good news: GDPR compliance gives you a head start.

If you've built for GDPR, you're already halfway to AI Act compliance.

FAQ

1. Is ChatGPT GDPR compliant?

Not by default. On its standard consumer tiers, ChatGPT can use your inputs for training unless you opt out, and it lacks EU data residency. ChatGPT Enterprise and the API offer better controls (no training on your data by default, DPA available), but you're still responsible for consent, transparency, and retention on your end. The tool alone doesn't make you compliant.

2. Do I always need user consent for chatbot data?

Not always. Consent is one legal basis. If the chatbot is necessary to deliver a service the user requested (contract performance), that can work too. But for most marketing, support, or lead-gen chatbots collecting personal data, consent is the safest route.

3. Can I use US-based AI providers under GDPR?

Yes, but it's complicated. You need a valid transfer mechanism, typically Standard Contractual Clauses plus supplementary measures. The EU-US Data Privacy Framework helps if your provider is certified. Easier path: choose providers with EU data residency or zero data retention.

4. What are the fines for non-compliant AI chatbots?

Same as any GDPR violation: up to €20 million or 4% of global annual revenue, whichever is higher. In practice, most chatbot-related fines range €50K–€1.5M. The reputational damage often costs more than the fine itself.

5. Do I need a Data Protection Officer to run a chatbot?

Only if your core activities involve large-scale, regular and systematic monitoring of individuals or large-scale processing of special categories of data (health, biometrics), or if you are a public authority. A simple support chatbot probably doesn't trigger DPO requirements. But if your chatbot processes sensitive data at scale, yes, you likely need one.

Conclusion

GDPR compliance for AI chatbots is the cost of operating in the EU market.

The requirements are clear: valid legal basis, transparency, data minimization, purpose limitation, retention limits, user rights, human oversight for consequential decisions. Miss any of these, and you're exposed.

You have three paths:

- Cloud APIs with guardrails - works for low-risk use cases, but you inherit compliance complexity

- Self-hosted - maximum control, but requires infrastructure and MLOps capacity

- Managed self-hosting - compliance built in, no infrastructure burden

If you're in healthcare, finance, legal, or HR (anywhere sensitive data flows through chat), the math favors option three.

Prem AI gives you GDPR compliance by default: Swiss jurisdiction, zero data retention, EU residency, audit-ready logs. Same OpenAI-compatible API, different compliance posture.

→ Book a demo to see how it works for your use case.