How to Save 90% on LLM API Costs Without Losing Performance

Learn why LLM API costs skyrocket and discover 7 proven strategies to reduce spending by up to 90% without losing performance. Explore real-world examples, industry insights, and how PremAI helps you build smarter, scalable AI workflows.

Introduction: The Rising Cost of LLMs

Large Language Models (LLMs) have revolutionized the way we build intelligent applications. From customer support automation to data analysis and content generation, developers are integrating GPT-5, LLaMA, Claude, and other models into real-world workflows at unprecedented speed.

But with great power comes a heavy price tag. LLM APIs are expensive, and costs scale rapidly as usage grows. A prototype that costs a few dollars per day can quickly become a five-figure monthly bill when deployed at enterprise scale. For startups and enterprises alike, this is a major concern:

- How can you keep innovation fast while staying within budget?

- Can you reduce API spend without killing performance?

- And how can you leverage tools like PremAI to make this process more efficient?

In this blog, we’ll explore why LLM costs escalate, seven proven strategies to cut spending, advantages and disadvantages of cost reduction, real-world examples, and how PremAI fits into your AI workflow.

Why Do LLM API Costs Escalate?

Before we look at strategies, let’s break down the core reasons:

- Token Usage - LLMs charge by tokens (input + output). Long prompts, unnecessary context, and verbose responses add up.

- Model Selection - Using GPT-4 for every query when GPT-3.5 or an open-source model would suffice inflates costs.

- High Latency Calls - Slow, unoptimized queries increase compute usage.

- Overprovisioning - Teams often overestimate capacity, paying for idle usage.

- Untracked Spend - Without monitoring tools, costs spiral without clear accountability.

👉 Key takeaway: Most organizations overspend not because LLMs are inherently costly, but because they lack optimization.

7 Proven Strategies to Reduce LLM Costs

Based on industry best practices and insights from top AI builders, here are seven strategies customized for PremAI users, along with examples that PremAI supports.

1. Use the Right Model for the Job

Not every task needs a massive LLM. With Prem Studio, enterprises can build Customized Small Language Models (SLMs) tuned on their private data. These models run faster, cost less, and deliver higher relevance than generic APIs.

Example: A fintech company used Prem Studio to fine-tune a smaller open-source model on their internal compliance documents. Instead of relying on expensive closed APIs, they cut response times by 40%, reduced costs by 65%, and kept all data fully secure within their VPC.

2. Optimize Prompts and Responses

Prompt engineering = cost engineering. Longer prompts and bloated outputs burn unnecessary tokens. Prem Studio’s tools make it easy to test, refine, and compare prompts across models for efficient AI agent performance.

Example: A support team using Prem Studio replaced long, verbose prompts with short, structured ones like: Instead of “Retrieve all past interactions this customer had and explain in detail what issues were raised,” use “Summarize this customer’s last 3 support tickets in 2 lines.”

With PremAI Studio, teams can A/B test prompts across models, see cost and accuracy trade-offs instantly, and lock in the most efficient version, cutting both latency and token spend.

3. Truncate Inputs with Pre-processing

LLM pricing depends on tokens. Sending full PDFs, chats, or documents wastes cost. Prem Studio pre-processing trims noise and extracts only relevant sections optimizing both accuracy and spend.

Example: A legal team working with Prem Studio trimmed full contract PDFs into only clauses relevant to compliance checks before sending them to the model. This reduced token usage by 50%, speed up responses, and ensured lawyers only saw the sections that mattered.

4. Hybrid Inference (Mix Local + Cloud Models)

Enterprise AI doesn’t need to choose between local open-source models and cloud APIs. With Prem Studio’s model orchestration, you can route simple tasks to lightweight models and advanced reasoning to powerful APIs.

Example: A healthcare startup used Prem Studio’s orchestration to run quick symptom triage queries on small LMs, while sending complex diagnostic reasoning to big LMs. This hybrid setup cut costs by 60% without compromising on accuracy for critical cases.

5. Monitor Usage and Set Alerts

You can’t optimize what you don’t measure. Prem Studio dashboards track usage, spend, and performance, and apply smart routing to balance cost and accuracy in real time.

6. Cache Frequent Queries

Many enterprise AI use cases involve repetitive queries. With caching, you can serve FAQs instantly without hitting the model each time, cutting cost and latency.

7. Batch Requests Where Possible

Running multiple small requests separately wastes compute. Batching LLM calls lets you process groups of inputs together, making AI workflows more scalable and cost-effective.

Advantages and Disadvantages of Cost-Cutting

Like any strategy, reducing costs comes with trade-offs.

✅ Advantages

1. Lower API bills (up to 90% savings)

By fine-tuning smaller, domain-specific models instead of constantly hitting expensive base LLM APIs, you reduce token usage and latency. This means fewer wasted tokens on irrelevant context, tighter prompts, and predictable costs. Teams can reallocate budget to product growth instead of API overages.

2. More efficient developer workflows

Developers spend less time on prompt engineering and more time building features. With tuned models that already understand domain context, prompts are shorter, cleaner, and require fewer retries. This cuts down debugging cycles, accelerates prototyping, and allows faster iteration.

3. Easier to scale AI applications sustainably

Fine-tuned models are lighter, faster, and cheaper to run in production compared to large generic LLMs. This makes it easier to deploy at scale without breaking infra budgets. You can serve more users simultaneously, maintain reliability, and reduce environmental/energy overhead as usage grows.

⚠️ Disadvantages

- Over-optimization can reduce model accuracy

Over-tuning a model to specific benchmarks or datasets can cause it to underperform in real-world scenarios.

✅ Prevention: Test models on diverse, real-world datasets, run controlled pilot deployments, and track accuracy against practical use cases before rolling out fully. - Cheaper models may lack advanced reasoning

Lightweight or budget-friendly models can struggle with complex problem-solving or nuanced queries.

✅ Prevention: Choose models that align with task complexity, use cost-effective models for routine tasks, and stronger models for advanced reasoning. Hybrid deployments can balance cost and capability. - Requires ongoing monitoring and adjustment

AI systems can degrade over time due to changing data, customer needs, or system updates.

✅ Prevention: Set up continuous monitoring with automated evaluation pipelines, regular audits, and user feedback loops to catch issues early and make timely adjustments.

👉 PremAI’s role: By allowing experimentation across multiple models and testing workflows, PremAI helps you balance cost savings with performance.

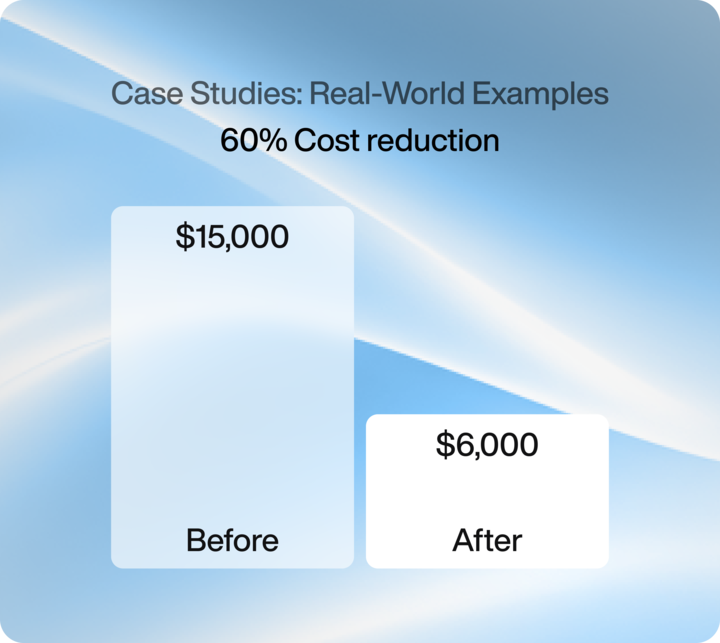

Case Studies: Real-World Examples

- Case Study 1: Startup Scaling to Production

A SaaS startup building an AI writing assistant found costs ballooning with GPT-4. By switching 70% of calls to Qwen models on Prem Studio and adding caching, they cut spend from $15,000/month to $4,500/month, while maintaining customer satisfaction. - Case Study 2: Enterprise AI Deployment

A global enterprise used hybrid inference running Small models for basic tasks, and big models for complex reasoning. Combined with monitoring and batching, this reduced costs by 60%.

PremAI Benefits You Can Count On

While PremAI doesn’t directly manage API billing, it indirectly helps teams cut costs by:

- Allowing multi-model experimentation, choose the best fit for cost vs performance.

- Providing a studio for rapid prototyping so teams don’t waste tokens.

- Supporting open-source model integration for hybrid setups.

- Accelerating developer workflows, which reduces engineering costs overall.

👉 PremAI = smarter workflows = indirect cost savings.

Conclusion: Building Sustainable AI with PremAI

As LLM adoption accelerates, cost management is no longer optional, it’s mission-critical. Startups risk burning through funding; enterprises risk unsustainable scaling.

By applying the seven proven strategies, right model selection, prompt optimization, caching, batching, truncation, hybrid inference, and monitoring, teams can reduce LLM API costs by up to 90% without sacrificing performance.

And while you implement these strategies, PremAI empowers you to test, compare, and orchestrate models faster than ever, helping you focus on innovation, not just optimization.

Call to Action

🚀 Ready to cut costs and scale smarter?

👉 Try Prem Studio today and start building powerful AI workflows that save money while delivering performance.

💡Join the Conversations

We’d love to hear your thoughts! Share your perspective and tag us on X and LinkedIn. let’s talk about building smarter, scalable AI together.

FAQs

1. Why are LLM API costs so high?

LLM APIs charge based on tokens (input + output). Long prompts, verbose outputs, and using expensive models like GPT-4 for simple tasks make costs escalate quickly.

2. How much can I realistically save on LLM costs?

By applying strategies like prompt optimization, caching, batching, and hybrid inference, companies can reduce costs by 50–90% without major performance loss.

3. Does PremAI provide direct cost management tools?

No, PremAI doesn’t directly track or manage billing. Instead, it helps reduce costs indirectly by enabling multi-model experimentation, efficient prototyping, and hybrid workflows.

4. What’s the best model for reducing costs?

It depends on the use case. GPT-3.5 or open-source models often work for summarization or classification, while GPT-4 or Claude may be reserved for complex reasoning.

5. Can I combine open-source and API models together?

Yes. This hybrid inference strategy allows you to run cheap local models for simple tasks and use premium APIs only for advanced reasoning.

6. How often should I monitor usage?

Ideally, daily or weekly. Setting cost alerts ensures you catch unexpected spikes early and switch to cheaper models when needed.

7. Do cost optimizations reduce accuracy?

If over-optimized, yes. The key is balance, trimming unnecessary tokens and using the right model without compromising the quality your users expect.

Prem Studio provides secure, private compute in Switzerland for building and deploying your own models.