How to Succeed with Custom Reasoning Models

Custom reasoning models enable multi-step reasoning beyond LLMs. Learn how PremAI helps enterprises build scalable, explainable, high-performance AI.

Recent advances in AI have highlighted the importance of custom reasoning models that explicitly perform complex, multi-step reasoning. Unlike standard Large Language Models (LLMs), reasoning models use deliberate, step-by-step inference to achieve greater accuracy, interpretability, and performance in challenging scenarios. This article guides developers and businesses through the technical methods needed to create, optimize, and deploy custom reasoning models effectively.

The Rise of Custom Reasoning Models

Standard Large Language Models (LLMs) such as GPT-4 or LLaMA, while powerful, primarily operate in a way that resembles System 1 thinking: fast and intuitive. They excel at tasks that closely match patterns observed during training but falter on problems that require deeper reasoning or extrapolation beyond familiar contexts.

Custom Reasoning Models, often referred to as Reasoning Language Models (RLMs), explicitly address these limitations. By integrating deliberate, structured reasoning processes, known as "System 2 thinking", these models can tackle sophisticated, multi-faceted problems through approaches such as tree-search algorithms or iterative logic-based exploration. These architectures allow models not only to mimic known patterns but also to reason about unfamiliar scenarios and deduce contextually accurate solutions.

The shift from standard LLMs to custom reasoning models arises from significant technological advancements across three main pillars:

Large Language Models as Knowledge Reservoirs

LLMs such as GPT-4 or LLaMA have revolutionized natural language processing by encoding extensive linguistic and factual knowledge in their neural weights. However, their reasoning capability arises primarily from statistical language modeling and next-token prediction. Consequently, their output often remains shallow on unfamiliar problems, reflecting interpolation within known contexts rather than genuine extrapolation.

Reinforcement Learning: A Structured Reasoning Catalyst

Reinforcement Learning (RL) is central to building explicit reasoning capabilities into custom models. Through iterative exploration and reward-driven feedback loops, RL improves reasoning performance by systematically refining model outputs. In particular, Reinforcement Learning from Human Feedback (RLHF) and search algorithms such as Monte Carlo Tree Search (MCTS) strengthen the reasoning process, enabling models to navigate large, complex search spaces and iteratively converge on high-quality solutions.

Empirical research from the STILL project shows that RL can significantly improve the reasoning capabilities of models such as Qwen2.5-32B, demonstrating that even highly capable fine-tuned models benefit substantially from further RL-based optimization.
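A minimal sketch of such a reward-driven feedback loop, assuming a toy setting: REINFORCE (a basic policy-gradient method, used here purely for illustration) learns a softmax policy over three hypothetical reasoning strategies, with a stub verifier standing in for a real reward signal. The strategy names and success rates are invented.

```python
import math
import random

# Hypothetical strategies with invented probabilities of yielding a verifiably correct answer.
SUCCESS_RATE = {"guess": 0.1, "short_cot": 0.5, "long_cot": 0.8}
STRATEGIES = list(SUCCESS_RATE)


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def verifier_reward(strategy, rng):
    """Stub verifier: 1.0 if the simulated answer is correct, else 0.0."""
    return 1.0 if rng.random() < SUCCESS_RATE[strategy] else 0.0


def reinforce(steps=3000, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * len(STRATEGIES)
    baseline = 0.0  # running-average reward, used to reduce gradient variance
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(STRATEGIES)), weights=probs)[0]  # explore
        reward = verifier_reward(STRATEGIES[i], rng)               # feedback
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # Policy-gradient update: d log pi(i) / d logit_j = 1[j == i] - probs[j]
        for j in range(len(logits)):
            logits[j] += lr * advantage * ((1.0 if j == i else 0.0) - probs[j])
    return dict(zip(STRATEGIES, softmax(logits)))


policy = reinforce()
print(policy)  # most probability mass should end up on "long_cot"
```

The baseline depends only on past rewards, so subtracting it lowers variance without biasing the expected update. In a real RLM pipeline the "strategy" would be a sampled reasoning trace and the reward would come from a verifier, reward model, or human preference data.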

High-Performance Computing (HPC)

The growth of reasoning models has also been enabled by advancements in computational infrastructure. Modern reasoning architectures often require significant computational resources due to the iterative, exploratory nature of their inference processes. Innovations such as GPU/TPU accelerators, parallel computing techniques, and efficiency-focused optimizations (quantization, pruning, sparsity) play an essential role in scaling these models effectively and economically, ensuring that reasoning models remain practical and deployable at scale.
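Quantization is the simplest of these optimizations to show in a few lines. The sketch below applies symmetric per-tensor int8 quantization to a handful of made-up weights in plain Python; production stacks typically use per-channel or per-group scales and optimized kernels, which this deliberately omits.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    return [scale * v for v in q]


weights = [0.42, -1.30, 0.05, 0.91, -0.27]  # made-up float weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q)        # each value fits in 8 bits instead of 32 for float32
print(max_err)  # rounding error is at most scale / 2
```

Storing 8-bit integers plus one float scale cuts weight memory roughly 4x relative to float32, at the cost of a bounded rounding error per weight.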

Advantages and Technical Challenges of Custom Reasoning Models

The shift toward custom reasoning models introduces notable advantages:

- Improved Accuracy and Interpretability: Explicit reasoning mechanisms provide clearer visibility into decision-making processes, enabling easier debugging, verification, and higher confidence in critical outcomes.

- Extrapolation Capabilities: Whereas standard LLMs are largely confined to their training distributions, RLMs can systematically explore new reasoning paths, allowing them to generate contextually accurate solutions to previously unseen problems.

However, the successful deployment of custom reasoning models involves significant technical hurdles:

- Computational Complexity: Structured reasoning processes typically require iterative inference steps, resulting in higher computational and inference costs. Managing these costs while maintaining reasoning quality is critical.

- Quality and Availability of Training Data: The accuracy and effectiveness of reasoning models heavily depend on the quality of their training data. Synthetic and verified data generation methods are essential yet pose their own challenges in terms of scalability and cost-efficiency.

- Robust Evaluation and Benchmarking: Standard benchmarks often inadequately differentiate between true reasoning and superficial pattern matching. More sophisticated evaluation methods, such as Probabilistic Mixture Models (PMM) and Information-Theoretic Consistency (ITC) analyses, provide deeper insights into actual reasoning capabilities.

The following sections will further explore these technical strategies and methodologies in detail, providing a clear roadmap for successfully creating, optimizing, and deploying custom reasoning models without relying on specific platform implementations.

Building and Optimizing Custom Reasoning Models

Creating effective custom reasoning models demands specialized architectures, strategic fine-tuning, and advanced optimization techniques. This section details essential methodologies for successfully implementing and refining reasoning models, ensuring robust and reliable performance across diverse and complex problem domains.

Reasoning Architectures and Explicit Structures

Reasoning Language Models (RLMs) distinguish themselves from traditional LLMs by employing explicit reasoning frameworks that facilitate deliberate multi-step inference. These structures provide enhanced interpretability, allowing clearer insights into the model’s reasoning process, easier debugging, and increased confidence in the correctness of outputs.

Implicit vs. Explicit Reasoning Architectures

Reasoning models can adopt either implicit or explicit reasoning architectures, each offering distinct advantages and limitations:

- Implicit Reasoning Models embed reasoning structures within the neural weights, resulting in faster inference but limited interpretability. Models such as Alibaba's QwQ encapsulate complex reasoning implicitly, which leads to black-box behavior that is difficult to audit or adjust systematically.

- Explicit Reasoning Models, conversely, externalize the reasoning process. Techniques such as Monte Carlo Tree Search (MCTS), Beam Search, and Tree of Thoughts represent reasoning paths explicitly, allowing systematic exploration and iterative refinement. OpenAI's reasoning models (e.g., o1 and o3) are widely thought to follow this kind of architecture, although OpenAI has not published their internals and does not expose their raw reasoning traces. Explicit structures make the reasoning process easier to inspect, which is crucial for mission-critical and complex tasks.
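To make the explicit, search-based style concrete, here is a minimal UCT-style MCTS over a toy "reasoning" task: choose a sequence of operations (+1, *2) that turns 1 into 10 in exactly four steps. Each tree node is an intermediate reasoning state. The task, the reward, and the exploration constant `c=1.4` are illustrative assumptions.

```python
import math
import random

# Toy "reasoning" task (an illustrative assumption): reach TARGET from START in exactly DEPTH steps.
OPS = {"+1": lambda x: x + 1, "*2": lambda x: x * 2}
START, TARGET, DEPTH = 1, 10, 4


class Node:
    def __init__(self, value, path, parent=None):
        self.value = value      # intermediate result, i.e. the current reasoning state
        self.path = path        # operations (reasoning steps) taken so far
        self.parent = parent
        self.children = {}      # op name -> Node
        self.visits = 0
        self.total_reward = 0.0

    def is_terminal(self):
        return len(self.path) == DEPTH

    def is_fully_expanded(self):
        return len(self.children) == len(OPS)


def reward(value):
    return 1.0 if value == TARGET else 0.0


def uct_select(node, c=1.4):
    # Mean reward (exploitation) plus a visit-count bonus (exploration).
    return max(
        node.children.values(),
        key=lambda ch: ch.total_reward / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def rollout(value, steps_left, rng):
    # Random playout to the end of the episode.
    for _ in range(steps_left):
        value = OPS[rng.choice(list(OPS))](value)
    return reward(value)


def mcts(iterations=500, seed=0):
    rng = random.Random(seed)
    root = Node(START, [])
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes.
        while not node.is_terminal() and node.is_fully_expanded():
            node = uct_select(node)
        # 2. Expansion: add one untried step.
        if not node.is_terminal():
            op = rng.choice([o for o in OPS if o not in node.children])
            child = Node(OPS[op](node.value), node.path + [op], parent=node)
            node.children[op] = child
            node = child
        # 3. Simulation
        r = rollout(node.value, DEPTH - len(node.path), rng)
        # 4. Backpropagation
        while node is not None:
            node.visits += 1
            node.total_reward += r
            node = node.parent
    # Read out the most-visited line of reasoning.
    node = root
    while node.children:
        node = max(node.children.values(), key=lambda ch: ch.visits)
    return node.path, node.value


path, value = mcts()
print(path, value)
```

In an RLM, expansion would sample candidate reasoning steps from the model and the rollout reward would come from a verifier or reward model; both are assumptions outside this sketch.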

Prominent Reasoning Paradigms

To effectively structure explicit reasoning processes, several paradigms are commonly employed:

- Chain of Thought (CoT) explicitly generates linear sequences of reasoning steps. CoT significantly improves accuracy by breaking down complex problems into manageable, clearly articulated steps. This method has demonstrated considerable success in multi-step logical reasoning and mathematical problem-solving tasks.

- Tree of Thoughts (ToT) extends CoT by branching into multiple candidate reasoning paths. It uses a tree structure to explore several intermediate outcomes, allowing backtracking and refinement so that the model can converge on better solutions through broader exploration.

- Graph of Thoughts (GoT) further extends ToT, using graph-based structures to simultaneously explore multiple interconnected reasoning pathways. By facilitating flexible transitions across different reasoning nodes, GoT supports nuanced, complex reasoning scenarios that exceed the capabilities of linear or purely tree-based methods.
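A minimal Tree-of-Thoughts-style sketch, assuming the Game of 24 as a toy task (combine four numbers with +, -, *, / to reach 24). Each thought combines two numbers; every kept state is branched, the candidates are evaluated, and only the best `beam_width` survive each level, which amounts to breadth-first search with pruning. The evaluator here is an exact brute-force check standing in for the LLM-based evaluation a real ToT system would use, and all function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def propose(state):
    """Generate candidate next thoughts: combine two numbers with one arithmetic operation."""
    nums, steps = state
    children = []
    for i, j in combinations(range(len(nums)), 2):
        a, b = nums[i], nums[j]
        rest = [nums[k] for k in range(len(nums)) if k not in (i, j)]
        options = [(a + b, f"{a}+{b}"), (a * b, f"{a}*{b}"),
                   (a - b, f"{a}-{b}"), (b - a, f"{b}-{a}")]
        if b != 0:
            options.append((a / b, f"{a}/{b}"))
        if a != 0:
            options.append((b / a, f"{b}/{a}"))
        for value, expr in options:
            children.append((rest + [value], steps + [f"{expr}={value}"]))
    return children


def solvable(nums, target=24):
    """Brute-force evaluator; stands in for an LLM judging whether a state can still succeed."""
    if len(nums) == 1:
        return nums[0] == target
    return any(solvable(child_nums, target) for child_nums, _ in propose((nums, [])))


def tree_of_thoughts(numbers, beam_width=3, target=24):
    frontier = [([Fraction(n) for n in numbers], [])]
    for _ in range(len(numbers) - 1):
        # Branch: expand every kept state into candidate thoughts.
        candidates = [child for state in frontier for child in propose(state)]
        # Evaluate and prune: promising states first (stable sort), keep the top beam_width.
        candidates.sort(key=lambda s: solvable(s[0], target), reverse=True)
        frontier = candidates[:beam_width]
    for nums, steps in frontier:
        if nums[0] == target:
            return steps
    return None


print(tree_of_thoughts([4, 7, 8, 8]))
```

Exact rational arithmetic via `Fraction` avoids floating-point misses on divisions. Swapping `solvable` for a cheaper, imperfect scorer is where the real trade-off between breadth and cost appears.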

Fine-Tuning Strategies: SFT and Reinforcement Learning

Reasoning models significantly benefit from specialized fine-tuning processes. These processes adapt base LLMs to specific reasoning tasks through supervised data, reinforcement learning methods, and automated feedback loops:

Supervised Fine-Tuning (SFT)

Supervised Fine-Tuning remains foundational in developing reasoning models. It relies on carefully prepared datasets that provide explicit reasoning steps and clear solution paths. Models trained on these structured examples learn not just to produce correct answers but also to articulate coherent reasoning. Datasets annotated with CoT reasoning steps are particularly effective at priming models for subsequent reinforcement learning.
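The sketch below shows one common way to turn a CoT-annotated problem into an SFT training example: the reasoning steps and answer become the target, and prompt tokens are masked out of the loss. The prompt template, whitespace tokenizer, and function names are hypothetical; `-100` is the default `ignore_index` of PyTorch's `CrossEntropyLoss`.

```python
IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss


def tokenize(text, vocab):
    # Hypothetical whitespace tokenizer that assigns ids on the fly;
    # a real pipeline would use the base model's own tokenizer.
    return [vocab.setdefault(tok, len(vocab)) for tok in text.split()]


def build_sft_example(question, steps, answer, vocab=None):
    vocab = {} if vocab is None else vocab
    prompt = f"Question: {question}\nLet's think step by step."
    target = "\n".join(f"Step {i}: {s}" for i, s in enumerate(steps, 1))
    target += f"\nAnswer: {answer}"
    prompt_ids = tokenize(prompt, vocab)
    target_ids = tokenize(target, vocab)
    return {
        "input_ids": prompt_ids + target_ids,
        # Mask the prompt so the loss covers only the reasoning steps and the answer.
        "labels": [IGNORE_INDEX] * len(prompt_ids) + target_ids,
    }


example = build_sft_example("What is 3 * (2 + 4)?", ["2 + 4 = 6", "3 * 6 = 18"], "18")
print(example["labels"])
```

Labels stay aligned with `input_ids` here; many causal-LM training setups shift labels by one position internally, which is assumed rather than shown.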

Reinforcement Learning (RL) Optimization

Reinforcement Learning methods substantially enhance reasoning capabilities by introducing iterative feedback mechanisms that enable models to refine their reasoning pathways actively:

- Reinforcement Learning from Human Feedback (RLHF) involves human-labeled preferences guiding the model towards outcomes aligned with human expectations and reasoning accuracy. Although effective, RLHF requires extensive human annotation efforts, limiting scalability.

- Autonomous RL Optimization Methods, such as Monte Carlo simulations combined with Process Reward Models (PRMs) and Outcome Reward Models (ORMs), reduce dependency on human feedback. PRMs score individual reasoning steps, while ORMs score only the final outcome; both let the training loop verify reasoning automatically against predefined rewards, so models can self-improve without extensive human involvement. Research efforts such as the STILL project have shown that RL training markedly improves even strong pre-trained reasoning models.
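A minimal sketch of how Monte Carlo rollouts can produce step-level labels without human annotation: for each prefix of a solution, sample several completions and record the fraction that reach the correct answer. The rollout function is a hypothetical stub standing in for sampling from the policy model, and taking the minimum step value is just one common way to aggregate a PRM-style score.

```python
import random


def rollout_from_prefix(prefix_ok, rng, p_success=0.9):
    """Hypothetical stub: once the prefix contains an error, completions fail;
    otherwise a completion reaches the correct answer with probability p_success.
    A real pipeline would sample continuations from the model and check the answer."""
    return prefix_ok and rng.random() < p_success


def monte_carlo_step_values(step_correct, n_rollouts=64, seed=0):
    rng = random.Random(seed)
    values, prefix_ok = [], True
    for ok in step_correct:
        prefix_ok = prefix_ok and ok
        hits = sum(rollout_from_prefix(prefix_ok, rng) for _ in range(n_rollouts))
        values.append(hits / n_rollouts)  # estimated chance of success from this step
    return values


def outcome_reward(final_answer, reference):
    # ORM-style signal: one scalar for the whole solution.
    return 1.0 if final_answer == reference else 0.0


def process_reward(step_values):
    # PRM-style aggregation: a solution is only as good as its weakest step.
    return min(step_values)


# A 4-step solution whose third step is wrong (hypothetical labels).
values = monte_carlo_step_values([True, True, False, True])
print(values)                      # high for steps 1-2, 0.0 from step 3 onward
print(process_reward(values))      # 0.0, and the values show where things went wrong
print(outcome_reward("17", "18"))  # 0.0, but with no indication of which step failed
```

The contrast is the point: both signals reject the solution, but only the step values localize the error, which is what makes process rewards useful for guiding search and training.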

Reasoning Efficiency at Inference Time

Explicit reasoning processes, while powerful, typically entail higher computational costs due to iterative, token-intensive inference. Addressing inference efficiency without compromising reasoning accuracy is essential:

Sketch-of-Thought (SoT): A Cognitive-Inspired Approach

The Sketch-of-Thought (SoT) framework addresses computational efficiency by mirroring human cognitive shortcuts and minimizing verbosity in intermediate reasoning steps. It leverages three distinct cognitive paradigms optimized for specific tasks, significantly reducing token usage:

- Conceptual Chaining efficiently compresses commonsense and multi-hop reasoning into concise logical sequences.

- Chunked Symbolism employs structured symbolic representations, condensing mathematical and computational tasks into compact notation.

- Expert Lexicons utilize specialized domain-specific shorthand and notation, ideal for specialized fields such as medicine, science, or engineering.
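To make the token savings concrete, the snippet below compares an invented verbose chain of thought with a Chunked Symbolism sketch of the same calculation. Whitespace splitting is only a rough proxy for real tokenizer counts, and the example text is illustrative rather than taken from the SoT work.

```python
verbose_cot = (
    "First, we know the train travels at 60 kilometers per hour. "
    "It travels for 2.5 hours. To find the distance, we multiply the speed "
    "by the time, so 60 times 2.5 equals 150. Therefore the distance is 150 kilometers."
)
# Chunked Symbolism: the same reasoning as compact variables and equations.
chunked_symbolism = "v = 60 km/h; t = 2.5 h; d = v*t = 60*2.5 = 150 km"


def approx_tokens(text):
    # Crude whitespace proxy; real counts depend on the model's tokenizer.
    return len(text.split())


saving = 1 - approx_tokens(chunked_symbolism) / approx_tokens(verbose_cot)
print(f"{approx_tokens(verbose_cot)} vs {approx_tokens(chunked_symbolism)} tokens ({saving:.0%} fewer)")
```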

Adaptive Paradigm Selection through Routing Models

Deploying reasoning models effectively across diverse tasks requires dynamically selecting the most suitable reasoning paradigm. Lightweight auxiliary routing models analyze task characteristics and automatically select the most efficient reasoning approach, improving computational efficiency while maintaining accuracy. This adaptive mechanism helps reasoning models perform well across varied tasks instead of applying one paradigm everywhere.
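A minimal routing sketch, assuming keyword rules as a stand-in for the small trained classifier such a router would normally be: it inspects the question, picks one of the three SoT paradigms, and prepends a paradigm-specific instruction. The prompt strings, domain-term list, and function names are hypothetical.

```python
import re

PARADIGM_PROMPTS = {
    # Hypothetical instruction snippets, one per SoT paradigm.
    "conceptual_chaining": "Link key concepts with arrows; no full sentences.",
    "chunked_symbolism": "Write variables and equations only; one line per step.",
    "expert_lexicons": "Use standard domain abbreviations and notation.",
}

DOMAIN_TERMS = {"mg", "dose", "patient", "ecg", "torque", "voltage", "enzyme"}


def route(question):
    """Keyword heuristic standing in for a trained routing classifier."""
    text = question.lower()
    words = set(re.findall(r"[a-z]+", text))
    if words & DOMAIN_TERMS:
        return "expert_lexicons"
    if re.search(r"\d", text) and re.search(r"[+\-*/=%]|how many|how much|total", text):
        return "chunked_symbolism"
    return "conceptual_chaining"


def build_prompt(question):
    paradigm = route(question)
    return paradigm, f"{PARADIGM_PROMPTS[paradigm]}\n\nQ: {question}\nA:"


print(build_prompt("If Tom has 3 apples and buys 4 more, how many does he have?"))
```

Keeping the router separate from the reasoning model means it can be retrained or replaced cheaply as task mixes change.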

Technical Challenges and Best Practices

Despite significant advancements, building effective custom reasoning models involves addressing critical technical challenges:

- Data Quality and Generation: High-quality reasoning datasets are vital yet challenging to produce manually. Leveraging automated data augmentation and verification using existing LLMs is essential to scale the data production process economically.

- Managing Computational Costs: Explicit reasoning methods, such as MCTS or Graph of Thoughts, inherently require more computational resources. Effective management strategies include token-efficient prompting techniques, adaptive inference strategies, and hardware optimizations to minimize computational expenses while maintaining reasoning accuracy.
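One common way to scale data production is rejection sampling: sample several candidate solutions per problem and keep only those whose final answer passes an automatic check. In the sketch below, `sample_solution` is a hypothetical stub standing in for an LLM call, and the problems are synthetic arithmetic so the reference answer is known exactly.

```python
import random
import re


def make_problem(rng):
    a, b, c = rng.randint(2, 9), rng.randint(2, 9), rng.randint(2, 9)
    return f"What is {a} * ({b} + {c})?", a * (b + c)


def sample_solution(question, rng):
    """Hypothetical stand-in for an LLM call that returns (reasoning_steps, final_answer).
    It makes an arithmetic slip 30% of the time to mimic imperfect generations."""
    a, b, c = map(int, re.findall(r"\d+", question))
    s = b + c
    if rng.random() < 0.3:
        s += 1  # simulated faulty reasoning step
    return [f"{b} + {c} = {s}", f"{a} * {s} = {a * s}"], a * s


def generate_verified_dataset(n_problems, samples_per_problem=4, seed=0):
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_problems):
        question, reference = make_problem(rng)
        for _ in range(samples_per_problem):
            steps, answer = sample_solution(question, rng)
            if answer == reference:  # verification: keep only traces whose answer checks out
                dataset.append({"question": question, "steps": steps, "answer": answer})
                break  # one verified trace per problem is enough for this sketch
    return dataset


data = generate_verified_dataset(50)
print(len(data), data[0])
```

Checking only the final answer can still admit traces whose intermediate steps are flawed but happen to land on the right result. That cannot occur in this toy setup, but with real model outputs, step-level verification tightens the filter.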

In summary, building and optimizing custom reasoning models requires a balanced integration of advanced reasoning architectures, targeted fine-tuning, efficient inference strategies, and rigorous evaluation frameworks. Following these practices helps produce robust, reliable reasoning models capable of handling sophisticated tasks across diverse problem spaces.

Evaluating and Deploying Custom Reasoning Models

Accurate evaluation and practical deployment represent two critical yet challenging stages in the lifecycle of custom reasoning models. Traditional benchmarks frequently fail to differentiate superficial pattern matching from genuine reasoning capabilities, requiring more robust and nuanced evaluation methods. Simultaneously, deployment decisions must account for computational efficiency, security, and flexibility to ensure reasoning models remain practical and effective in real-world scenarios.

Limitations of Traditional Benchmarks

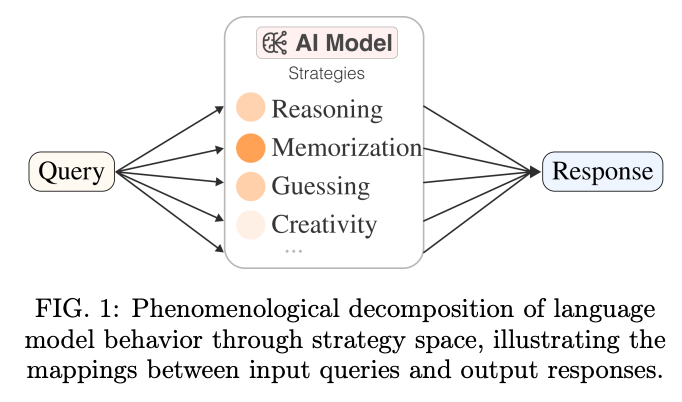

Common reasoning benchmarks such as GSM8K, GPQA, and BIG-bench, while popular, primarily measure aggregate accuracy or F1 scores, which can mask underlying model behavior. They typically treat model reasoning as a "black box" and do not capture whether the model performs genuine logical deduction or relies heavily on memorization and statistical heuristics. For instance, minor reformulations of benchmark tasks can dramatically change model performance, suggesting memorization rather than robust reasoning.
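The effect of minor reformulations can be simulated with a toy experiment: a "memorizer" that has seen the benchmark verbatim (simulating test-set contamination) and a "reasoner" that actually computes the answer are scored on the original items and on copies whose numbers have been changed. The benchmark template and both models are invented for illustration.

```python
import random
import re


def make_item(a, b):
    return f"Sam has {a} marbles and finds {b} more. How many marbles does Sam have?", a + b


def perturb(question, rng):
    # Replace every integer with a fresh value; the required reasoning is unchanged.
    new_q = re.sub(r"\d+", lambda m: str(rng.randint(10, 99)), question)
    a, b = map(int, re.findall(r"\d+", new_q))
    return new_q, a + b  # ground truth recomputed for this toy addition template


def make_memorizer(train_items):
    table = dict(train_items)
    def model(question):
        return table.get(question, 0)  # fixed fallback guess on unseen wording
    return model


def reasoner(question):
    a, b = map(int, re.findall(r"\d+", question))
    return a + b


def accuracy(model, items):
    return sum(model(q) == ans for q, ans in items) / len(items)


rng = random.Random(0)
benchmark = [make_item(rng.randint(1, 9), rng.randint(1, 9)) for _ in range(20)]
perturbed = [perturb(q, rng) for q, _ in benchmark]
memorizer = make_memorizer(benchmark)  # has "seen" the test set
for name, model in [("memorizer", memorizer), ("reasoner", reasoner)]:
    print(name, accuracy(model, benchmark), accuracy(model, perturbed))
```

On the original items the two models are indistinguishable; only the perturbed copies reveal which one is reasoning.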

Consequently, a more refined evaluation approach is necessary, one that explicitly assesses reasoning depth, consistency, and robustness rather than relying solely on traditional accuracy metrics.

Advanced Evaluation Metrics: PMM and ITC Analysis

Two promising advanced evaluation techniques have emerged to provide more insightful analyses of reasoning models:

- Probabilistic Mixture Models (PMM): PMM decomposes model predictions into three distinct cognitive strategies (memorization, reasoning, and guessing). This framework explicitly quantifies how much genuine reasoning a model performs versus how much it relies on superficial cues, offering nuanced insight into how models make decisions under various conditions and perturbations.

- Information-Theoretic Consistency (ITC) Analysis: ITC analysis quantifies the relationship between model confidence, reasoning strategy selection, and uncertainty. By systematically perturbing inputs and evaluating model responses, ITC helps establish quantitative bounds on decision-making consistency and reliability, crucial for deploying reasoning models in sensitive, high-stakes applications.
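The idea behind PMM can be sketched as a generic three-component mixture. Each item is scored on its original and on a perturbed version, each strategy is assumed to have fixed probabilities of getting those right, and expectation-maximization (EM) estimates how much of the model's behavior each strategy explains. The per-strategy probabilities are assumptions, and this is not the exact PMM or ITC formulation from any particular paper; the entropy helper is only a simple consistency proxy in the same information-theoretic spirit.

```python
import math
from collections import Counter

# Assumed probabilities of answering (original, perturbed) correctly under each strategy.
STRATEGIES = {
    "memorization": (0.95, 0.05),
    "reasoning": (0.90, 0.90),
    "guessing": (0.25, 0.25),
}


def likelihood(obs, probs):
    # Correctness on the two versions is treated as independent given the strategy.
    (c_orig, c_pert), (p_orig, p_pert) = obs, probs
    return (p_orig if c_orig else 1 - p_orig) * (p_pert if c_pert else 1 - p_pert)


def fit_mixture_weights(observations, iters=200):
    names = list(STRATEGIES)
    weights = {k: 1 / len(names) for k in names}
    for _ in range(iters):
        totals = {k: 0.0 for k in names}
        for obs in observations:
            joint = {k: weights[k] * likelihood(obs, STRATEGIES[k]) for k in names}
            z = sum(joint.values())
            for k in names:
                totals[k] += joint[k] / z  # E-step: responsibility of strategy k
        weights = {k: totals[k] / len(observations) for k in names}  # M-step
    return weights


def answer_entropy(answers):
    """Shannon entropy (bits) of answers to perturbed variants of one question; 0 = fully consistent."""
    n = len(answers)
    return -sum(c / n * math.log2(c / n) for c in Counter(answers).values())


# Hypothetical evaluation log: (correct on original, correct on perturbed) per item.
obs = [(1, 1)] * 60 + [(1, 0)] * 30 + [(0, 0)] * 10
print(fit_mixture_weights(obs))
print(answer_entropy(["B"] * 8), answer_entropy(["A", "B", "A", "B"]))
```

Items answered correctly only in their original form push weight toward memorization, which a single accuracy number would hide.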

Together, these metrics represent a sophisticated shift towards genuinely understanding and quantifying reasoning capabilities beyond superficial accuracy assessments, enhancing trust and interpretability of deployed reasoning models.

Practical Deployment Considerations

Deploying custom reasoning models effectively involves strategic decisions around infrastructure, computational efficiency, and data security:

- Cloud vs. On-Premises Deployments: Cloud deployments offer scalability, easier management, and access to advanced computational resources (GPUs, TPUs, etc.). However, on-premises deployment often provides greater control, privacy assurances, and potentially lower long-term costs for large-scale or security-sensitive operations.

- Security and Privacy: Reasoning models frequently handle sensitive information. Robust data encryption, secure inference pipelines, and compliance with privacy regulations (GDPR, HIPAA) are critical. Data security measures must ensure model training and inference remain safe from unauthorized access, particularly in sensitive sectors such as healthcare, finance, and governance.

- Computational Efficiency and Cost Management: Due to their iterative reasoning processes, explicit reasoning models typically require significant computational resources. Techniques such as token-efficient prompting (e.g., Sketch-of-Thought), quantization, and parallelized inference can significantly reduce deployment costs while maintaining high performance.
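To see why the length of the reasoning trace dominates serving cost, here is a back-of-the-envelope estimator. The prices, traffic, and token counts are hypothetical placeholders, and reasoning tokens are assumed to cost the same as output tokens because they are generated the same way.

```python
def monthly_cost(queries_per_day, input_tokens, reasoning_tokens, output_tokens,
                 price_in_per_1k, price_out_per_1k, days=30):
    """Rough serving-cost estimate; reasoning tokens are priced like output tokens (assumption)."""
    per_query = (input_tokens / 1000) * price_in_per_1k
    per_query += ((reasoning_tokens + output_tokens) / 1000) * price_out_per_1k
    return per_query * queries_per_day * days


# Hypothetical workload: same traffic, verbose vs. compressed reasoning traces.
verbose = monthly_cost(10_000, 300, 1500, 100, 0.001, 0.004)
compact = monthly_cost(10_000, 300, 500, 100, 0.001, 0.004)
print(f"verbose: {verbose:.2f}, compact: {compact:.2f}")
```

Because input and final-answer tokens are unchanged, cutting reasoning tokens shrinks the dominant term directly; quantization and batching then lower the per-token price itself.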

Future Directions

Custom reasoning models represent a significant evolution beyond standard large language models, enabling explicit, structured, multi-step reasoning to address complex, nuanced problems effectively. By combining deliberate reasoning architectures (such as Monte Carlo Tree Search, Tree of Thoughts, and Graph of Thoughts) with targeted fine-tuning strategies and reinforcement learning optimizations, developers can build sophisticated models capable of robust, explainable inference.

Future advancements in this domain will likely focus on automating fine-tuning processes, leveraging more efficient autonomous reinforcement learning methods, and increasingly exploring hybrid architectures combining explicit reasoning structures with intuitive pattern-matching capabilities. Moreover, integrating multi-modal capabilities will broaden reasoning models' applicability across diverse contexts, making these advanced AI systems even more versatile, powerful, and indispensable in solving real-world problems.

References: