Improving ROI: ML & Generative AI Integration Strategies

Machine learning and generative AI offer substantial potential, but high costs and system complexity make a positive ROI hard to achieve. This article explores how strategic integration can maximize value, and how Prem AI provides tools to bridge the gap.

High Costs and Complexity of Machine Learning (ML)

Machine Learning (ML) holds immense promise for transforming industries, but beneath its appealing exterior lies significant complexity. The costs associated with developing, deploying, and maintaining ML systems are often higher than anticipated, challenging even the most resourceful organizations.

The True Complexity of ML Systems

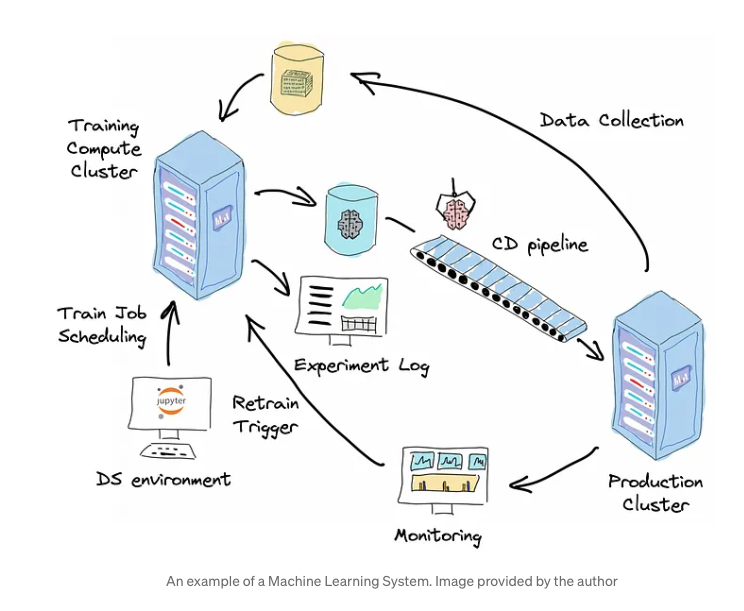

While developing an ML model might seem straightforward, integrating it into a fully operational system introduces significant challenges. Google researchers have described this problem as "technical debt" in ML systems (Sculley et al., "Hidden Technical Debt in Machine Learning Systems"): hidden costs that accumulate over time because of decisions made during development. Unlike traditional software, where components can often be kept modular and predictable, ML systems involve intricate interdependencies between data pipelines, model training, deployment mechanisms, and real-time performance monitoring.

Technical debt in ML can manifest in various ways:

- Code Entanglement: Unlike standard software engineering, where functions and modules are relatively isolated, ML code often intertwines with data processing logic. This makes it harder to update one component without affecting others.

- Pipeline Complexity: Data pipelines must be robust to handle changes in incoming data types or formats. Even minor shifts in data can necessitate reworking substantial parts of the pipeline, adding to both time and financial costs.
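One inexpensive guard against the format shifts described above is to validate each incoming record against an explicit schema before it reaches the model. The sketch below is only an illustration: the field names and the `EXPECTED_SCHEMA` mapping are hypothetical, and a production pipeline would more likely use a dedicated validation library.

```python
from typing import Any

# Hypothetical schema for illustration: field name -> expected Python type.
EXPECTED_SCHEMA: dict[str, type] = {
    "user_id": int,
    "amount": float,
    "country": str,
}


def validate_record(
    record: dict[str, Any],
    schema: dict[str, type] = EXPECTED_SCHEMA,
) -> list[str]:
    """Return a list of problems; an empty list means the record conforms."""
    problems: list[str] = []
    for field, expected in schema.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    # Fields the schema does not know about often signal an upstream change.
    for field in sorted(record.keys() - schema.keys()):
        problems.append(f"unexpected field: {field}")
    return problems


if __name__ == "__main__":
    print(validate_record({"user_id": "42", "amount": 9.99}))
```

Rejecting or quarantining records that fail this check keeps a silent upstream change from turning into a silent model failure.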

Data Dependency and Its Impacts

One of the most distinctive features of ML systems is their reliance on data. The quality and stability of input data play crucial roles in the performance of these models. Unlike rule-based systems that follow pre-defined logic, ML models can become ineffective if there is a shift in data distribution—an issue often referred to as "data drift." When data changes, models may produce outputs that are no longer valid or reliable, necessitating re-training or fine-tuning.

This dependency can lead to:

- Increased Monitoring Needs: Continuous data monitoring is required to identify shifts that could degrade model performance.

- Resource Allocation: Regular re-training of models consumes significant computational and human resources, contributing to the ongoing costs.
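One common way to quantify the kind of distribution shift described above is the population stability index (PSI), which compares how a feature is spread across bins in a reference sample and in recent data. The following is a minimal sketch using only the standard library; the bin count and the 0.1 / 0.25 alert levels mentioned in the comments are widely used rules of thumb, not fixed standards, and the function name is chosen here for illustration.

```python
import math
from collections.abc import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
) -> float:
    """PSI between two samples, using equal-width bins over the reference range."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range are clamped to the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins (a simplification).
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


if __name__ == "__main__":
    baseline = [float(x) for x in range(100)]
    shifted = [x + 50.0 for x in baseline]
    # Rule of thumb: below 0.1 is stable, above 0.25 suggests significant drift.
    print(population_stability_index(baseline, shifted))
```

Running a check like this on a schedule turns "continuous data monitoring" into a concrete signal that can trigger re-training only when it is needed.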

Operational Challenges and Maintenance Costs

Deployment is just the beginning of an ML system’s lifecycle. Many organizations face significant post-deployment challenges due to inadequate system observability and data lineage tracking. Unlike traditional applications, ML systems are highly dynamic, and their performance can change as data inputs evolve or external conditions shift.

Typical operational challenges include:

- Model Degradation: Over time, even well-trained models can degrade if not properly monitored and updated. This degradation necessitates additional work to diagnose issues, collect new data, and re-train models.

- Reactive Maintenance: When problems arise unexpectedly, reactive troubleshooting can be time-consuming and costly. Without proactive system design that includes thorough data tracking and performance metrics, maintenance efforts often turn into fire-fighting scenarios that drain resources.
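A simple way to move from reactive to proactive maintenance is to track accuracy over a sliding window of recent predictions and raise a flag when it falls below an agreed level. The class below is a sketch under stated assumptions: the name `RollingAccuracyMonitor`, the window size, and the threshold are all illustrative, and it presumes that ground-truth labels eventually become available for comparison.

```python
from collections import deque
from typing import Any, Optional


class RollingAccuracyMonitor:
    """Tracks accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.outcomes: deque[bool] = deque(maxlen=window)
        self.threshold = threshold

    def record(self, prediction: Any, actual: Any) -> None:
        # Store only whether the prediction was correct.
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self) -> bool:
        # Alert only once the window is full, to avoid noisy early readings.
        if len(self.outcomes) < (self.outcomes.maxlen or 0):
            return False
        return self.accuracy() < self.threshold


if __name__ == "__main__":
    monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
    for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
        monitor.record(predicted, actual)
    print(monitor.accuracy(), monitor.needs_attention())
```

The same pattern works for any metric that can be computed per prediction, such as latency or a user feedback score.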

Comparison to Traditional Systems

Traditional software systems, while complex, are generally more deterministic and less sensitive to changes in the data they process. Rule-based systems behave predictably as long as the underlying rules and environment remain consistent. In contrast, ML systems are sensitive to variations in data and require more adaptive infrastructure. This difference explains why ML systems come with higher long-term costs and more demanding operational requirements.

Generative AI's Expense and ROI Challenges

Generative AI has captured the attention of industries with its potential to revolutionize creative processes, automate content generation, and enhance productivity. However, the journey from potential to practical value often reveals significant cost-related challenges.

Cost Implications of Generative AI

Implementing generative AI solutions can be financially demanding, not just in initial development but across the entire lifecycle. These models typically require extensive computational resources for training and inference, which can rapidly escalate expenses, particularly when models must be retrained or fine-tuned frequently to maintain performance. Additionally, high-quality training data comes at a price, adding to the overall cost of development.
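To make the inference side of these costs concrete, a rough monthly estimate can be derived from request volume, tokens per request, and per-token prices. The sketch below is a back-of-the-envelope calculation only: the function name is hypothetical, and the prices in the example are placeholders, not any provider's actual rates.

```python
def monthly_inference_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    days: int = 30,
) -> float:
    """Estimate inference spend for a token-priced model over `days` days."""
    per_request = (
        (input_tokens / 1000) * price_in_per_1k
        + (output_tokens / 1000) * price_out_per_1k
    )
    return requests_per_day * days * per_request


if __name__ == "__main__":
    # Placeholder prices in USD per 1,000 tokens, for illustration only.
    estimate = monthly_inference_cost(
        requests_per_day=10_000,
        input_tokens=500,
        output_tokens=200,
        price_in_per_1k=0.001,
        price_out_per_1k=0.002,
    )
    print(f"Estimated monthly inference cost: ${estimate:,.2f}")
```

Even a crude model like this shows how quickly costs scale with traffic and prompt length, which is why prompt size and request volume deserve attention before launch rather than after.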

The ROI Dilemma

While generative AI promises transformative capabilities, its business return on investment (ROI) can be limited if not strategically implemented. Without integration into a well-structured system, the standalone use of generative AI may lead to underwhelming results. For instance, deploying a generative model without considering how it scales or integrates with other systems can result in inefficiencies and wasted resources.

A comparative perspective on rule-based systems highlights that simpler architectures, though limited, often offer predictable maintenance and scalability at lower costs. This contrast underscores that the true value of generative AI lies in its strategic deployment within a comprehensive system that maximizes the capabilities of the model while maintaining sustainable costs.

Strategic Implementation for Value

To realize tangible business benefits, generative AI must be embedded within a larger system that supports its operation, scalability, and maintenance. This includes robust data pipelines, continuous monitoring, and integration with existing workflows. Without these components, generative AI risks becoming an isolated, expensive feature rather than a valuable part of the business ecosystem.

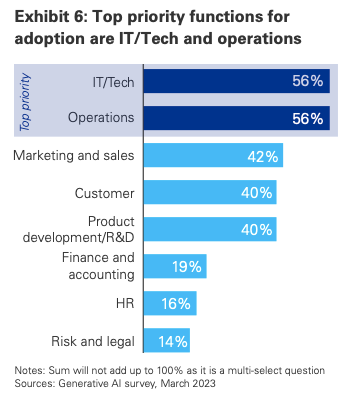

The focus on integrating generative AI into core business functions is reflected in the high priority given to IT and operations as primary areas for adoption. This strategic alignment is crucial to harnessing its potential effectively.

Lessons from Case Studies of Poor Planning

Implementing machine learning (ML) and generative AI technologies presents significant potential for business transformation, but the path is fraught with challenges when planning is inadequate. Case studies reveal that failure to account for comprehensive strategies and system integration often leads to suboptimal outcomes and resource waste.

Financial Strains and Strategic Misalignment

Generative AI's rapid development has drawn substantial investment, but it also carries substantial costs: companies like OpenAI face immense operational expenses, with annual spending estimated in the billions of dollars. This illustrates the economic strain of deploying generative AI without a robust strategy aligned with long-term business goals. The lesson is that technological prowess without a well-integrated strategy can lead to financial instability.

The Impact of Limited Preparation

A 2023 KPMG survey underlines the issues organizations face, such as the lack of skilled personnel and insufficient risk mitigation frameworks, which result in implementation delays and hinder the realization of generative AI’s potential. Companies often underestimate the importance of skilled talent and structured risk management, which are crucial to successful adoption. This leads to outcomes where investments in pilot projects don't scale effectively, failing to deliver substantial ROI.

Lessons from Enterprise Pitfalls

The challenges faced by enterprises underscore that generative AI cannot operate in isolation as a standalone feature. Stephanie Kirmer, in "The Economics of Generative AI," notes that successful business models often rely on AI not as a product in itself but as one part of an integrated system. When generative AI is treated as a one-off implementation rather than embedded within a cohesive operational strategy, companies miss out on its full potential, leading to inefficiencies and wasted resources.

How Proper System Integration Creates Value

Effective integration of machine learning (ML) and generative AI into broader business systems is key to unlocking their full potential. Successful examples showcase that strategic implementation, aligned with organizational goals, significantly enhances ROI and operational efficiency.

Enhancing Efficiency and Growth

Generative AI is increasingly driving value across industries through optimized workflows and new revenue channels. By enabling automation and rapid data analysis, AI facilitates strategic resource allocation and operational improvements. For instance, ACL Digital emphasizes that generative AI enhances productivity by automating repetitive tasks, thus allowing teams to focus on high-value activities like strategy and innovation.

Real-World Success Stories

A compelling example of strategic generative AI use is Northwestern Medicine’s application of a multimodal small language model. This AI system accelerated diagnostic processes by reviewing chest X-rays quickly, lifting radiologists’ productivity by 40% and enhancing patient outcomes. This success underscores the benefits of embedding AI within specialized operational frameworks to improve service quality and efficiency.

Another case is Duos Technologies' Railcar Inspection Portal, which uses AI to inspect trains at high speeds. The implementation boosted inspection performance and safety, demonstrating the importance of using AI in a targeted way to scale processes.

Strategies for Success

Effective integration involves a multi-faceted approach: focusing on alignment with strategic business objectives, ensuring the system is adaptable for continuous improvement, and incorporating robust data infrastructure. According to ACL Digital, adopting generative AI with a focus on efficiency and risk management lays the foundation for sustainable growth. Additionally, consistent monitoring and updates are vital to keeping AI solutions aligned with evolving operational needs.

To evaluate the true potential of generative AI, companies need to approach the technology with a clear strategy focused on measurable ROI. Successful integration of generative AI yields significant benefits, including improved productivity, enhanced customer experiences, and streamlined operations.

Demonstrable Returns on Investment

A study commissioned by Microsoft found that for every $1 invested in AI, companies are seeing an average return of $3.50, indicating substantial financial gains when AI is implemented strategically. This ROI stems from generative AI’s ability to optimize business processes, automate routine tasks, and support data-driven decision-making.
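When applying a headline figure like this to an internal business case, it helps to be explicit about the arithmetic. The sketch below assumes the 3.5x figure is a gross return per dollar invested, which the source does not spell out; under that assumption the net ROI is 250%. The function names and the payback example figures are illustrative.

```python
import math


def net_roi(total_return: float, investment: float) -> float:
    """Net ROI as a fraction: (return - investment) / investment."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (total_return - investment) / investment


def payback_months(investment: float, monthly_net_benefit: float) -> float:
    """Months until cumulative net benefit covers the initial investment."""
    if monthly_net_benefit <= 0:
        return math.inf  # The investment never pays back.
    return investment / monthly_net_benefit


if __name__ == "__main__":
    # Assumes the 3.5 return per 1.0 invested is gross, not net.
    print(f"Net ROI: {net_roi(3.5, 1.0):.0%}")
    # Hypothetical project: 120,000 upfront, 10,000 net benefit per month.
    print(f"Payback: {payback_months(120_000, 10_000):.1f} months")
```

Tracking both numbers matters because a project with an attractive multi-year ROI can still have a payback period that is too long for the budget cycle funding it.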

Productivity and Cost Savings

Generative AI implementations have proven effective in enhancing productivity and cutting costs. For example, enterprises leveraging AI for automation and predictive maintenance have reported reduced operational expenses and improved asset management. These benefits highlight AI’s role in reallocating resources more efficiently, which, in turn, boosts overall business performance.

The Path to Scalable Solutions

Achieving long-term ROI requires that generative AI systems are not only integrated but scalable. As noted in the Cost-Benefit Analysis of Generative AI, enterprises must ensure sustainable adoption practices to align with their strategic goals. This involves training staff, continuous system evaluation, and adapting to evolving technological capabilities.

How Prem AI Bridges the Gap

The journey toward maximizing generative AI's value is complex, involving the integration of robust tools and strategic foresight. Prem AI positions itself as a vital solution to bridge the gap between powerful generative AI capabilities and effective system-building, providing a holistic platform that addresses key challenges developers face.

Comprehensive Toolset for System Integration

Prem AI offers a suite of advanced features tailored to simplify the integration and optimization of generative AI into business workflows. These include Prem Playground, where users can compare different large language models on response quality, cost, speed, and accuracy, and Prem Gym for autonomous fine-tuning, which helps models evolve with user data for better performance.
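Comparing models across quality, cost, and speed ultimately means collapsing several measurements into one ranking. The sketch below shows one generic way to do that with a weighted score. It is not Prem AI's method or API: the `ModelResult` fields, the weights, and the example numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ModelResult:
    name: str
    quality: float      # 0..1, higher is better (e.g. from human ratings)
    cost_per_1k: float  # USD per 1,000 tokens, lower is better
    latency_s: float    # seconds per response, lower is better


def rank_models(
    results: list[ModelResult],
    w_quality: float = 0.6,
    w_cost: float = 0.2,
    w_latency: float = 0.2,
) -> list[ModelResult]:
    """Rank models by a weighted score; cost and latency are scaled to 0..1."""
    max_cost = max(r.cost_per_1k for r in results)
    max_latency = max(r.latency_s for r in results)

    def score(r: ModelResult) -> float:
        # The most expensive or slowest model scores 0 on that dimension.
        cost_score = 1 - r.cost_per_1k / max_cost if max_cost else 1.0
        latency_score = 1 - r.latency_s / max_latency if max_latency else 1.0
        return w_quality * r.quality + w_cost * cost_score + w_latency * latency_score

    return sorted(results, key=score, reverse=True)


if __name__ == "__main__":
    candidates = [
        ModelResult("large-model", quality=0.9, cost_per_1k=0.03, latency_s=2.0),
        ModelResult("small-model", quality=0.8, cost_per_1k=0.003, latency_s=0.5),
    ]
    print([m.name for m in rank_models(candidates)])
```

Changing the weights makes the trade-off explicit: a team that cares only about quality will pick a different model than one optimizing for cost per request.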

The Launchpad feature facilitates seamless deployment, allowing for final configuration adjustments that align with specific project needs. This end-to-end approach enables businesses to go beyond model deployment and build comprehensive AI-driven systems that deliver sustained value.

Focused on Systemic Solutions

Prem AI's commitment to providing SDKs and APIs for easy integration into existing technology stacks empowers organizations to incorporate AI with minimal disruption. The platform's real-time monitoring and tracing capabilities ensure continuous optimization, transparency, and adaptability—key factors for maintaining efficiency and reliability.

Moreover, the platform's support for Retrieval Augmented Generation (RAG) ensures that AI assistants can utilize domain-specific documents, delivering contextually accurate and relevant responses. This approach reinforces that true ROI from generative AI isn't limited to deploying a model but extends to crafting well-integrated systems that enhance decision-making, scalability, and productivity.
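The retrieval step at the heart of RAG can be illustrated without any external service. The sketch below ranks documents by bag-of-words cosine similarity and assembles a prompt from the best matches. It is a deliberately simplified stand-in: real systems typically use learned embeddings and a vector store, the function names are hypothetical, and nothing here reflects Prem AI's actual implementation.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Assemble a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    docs = [
        "Refunds are processed within 14 days of a return request.",
        "Our office is closed on public holidays.",
        "Shipping takes three to five business days.",
    ]
    print(build_prompt("How long do refunds take?", docs, k=1))
```

The resulting prompt would then be sent to whichever language model the system uses; grounding the answer in retrieved documents is what keeps responses tied to domain-specific content.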

Final Takeaway

In conclusion, while generative AI presents immense potential, its standalone deployment often falls short of achieving significant ROI. Prem AI bridges this gap by equipping businesses with an ecosystem designed for streamlined integration, continuous optimization, and strategic scalability. By focusing on building robust systems rather than isolated features, Prem AI empowers organizations to unlock the full potential of generative AI, fostering sustainable growth and competitive advantage.

References:

https://kpmg.com/kpmg-us/content/dam/kpmg/pdf/2023/generative-ai-survey.pdf