Prem AI Adds DeepSeek-V3.1 for Smarter Enterprise AI

PremAI now supports DeepSeek-V3.1, a hybrid MoE model with 128K context, smart routing, and benchmark gains, built for enterprise use with secure, ready-to-deploy APIs.

TL;DR: PremAI now supports DeepSeek-V3.1, the latest hybrid MoE model that combines speed and reasoning in one system. With 128K context, smarter expert routing (~37B active params per token), and benchmark gains across math and coding, V3.1 is built for real-world enterprise workloads. It is now production-ready on PremAI with secure APIs, evaluation, and deployment.

Introducing DeepSeek-V3.1

DeepSeek has introduced V3.1, the newest version in its model family. Compared to earlier releases, V3.1 extends the context window to 128K, improves MoE routing for better efficiency, and delivers higher scores on math and coding benchmarks. It is designed for flexibility and production readiness, which makes it a strong fit for enterprise workloads.

What’s New & Improved in DeepSeek-V3.1

DeepSeek-V3.1 advances both the core model and its enterprise usability on PremAI:

- Smarter MoE Efficiency → 671B total params with ~37B active per token, cutting compute cost without losing capacity.

- 128K Context → 4× longer than previous versions, powering long-doc and compliance workflows.

- Dual-Mode Intelligence → Switch between fast “stream” mode and deep “reason” mode in a single model.

- Stronger Benchmarks → Double-digit gains on AIME, MATH-500, and LiveCodeBench vs earlier DeepSeek versions.

- Enterprise-Grade Support → Now production-ready on PremAI with secure inference APIs, evaluation workflows, and agent orchestration.

👉 In short: V3.1 blends efficiency, reasoning, and enterprise reliability, making it the most versatile DeepSeek yet.

Hybrid Intelligence (Modes)

DeepSeek-V3.1 offers two modes in a single model, so you can pick the one that fits each request:

- Stream Mode (Non-Thinking): Ideal for routine tasks such as quick completions, simple queries, and lightweight tool calls.

- Reason Mode (Thinking): Engages step-by-step logic for complex workflows such as debugging, long-form reasoning, or multi-step planning.

Built on a 671B parameter Mixture-of-Experts foundation with dynamic activation of just 37B parameters per token and context lengths up to 128K, DeepSeek-V3.1 is designed to handle projects ranging from single-turn chats to long-form document workflows efficiently.
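As a quick back-of-the-envelope check on those figures, the share of parameters active for each token is simple arithmetic. The sketch below uses only the 671B and ~37B numbers quoted in this post; the constant and function names are illustrative.

```python
# Parameter counts quoted in this post (in billions).
TOTAL_PARAMS_B = 671
ACTIVE_PARAMS_B = 37  # approximate, per token


def active_fraction(active_b: float, total_b: float) -> float:
    """Return the fraction of parameters used for each token."""
    return active_b / total_b


fraction = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
print(f"Active per token: {fraction:.1%}")  # → Active per token: 5.5%
```

In other words, roughly one parameter in eighteen does work on any given token, which is where the compute savings of the MoE design come from.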

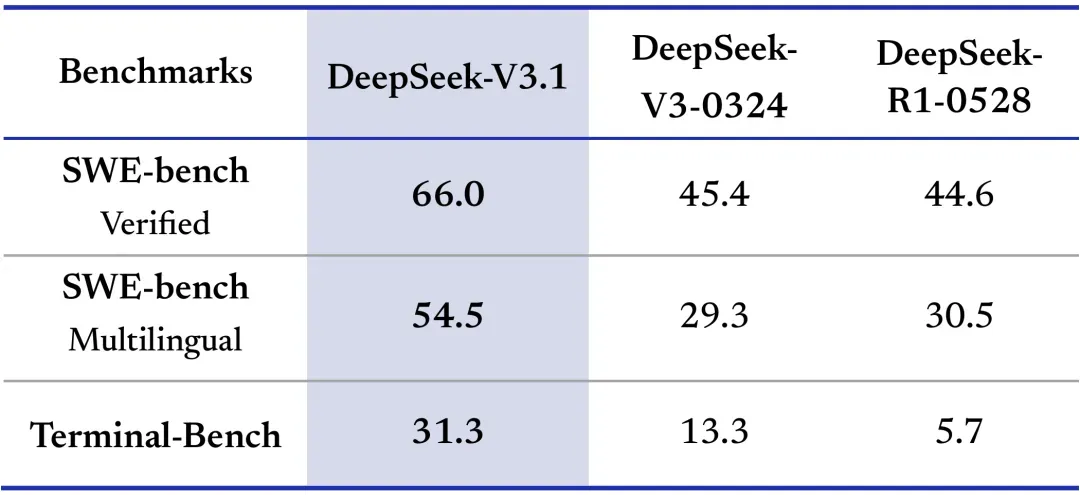

DeepSeek-R1 vs. V3.1

Both DeepSeek‑R1 and V3.1 serve compelling, specialised enterprise needs:

DeepSeek‑R1 excels at structured reasoning:

- Scores highly on math and logic benchmarks (e.g., 97.3% on MATH-500, 79.8% on AIME).

- Offers greater precision and transparent reasoning, making it well-suited for diagnostics, coding tasks, and critical decision-making.

DeepSeek‑V3.1 excels at versatility and scalability:

- Executes fast, adaptive workflows across tasks, from chat to tool execution

- Built for real-time enterprise agents with mixed-response needs.

| Model | Best For | Strength |
|---|---|---|
| DeepSeek-R1 | Deep logic, step-by-step reasoning | Precision, structured outputs |
| DeepSeek-V3.1 | Speed + reasoning in one unified system | Agile, adaptable, MoE-powered |

PSA: Many enterprise workflows require both agility and depth - making V3.1 the smarter single-model solution. But in high-stakes logic work, teams may prefer pairing V3.1 with R1 for full control and accuracy.
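One way to picture the pairing is a small routing function that sends flagged high-stakes logic work to R1 and everything else to V3.1. This is a minimal sketch, not a PremAI feature: the `Task` class, the `high_stakes` flag, and the `"deepseek-r1"` model ID are all hypothetical, so check the model list in your own project for the real identifier.

```python
from dataclasses import dataclass

DEFAULT_MODEL = "deepseek-v3.1"  # model name used in the Getting Started example
PRECISION_MODEL = "deepseek-r1"  # hypothetical ID; confirm against your model list


@dataclass
class Task:
    prompt: str
    high_stakes: bool = False  # set by the caller, e.g. for audit or proof work


def pick_model(task: Task) -> str:
    """Send flagged high-stakes logic work to R1, everything else to V3.1."""
    return PRECISION_MODEL if task.high_stakes else DEFAULT_MODEL


print(pick_model(Task("Summarise this support ticket.")))
print(pick_model(Task("Verify this settlement calculation.", high_stakes=True)))
```

Keeping the decision in one function means the rule can later be swapped for something richer (a classifier, a cost budget) without touching the calling code.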

Real-World Enterprise Applications

- Code Agents:
  - Stream: Generate APIs, create unit tests, fix syntax errors
  - Reason: Troubleshoot distributed failures, architect micro-service migrations, optimise schema redesigns

- Document & Compliance Workflows:
  - Stream: Extract key terms or classify contract types
  - Reason: Navigate multi-document regulatory checks, synthesise policy changes, and summarise emerging compliance risks

No need to ping multiple models - just swap the mode. That means seamless, intelligent automation, with depth where it matters.

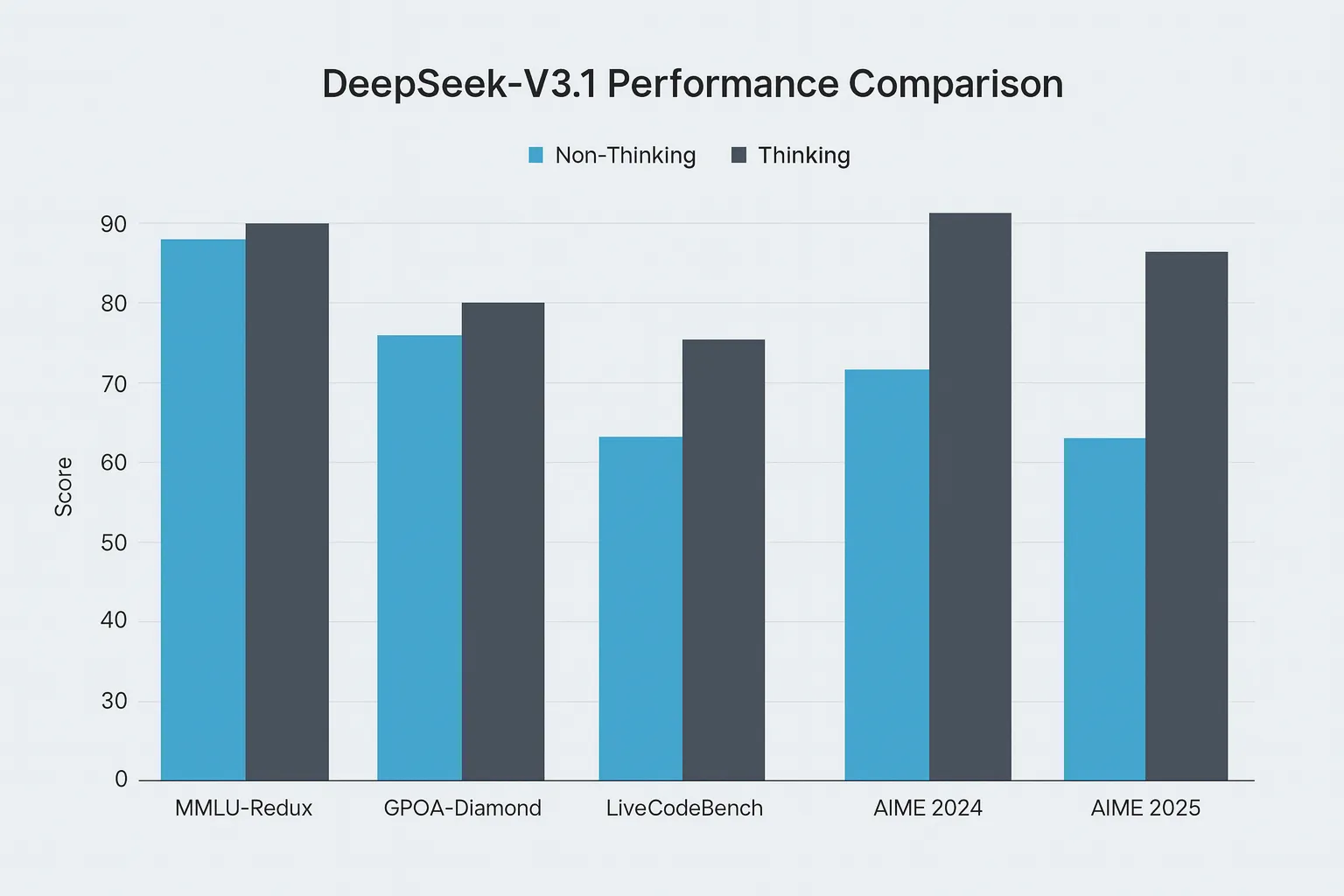

Performance Across Both Modes

The performance differences show where each mode excels. Non-thinking mode handles routine tasks efficiently, while thinking mode provides substantial improvements on complex problems requiring multi-step reasoning. Math and coding benchmarks show the largest gains: from 66.3% to 93.1% on AIME 2024 and from 56.4% to 74.8% on LiveCodeBench.
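To put those jumps in absolute terms, the snippet below computes the gain in percentage points for each benchmark. It uses only the scores quoted in this section; the names are illustrative.

```python
# Scores (percent) quoted in this section: (non-thinking, thinking).
SCORES = {
    "AIME 2024": (66.3, 93.1),
    "LiveCodeBench": (56.4, 74.8),
}


def gain_points(non_thinking: float, thinking: float) -> float:
    """Return the thinking-mode gain in percentage points, to one decimal."""
    return round(thinking - non_thinking, 1)


for name, (fast, deep) in SCORES.items():
    print(f"{name}: +{gain_points(fast, deep)} points")
# → AIME 2024: +26.8 points
# → LiveCodeBench: +18.4 points
```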

This hybrid approach eliminates the need to route between different specialised models, letting you choose the appropriate cognitive mode for your task complexity.

Getting Started

Integrating DeepSeek-V3.1 into your PremAI project is straightforward. First, ensure you have the PremAI Python SDK installed and your API key ready. (If you don't, check out the docs here: https://docs.premai.io/get-started/sdks)

    import os

    from premai import PremAI

    client = PremAI(
        api_key=os.environ.get("PREMAI_API_KEY"),  # Get your API key from /apiKeys
    )

    response = client.chat.completions(
        messages=[{
            "role": "user",
            "content": "Write a one-sentence bedtime story about a unicorn."
        }],
        model="deepseek-v3.1",  # Choose from available models
    )

    print(response.choices[0].message.content)

You can dynamically control the model's cognitive effort based on the complexity of each query, allowing you to optimize for both speed and depth within a single, unified integration.
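As a rough illustration of choosing effort per query, the sketch below picks a mode label from simple signals in the query text. Everything here is an assumption for illustration: the `choose_mode` helper, the hint words, and the word-count threshold are hypothetical, and how the chosen mode is actually passed to the API is not covered in this post, so consult the PremAI docs for that step.

```python
import re

# Words that hint at multi-step work; purely illustrative.
REASON_HINTS = {"debug", "plan", "prove", "architect", "migrate", "troubleshoot"}


def choose_mode(query: str, max_stream_words: int = 40) -> str:
    """Return "reason" for long or multi-step queries, else "stream"."""
    words = re.findall(r"[a-z]+", query.lower())
    if len(words) > max_stream_words:
        return "reason"  # long prompts usually need more deliberate handling
    if REASON_HINTS & set(words):
        return "reason"  # an explicit hint word was found
    return "stream"


print(choose_mode("Classify this contract type."))
print(choose_mode("Debug the intermittent timeout in our payment service."))
```

A keyword heuristic like this is deliberately crude. In practice teams might replace it with a lightweight classifier or let the calling application set the mode explicitly.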

Check out the DeepSeek-V3.1 model card on Prem Studio for full specs and deployment details.

In summary: DeepSeek-V3.1 is a multi-faceted agent built to deploy across enterprises with intelligence and trust. And with PremAI powering it from model to monitoring, your teams get agility, sovereignty, and scale in one enterprise-ready package.

FAQ

Q: When should I use Stream vs. Reason mode?

A: Use Stream Mode for fast, routine tasks like quick completions or simple queries. Use Reason Mode for complex problems requiring step-by-step logic, such as debugging or multi-step planning.

Q: What is the key advantage of the 128K context?

A: It allows the model to process and understand exceptionally long documents in one go, ideal for legal, compliance, and technical documentation workflows.

Q: Can I use V3.1 and R1 together?

A: Yes. Use V3.1 as a versatile main model and route highly complex logic tasks to R1 for maximum precision.

Q: Is my data secure?

A: Yes. PremAI provides enterprise-grade security, ensuring full data compliance and privacy.

Want a walkthrough or early access? Reach out to us and let's build smarter, together.