SOC 2 Compliant AI Platform: What the Certification Misses About AI Security

Samsung leaked source code into ChatGPT in 20 days. SOC 2 wouldn't have caught it. Here's what enterprise buyers should actually verify when evaluating AI platforms.

Samsung allowed its semiconductor engineers to use ChatGPT in March 2023. Within 20 days, three separate employees had fed proprietary source code, chip yield data, and confidential meeting transcripts directly into the model. Under ChatGPT's consumer terms at the time, that data could be retained and used for model training. Samsung couldn't retrieve it.

The vendor those engineers were using was SOC 2 compliant.

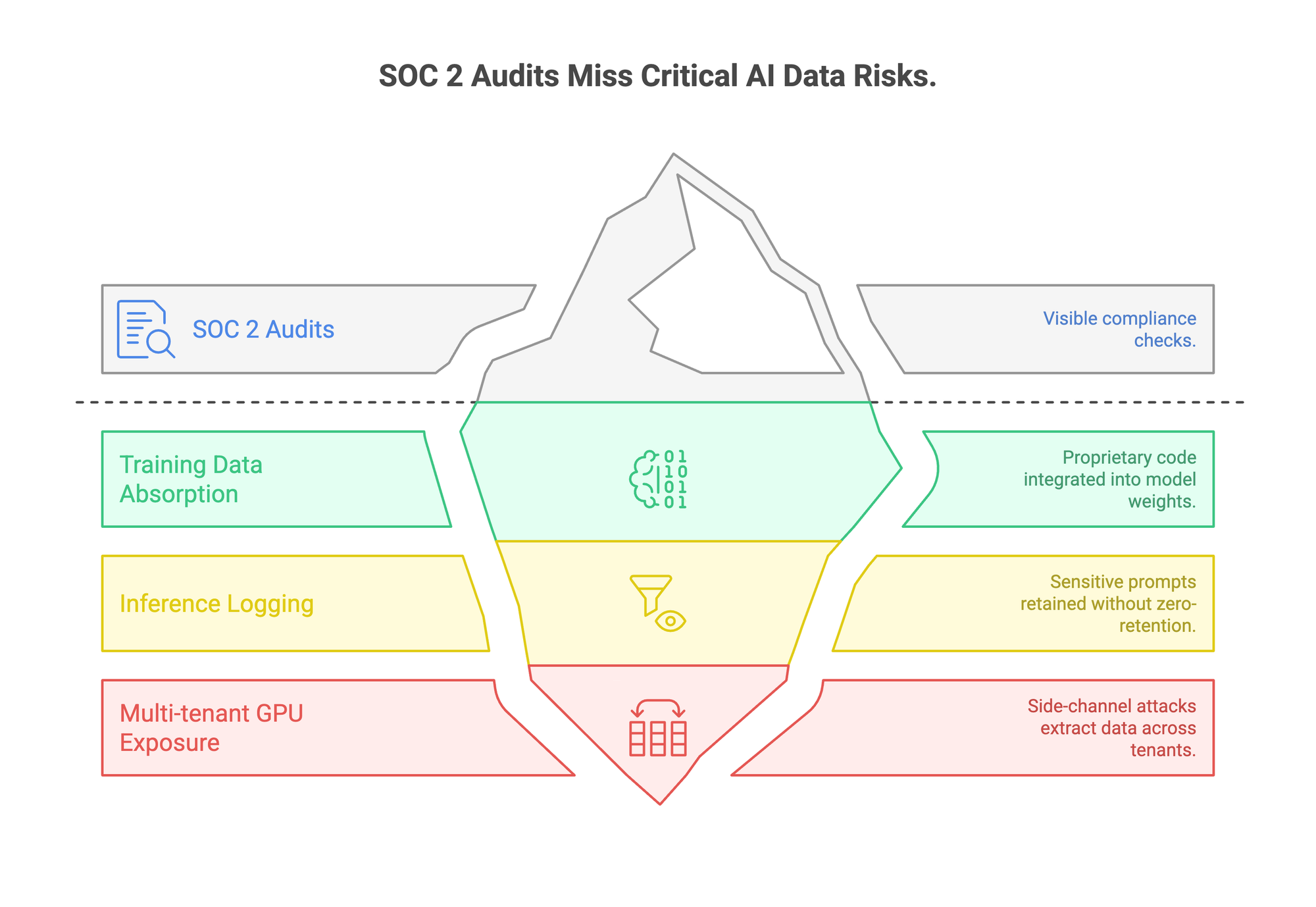

SOC 2 is a controls framework built for SaaS companies handling customer records. It checks whether a vendor has policies for access management, encryption, and monitoring. It was not designed for AI-specific risks like training data absorption, inference logging, or model weight exposure.

If you're evaluating AI platforms for enterprise use, SOC 2 should be the starting requirement on a much longer checklist. Here's what else belongs on it.

What SOC 2 Checks vs. What AI Platforms Actually Risk

SOC 2 evaluates five Trust Services Criteria: security, availability, processing integrity, confidentiality, and privacy. Each one matters, but each also has a blind spot when applied to AI infrastructure.

The gap between what SOC 2 audits and what AI platforms actually do with your data is significant. A few of these deserve a closer look.

Training data absorption.

When an employee sends a prompt to an AI platform, does that input train the model? This is the risk Samsung ran into. Engineers pasted proprietary code into ChatGPT, and under the consumer terms then in effect, that code was eligible for use as training data. SOC 2 checks whether a vendor has data protection policies. It doesn't check whether your data gets folded into model weights, where it becomes impossible to isolate or delete.

A 2023 Cyberhaven study found that 3.1% of workers had put confidential company data into ChatGPT. That was within months of launch. By 2025, IBM reported that one in five organizations experienced a breach tied to shadow AI, with employees pasting data into unsanctioned tools. The problem has scaled with adoption.

Inference logging.

Most AI platforms log prompts and responses for debugging and quality improvement. SOC 2 checks whether those logs are encrypted and access-controlled. But for a law firm summarizing privileged documents or a hospital processing patient records through an AI tool, the prompt itself is the sensitive data. The question isn't whether the logs are protected. The question is whether they exist at all.

Some platforms operate on a zero-retention architecture where data is processed and immediately discarded. Others retain prompts for 30 days, 90 days, or indefinitely. SOC 2 doesn't distinguish between these approaches.

Multi-tenant GPU exposure.

Fine-tuned models typically run on shared GPU clusters. SOC 2 audits logical access controls between tenants, but GPU memory doesn't work like a database with row-level permissions. Academic research has demonstrated side-channel attacks that extract data across GPU tenants. The most direct mitigation is dedicated infrastructure, which SOC 2 doesn't require.

Platforms like Prem AI deploy fine-tuned models into isolated VPC or on-premise environments specifically to avoid this risk. Others offer shared multi-tenant inference as the default tier, with isolation available only on enterprise plans. During vendor evaluation, ask which tier you're actually getting.

The Breach Data Behind the Gaps

IBM's 2025 Cost of a Data Breach report examined AI-specific incidents for the first time. The numbers confirm what the Samsung incident suggested two years earlier.

Two things stand out. First, 97% of organizations that experienced an AI breach didn't have AI-specific access controls in place. SOC 2 compliance didn't close that gap.

Second, shadow AI is now a measurable cost multiplier. Moving employees onto private, self-hosted alternatives in place of uncontrolled public tools removes most of this exposure.

For teams in healthcare or finance where breach costs run highest, the gap between "SOC 2 compliant" and "actually secure for AI workloads" can represent millions in risk.

8 Questions to Ask Your AI Vendor Before You Sign

SOC 2 compliance tells you a vendor has passed an audit. These questions tell you whether the architecture behind that audit actually protects your data when AI is involved.

1. What happens to my data after inference?

Ask for the specific retention policy. "We take security seriously" is not an answer. You want to know: is the prompt logged? For how long? Can it be used for any purpose beyond delivering the response? Zero retention means the platform discards inputs immediately after processing. Some vendors offer this by default. Others only offer it on enterprise tiers. Get it in the contract.

2. Will my data ever train or improve shared models?

This needs to be in the data processing agreement, not buried in terms of service. OpenAI's enterprise tier does not train on customer data by default. Not every vendor makes that commitment. If your data touches model weights that other customers use, your IP is effectively shared.

3. Can I deploy in my own infrastructure?

A shared cloud environment with SOC 2 controls is still a shared environment. For regulated industries, VPC or on-premise deployment removes multi-tenancy risk entirely. Ask whether the vendor supports deployment into your AWS VPC, Azure tenant, or on-prem hardware. If the answer is "not yet," that's a meaningful limitation.

4. How do you handle PII in training data?

If you're fine-tuning models on customer support tickets, medical records, or transaction data, PII will be in your dataset. Ask whether the platform detects and redacts PII automatically before training begins. Automated dataset preparation should handle this before data ever touches model weights. If PII enters model parameters, you can't extract it later. That's a compliance liability with no technical fix.
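To make the idea of redacting before training concrete, here is a minimal sketch of a pre-training redaction pass. It is not any vendor's implementation. The three regex patterns and the function names `redact_pii` and `prepare_dataset` are illustrative assumptions, and a production pipeline would need far broader detection (names, addresses, record numbers) than simple patterns can offer.

```python
import re

# Illustrative patterns only (assumed for this sketch). Real PII detection
# needs much wider coverage and usually a dedicated detection tool.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact_pii(text: str) -> str:
    """Replace each pattern match with a typed placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def prepare_dataset(records: list[str]) -> list[str]:
    """Redact every record before it is handed to a fine-tuning job."""
    return [redact_pii(record) for record in records]


if __name__ == "__main__":
    tickets = [
        "Customer jane.doe@example.com called from 555-867-5309.",
        "SSN on file: 123-45-6789. Please update billing.",
    ]
    for line in prepare_dataset(tickets):
        print(line)
```

The point of the sketch is the ordering: redaction runs on the raw records, and only the redacted output is ever passed to training. When you ask a vendor about PII handling, ask where in their pipeline the equivalent step sits.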

5. Can I export my fine-tuned model?

If you invest in custom model training on a vendor's platform, can you take the model with you? Full portability means you can download model weights and run them on your own hardware using tools like vLLM or Ollama. Vendor lock-in on a model trained with your proprietary data is a risk that grows over time.

6. Where is my data physically processed and stored?

SOC 2 doesn't specify geography. A platform can be SOC 2 compliant and route your data through servers in any jurisdiction. For teams subject to GDPR, HIPAA, or sector-specific regulations, data residency is non-negotiable. Ask where inference runs, where fine-tuned model weights are stored, and whether you can restrict both to specific regions.

Jurisdictional choice also matters. Swiss data protection law (FADP) provides some of the strongest sovereignty protections available. A vendor headquartered in Switzerland operates under different legal constraints than one in a jurisdiction with broad government access provisions.

7. Do you offer cryptographic verification of data handling?

Some platforms now provide hardware-signed attestations proving that data wasn't retained or tampered with during processing. This shifts the trust model from "read the audit report and hope" to "verify it mathematically with each interaction." Prem AI, for example, generates cryptographic proofs through stateless architecture and hardware attestations. If a vendor doesn't offer verifiable proof, you're relying entirely on their word and an annual audit.

8. How do you evaluate model accuracy and reliability?

A SOC 2 audit doesn't test whether AI outputs are accurate, biased, or hallucinating. Ask about the vendor's evaluation framework. Can you run custom evaluation metrics? Can you compare models side by side before deploying to production? For enterprise use cases, evaluation reliability determines whether AI outputs are trustworthy enough to act on.
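A side-by-side comparison with a custom metric can be as simple as the sketch below. Everything here is an assumption for illustration: a "model" is any function that maps a prompt to an answer, `exact_match` stands in for whatever metric fits your use case, and the two stub models stand in for real API calls to the platforms you're comparing.

```python
from typing import Callable

# A "model" is any callable mapping a prompt to an answer. In practice this
# would wrap a call to each candidate platform (hypothetical here).
Model = Callable[[str], str]


def exact_match(prediction: str, expected: str) -> float:
    """Custom metric: 1.0 if answers match after normalization, else 0.0."""
    return float(prediction.strip().lower() == expected.strip().lower())


def evaluate(model: Model, cases: list[tuple[str, str]],
             metric: Callable[[str, str], float] = exact_match) -> float:
    """Average the metric over (prompt, expected_answer) pairs."""
    if not cases:
        raise ValueError("evaluation set is empty")
    return sum(metric(model(p), e) for p, e in cases) / len(cases)


def compare(models: dict[str, Model],
            cases: list[tuple[str, str]]) -> list[tuple[str, float]]:
    """Score every model on the same cases and return them best first."""
    scores = [(name, evaluate(m, cases)) for name, m in models.items()]
    return sorted(scores, key=lambda s: s[1], reverse=True)


if __name__ == "__main__":
    cases = [("q1", "Yes"), ("q2", "No"), ("q3", "Maybe")]
    # Stub models with canned answers, standing in for real platforms.
    model_a = lambda p: {"q1": "yes", "q2": "no", "q3": "maybe"}[p]
    model_b = lambda p: {"q1": "yes", "q2": "yes", "q3": "maybe"}[p]
    for name, score in compare({"model_a": model_a, "model_b": model_b}, cases):
        print(f"{name}: {score:.2f}")
```

What matters for vendor evaluation is whether the platform lets you do the equivalent with your own test cases and your own metric, rather than only reporting its own benchmark numbers.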

The Compliance Stack That Goes Beyond SOC 2

SOC 2 Type II is the baseline. For enterprise AI, compliance needs to be layered. No single certification covers the full surface area.

The architectural layer matters most and gets checked least. Policy says "we won't retain your data." Architecture makes it technically impossible. There's a meaningful difference between the two, and SOC 2 only evaluates the first.

Vendors like Prem AI stack SOC 2 + GDPR + HIPAA under Swiss FADP jurisdiction with stateless, zero-retention architecture. That combination addresses policy, legal, and technical layers simultaneously. During vendor evaluation, ask which layers your vendor covers and where they rely on policy alone.

A Quick Vendor Comparison Framework

When you're comparing AI platforms across compliance capabilities, this framework helps standardize the evaluation:

Print this out or put it in a spreadsheet. Run each vendor you're evaluating through it. The gaps show up fast.
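If a spreadsheet feels too manual, the same comparison can be scripted. The sketch below is one possible shape, not a standard: the criterion keys are shorthand for the eight questions in this article, and the `Vendor` class, its fields, and the example vendors are hypothetical.

```python
from dataclasses import dataclass, field

# Shorthand keys for the eight vendor questions in this article (assumed names).
CRITERIA = [
    "zero_retention",
    "no_training_on_customer_data",
    "self_hosted_deployment",
    "pii_redaction",
    "model_export",
    "data_residency_controls",
    "cryptographic_attestation",
    "evaluation_tooling",
]


@dataclass
class Vendor:
    name: str
    # True = verified in the contract or architecture.
    # Missing or False = treated as a gap.
    answers: dict[str, bool] = field(default_factory=dict)

    def gaps(self) -> list[str]:
        """Criteria the vendor has not verifiably satisfied."""
        return [c for c in CRITERIA if not self.answers.get(c, False)]

    def score(self) -> int:
        """Number of criteria satisfied, out of len(CRITERIA)."""
        return len(CRITERIA) - len(self.gaps())


if __name__ == "__main__":
    vendors = [
        Vendor("Vendor A", {c: True for c in CRITERIA}),
        Vendor("Vendor B", {"zero_retention": True, "model_export": True}),
    ]
    for v in sorted(vendors, key=lambda v: v.score(), reverse=True):
        print(f"{v.name}: {v.score()}/{len(CRITERIA)} gaps={v.gaps()}")
```

Treating an unanswered question as a gap is a deliberate choice in this sketch: a vendor that won't answer should not score better than one that answers "no."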

FAQ

Is SOC 2 legally required for AI platforms?

No. SOC 2 is a voluntary standard. But IBM's 2025 data shows 46% of enterprise software buyers prioritize security certifications during vendor evaluation. In practice, most procurement processes won't move forward without it.

What's the difference between SOC 2 Type I and Type II?

Type I verifies that controls exist at a single point in time. Type II verifies that they operated effectively over an observation period, typically 3 to 12 months. Enterprise buyers should require Type II. A snapshot audit tells you a vendor had controls on one day. It doesn't tell you whether those controls held up.

Does SOC 2 prevent AI vendors from training on my data?

No. SOC 2 evaluates whether data protection policies exist. Whether those policies prohibit training on customer data depends on the vendor's terms. Verify this in the data processing agreement, not the SOC 2 report.

Can AI models leak training data?

Yes. Research has shown that language models can memorize and reproduce fragments of their training data when prompted in specific ways. This is why PII redaction before fine-tuning matters, and why zero data retention after inference matters. Once data enters model weights, removing it is an unsolved technical problem.

How is a private AI platform different from a SOC 2 compliant one?

SOC 2 compliance means a vendor passed an audit on operational controls. A private AI platform enforces data isolation architecturally, with zero retention, dedicated infrastructure, and data sovereignty guarantees. They address different layers of risk. Ideally, you want both.

Conclusion

SOC 2 compliance proves that an AI vendor takes operational security seriously. It does not prove that your prompts won't be logged, your training data won't bleed into shared models, or your fine-tuned weights are isolated from other tenants on the same GPU cluster.

The enterprises getting this right are treating SOC 2 as one layer in a stack that includes jurisdictional protection, architectural enforcement, and contractual guarantees specific to AI workloads. They're asking vendors the eight questions listed above. They're running each platform through the green flag / red flag framework. And they're choosing vendors that can verify data handling cryptographically, not just describe it in an audit report.

If your team is starting this evaluation, Prem AI offers SOC 2, GDPR, and HIPAA compliance under Swiss FADP jurisdiction, with zero-retention architecture and cryptographic attestations you can verify independently. Book a walkthrough to see how the compliance stack works in practice.