What Is a Private AI Platform? A Guide for Enterprise Teams

What is a private AI platform? Learn how enterprises deploy AI without exposing sensitive data, plus key features to evaluate before choosing.

Enterprise AI adoption is accelerating, but so are the concerns around it. According to Cisco's 2024 Data Privacy Benchmark Study, 48% of organizations have restricted use of generative AI due to data privacy and security risks.

The core issue: most AI services require sending data to external servers. For teams handling customer records, financial information, or health data, that's an issue. Legal won't sign off. Compliance can't make it work. The project stalls.

A private AI platform changes this equation. Instead of pushing data to a third party, you run AI models within your own infrastructure: on-premises, in a private cloud, or inside a secure VPC. Your data never leaves your environment, and your models stay under your control.

This guide breaks down what a private AI platform actually is, why enterprise teams are adopting private AI, and the specific features to evaluate when choosing a platform. It's written for decision-makers and technical leads who need AI capabilities without compromising on data security.

What Exactly Is a Private AI Platform?

A private AI platform is infrastructure that lets you deploy, run, and fine-tune AI models in an environment you control. That could be your own data center, a private cloud, or a virtual private cloud (VPC) with a provider like AWS or Azure.

The key difference from public AI services: your data never leaves your environment. When you use something like the ChatGPT API, your prompts and data pass through external servers. With a private AI platform, everything stays inside your walls.

You also get control over the models themselves.

Want to fine-tune an LLM on your proprietary data? You can. Need to audit how the model behaves? That's possible too.

No surprise policy changes from a vendor. No wondering what happens to your data after you hit send.
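One way to make "your data never leaves your environment" concrete is to have client code refuse to send data anywhere except an internal address. The following is a minimal sketch, assuming the inference endpoint is configured as an IP address. `endpoint_is_internal` is a hypothetical helper. A real deployment would enforce the same rule at the network layer (firewall or VPC rules) as well, not only in application code.

```python
import ipaddress
from urllib.parse import urlparse


def endpoint_is_internal(url: str) -> bool:
    """Hypothetical guard: True only if the URL's host is a private or loopback IP literal.

    DNS names are deliberately not resolved here; they return False so they
    can be verified some other way (for example, against your internal DNS).
    """
    host = urlparse(url).hostname
    if host is None:
        return False
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # Not an IP literal (e.g. "api.example.com"), so treat it as unverified.
        return False
    return addr.is_private or addr.is_loopback
```

A client would call this before sending any prompt and fail closed if it returns `False`.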

Public AI vs Private AI Platform

For teams handling sensitive information, this distinction matters. It's the difference between hoping a vendor protects your data and knowing exactly where it lives.

Okay… So, Why Do Enterprises Need Private AI?

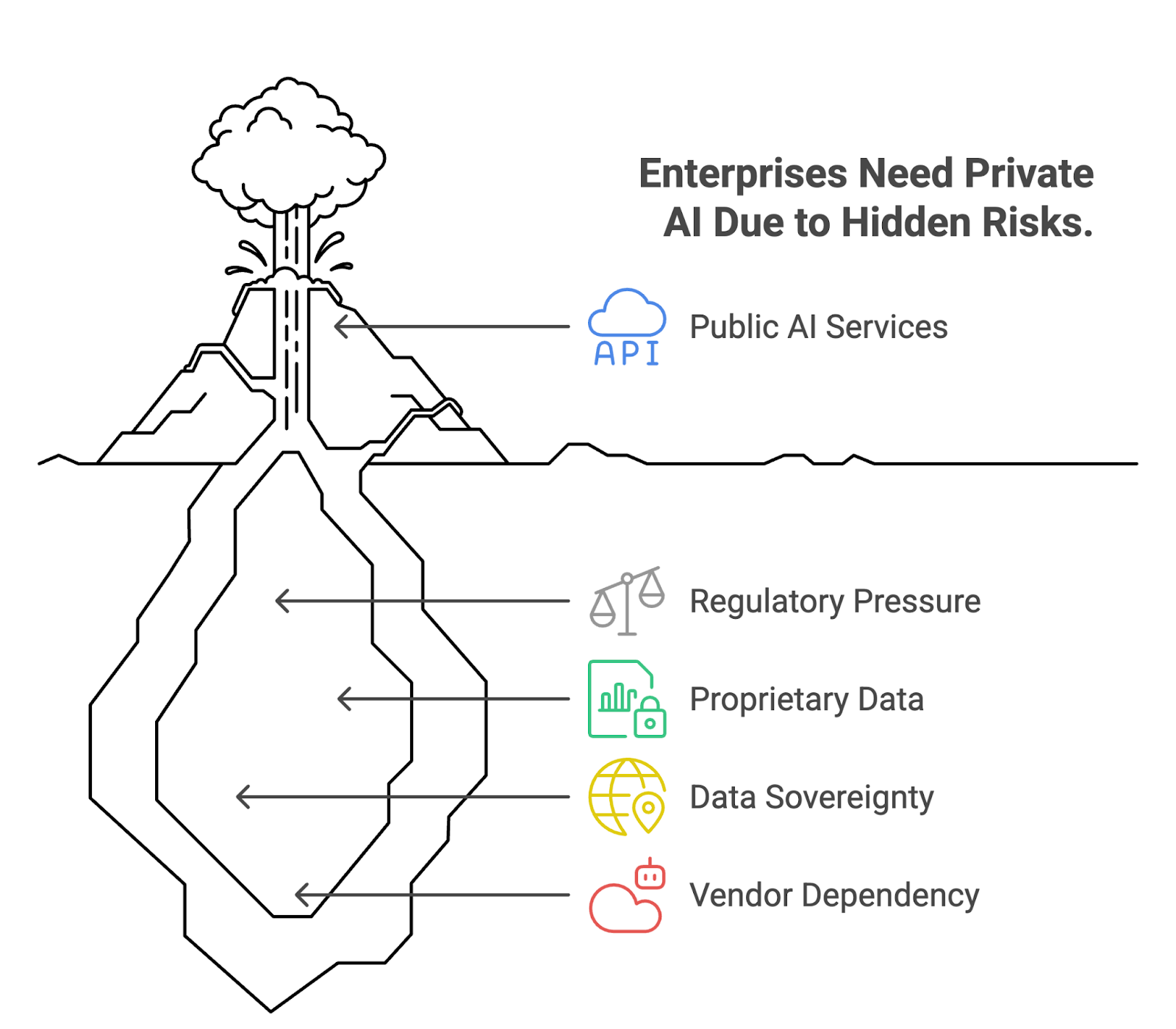

The interest in private AI comes down to one thing: risk.

Public AI services work fine for general tasks. But the moment you're dealing with customer records, financial data, legal documents, or health information, the calculus changes. Sending that data to an external provider opens up questions that most compliance teams don't want to answer.

1. Regulatory pressure is real.

GDPR, HIPAA, and industry-specific regulations, along with audit frameworks like SOC 2, all set requirements for how sensitive data is processed and stored. Sending that data to a public AI API can create gaps that auditors will find. Private AI gives you the control and documentation you need to show those requirements are met.

2. Proprietary data is a competitive asset.

If you're training models on internal research, customer insights, or operational data, you probably don't want that flowing through someone else's servers. One breach or one policy change from a vendor could expose what makes your business different.

3. Data sovereignty isn't optional for some industries.

Financial services, healthcare, government, and defense often have strict rules about where data can physically reside. Public AI providers can't always guarantee jurisdiction. Private deployment can.

4. Vendor dependency creates long-term risk.

Pricing changes, API deprecations, shifting terms of service. When you build on infrastructure you control, you're not waiting to see what a provider decides next quarter.

The pattern across all of these: enterprises want AI capabilities without giving up control. Private AI is how you get both.

Key Features to Evaluate in a Private AI Platform

Not every private AI platform works the same way. Before you commit, here's what to look at.

1. Deployment options matter first.

Some platforms only support cloud deployment. Others let you deploy on-premises or within your own VPC. Match the platform to your infrastructure requirements. If your security policy demands air-gapped environments, make sure that's actually possible.

2. Data sovereignty and retention policies.

Ask exactly where data goes during inference and training. A genuine private AI platform should offer zero data retention. Your prompts, outputs, and training data shouldn't persist anywhere outside your control.
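Zero data retention still leaves room for operational logging, as long as the logs hold metadata rather than content. The following is a minimal sketch of that idea: it persists who called which model and when, plus a hash and length of the prompt, but never the prompt text. The record fields and the `make_record` helper are hypothetical and are not any particular platform's schema.

```python
import hashlib
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class InferenceRecord:
    """What gets persisted about a request: metadata only, never the text."""
    timestamp: float
    user_id: str
    model: str
    prompt_sha256: str
    prompt_chars: int


def make_record(user_id: str, model: str, prompt: str) -> InferenceRecord:
    # Hash the prompt so repeated requests can be correlated during debugging
    # without the content itself ever being written to storage.
    digest = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
    return InferenceRecord(
        timestamp=time.time(),
        user_id=user_id,
        model=model,
        prompt_sha256=digest,
        prompt_chars=len(prompt),
    )
```

A hash of a short or predictable prompt can sometimes be guessed, so stricter policies drop the hash as well.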

3. Compliance certifications save time.

SOC 2 Type II, GDPR compliance, HIPAA readiness, ISO 27001. If a platform already has these, your audit process gets easier. If it doesn't, you'll be doing extra work to prove your setup is compliant.

4. Model flexibility prevents lock-in.

Can you use different LLMs, such as Mistral, Llama, or Qwen? The ability to switch models or run multiple options matters as the space evolves. Avoid platforms that tie you to a single model provider.

5. Fine-tuning capabilities.

Training models on your proprietary data is where private AI creates real value. Look for platforms with built-in fine-tuning workflows that don't require a dedicated ML team to operate.
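Much of the practical work in fine-tuning is getting internal data into the format the trainer expects. Chat-style JSONL is a common choice: each line holds one example with a `messages` list of system, user, and assistant turns. OpenAI's fine-tuning API uses this shape, and several open-source trainers accept it. The following is a minimal sketch, assuming your platform takes this format (check its documentation). The Q&A pairs in the usage example are hypothetical.

```python
import json
from typing import Iterable


def to_chat_jsonl(pairs: Iterable[tuple[str, str]], system_prompt: str) -> str:
    """Convert (question, answer) pairs into chat-style JSONL, one example per line."""
    lines = []
    for question, answer in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        # ensure_ascii=False keeps non-English text readable in the output file.
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


# Usage with hypothetical internal support data:
jsonl = to_chat_jsonl(
    [("What is our refund window?", "30 days."),
     ("Who approves refunds?", "The support lead.")],
    system_prompt="You are an internal support assistant.",
)
```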

6. API and developer experience.

Your developers will live with this daily. OpenAI-compatible endpoints, solid API documentation, Python and JavaScript SDKs. If integration is painful, adoption stalls.
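"OpenAI-compatible" means existing client code only needs a different base URL. It also ties back to model flexibility: switching models becomes a change to one string. The following is a minimal sketch using only the Python standard library. It builds, but does not send, a `POST` to the `/chat/completions` route under a base URL. The internal address, model names, and placeholder API key are assumptions for illustration.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: str = "placeholder-key") -> urllib.request.Request:
    """Build (without sending) a POST to an OpenAI-compatible /chat/completions route."""
    payload = {
        "model": model,  # swapping models is just a different string here
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Hypothetical internal endpoint; send it with urllib.request.urlopen(req).
req = build_chat_request("http://10.0.0.5:8000/v1/", "model-a", "Summarize this contract.")
```

Self-hosted servers such as vLLM expose this style of API under a `/v1` prefix, and the official `openai` Python SDK accepts a `base_url` argument. In many cases, moving from a public API to a private endpoint changes configuration rather than code.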

7. Security architecture.

Encryption at rest and in transit, role-based access controls, audit logs, firewall configurations. These aren't optional for enterprise AI deployment.
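Role-based access control and audit logs work together: each request is checked against the caller's role, and each decision is recorded whether or not it was allowed. The following is a minimal in-memory sketch. The role names, permissions, and `AccessController` class are hypothetical. A real platform would pull roles from its identity provider and write the audit trail to tamper-resistant storage.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role -> permission mapping for illustration only.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "viewer": {"inference"},
    "ml_engineer": {"inference", "fine_tune"},
    "admin": {"inference", "fine_tune", "manage_models"},
}


@dataclass
class AccessController:
    audit_log: list[dict] = field(default_factory=list)

    def check(self, user: str, role: str, action: str) -> bool:
        """Allow the action only if the role grants it; record every decision."""
        # Unknown roles get an empty permission set, so access is denied by default.
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed
```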

8. Scalability.

Can the platform handle increased workloads as you expand use cases? Check if self-hosting options allow you to scale on your own hardware.
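A rough sizing check helps here: estimate peak token demand, add headroom, and divide by what one model replica can sustain on your hardware. The following is a back-of-the-envelope sketch, not a capacity-planning method. All inputs are assumptions, and per-replica throughput in particular should come from benchmarking your own model on your own GPUs, because it varies widely with model size and batching.

```python
import math


def replicas_needed(peak_requests_per_sec: float,
                    tokens_per_request: float,
                    tokens_per_sec_per_replica: float,
                    headroom: float = 0.3) -> int:
    """Estimate how many model replicas cover peak load plus a safety margin."""
    if tokens_per_sec_per_replica <= 0:
        raise ValueError("tokens_per_sec_per_replica must be positive")
    # Total tokens per second the platform must process at peak.
    peak_tokens_per_sec = peak_requests_per_sec * tokens_per_request
    return math.ceil(peak_tokens_per_sec * (1 + headroom) / tokens_per_sec_per_replica)


# Hypothetical inputs: 10 req/s, 500 tokens each, 2,000 tokens/s per replica.
estimate = replicas_needed(10, 500, 2000)
```

With these placeholder inputs, the estimate is 4 replicas (6,500 tokens/s of padded demand ÷ 2,000 tokens/s, rounded up).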

The right platform balances security with usability. If it's too restrictive, developers will route around it. If it's too loose, compliance fails. Find the middle ground.

Common Use Cases for Private AI

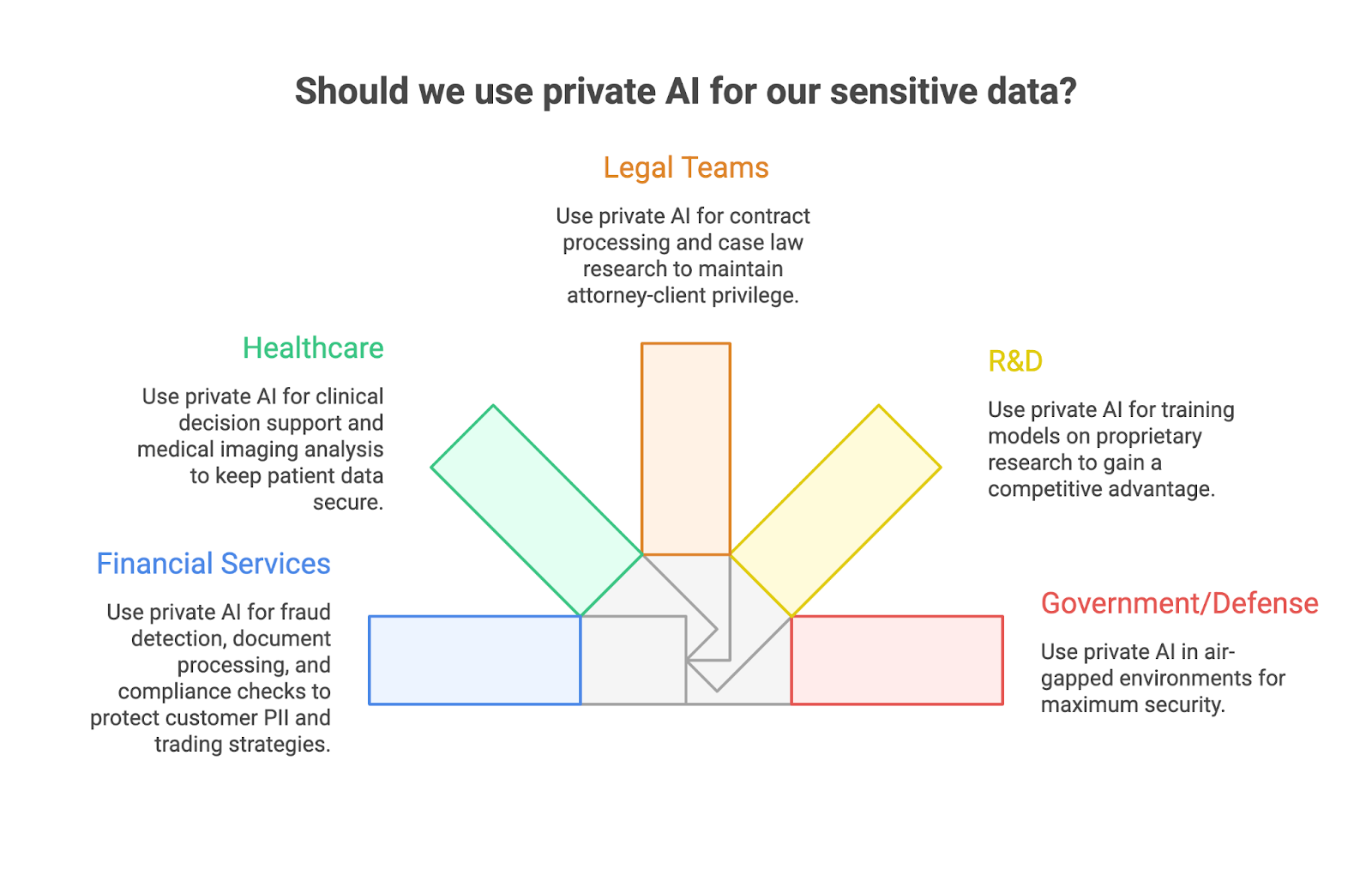

The pattern is predictable: wherever sensitive data meets AI, private deployment becomes the default.

A bank can't send customer transaction records through a public API. A hospital can't feed patient notes into an external model without violating HIPAA. A law firm won't risk client confidentiality for the convenience of a cloud service. These constraints are the norm for enterprise teams handling high-stakes information.

Financial services firms use private AI for fraud detection, document processing, and compliance checks. The data involved (customer PII, transaction histories, trading strategies) would be a regulatory nightmare if it leaked.

In healthcare, the applications range from clinical decision support to medical imaging analysis. Patient data stays inside hospital infrastructure, where it belongs.

Legal teams process contracts, research case law, and summarize documents. Attorney-client privilege doesn't have an exception for AI vendors.

R&D departments are training models on proprietary research and internal knowledge bases. The whole point is to create competitive advantage, not hand it to a third party.

Government agencies and defense contractors often need air-gapped environments. Public cloud isn't even in the conversation.

If you're working with data you wouldn't attach to an email and send externally, private AI is likely the right fit.

Getting Started with Private AI

Before evaluating platforms, get clear on what you actually need.

Start by mapping where sensitive data already touches AI in your organization. Maybe it's a team using ChatGPT for internal docs. Maybe it's customer support drafting responses with an external tool. These are your compliance gaps and your first candidates for private deployment.

Next, define your requirements. What deployment model fits your infrastructure? On-premises, private cloud, hybrid? What compliance certifications does your industry require? What scale do you need to support?

From there, shortlist platforms that match. Look at options like Prem AI, Together AI, or self-hosted setups with vLLM. Each has tradeoffs around ease of use, flexibility, and control.

Don't try to boil the ocean. Pick one use case, run a pilot, and expand from there. Most teams start with something contained, like internal document Q&A or a support assistant, before rolling out more broadly.

If data sovereignty and compliance are priorities, Prem AI offers Swiss-jurisdiction hosting, zero data retention, and fine-tuning workflows built for enterprise teams. Worth a look if that fits your requirements.

Book a demo to see if it matches what you're building.

Frequently Asked Questions

1. Is there an AI that is private?

Yes. Private AI platforms let you run AI models inside your own infrastructure instead of sending data to external servers. Options include self-hosted solutions like vLLM and Ollama, or managed platforms like Prem AI that offer on-premises and private cloud deployment. The key difference from public AI: your data stays within your environment.

2. What is a private AI?

Private AI refers to artificial intelligence systems deployed in environments where the organization maintains full control over data, models, and outputs. Unlike public AI services where your prompts pass through third-party servers, private AI keeps everything internal. It's designed for enterprises that can't risk exposing sensitive information to external providers.

3. Which AI is 100% private?

No AI is automatically 100% private. Privacy depends entirely on how you deploy it. A model running on air-gapped infrastructure you own comes closest. Platforms like Prem AI offer zero data retention and Swiss-jurisdiction hosting, but achieving full privacy also requires proper security configuration on your end. The platform matters, but so does your setup.

4. What is the difference between private AI and public AI?

Public AI services like ChatGPT or Claude process your requests on the provider's servers. They may log interactions and use data for model improvements. Private AI runs within infrastructure you control. No data leaves your environment, you decide retention policies, and you can fine-tune models on proprietary information without sharing it externally.

5. Can I fine-tune models on a private AI platform?

Yes. Most private AI platforms support fine-tuning on your proprietary data. This is actually one of the main advantages. You can train models to understand your domain, your terminology, and your specific use cases without exposing that training data to third parties. The result is better accuracy on tasks that matter to your business.

6. Is private AI more expensive than using public APIs?

It depends on usage patterns. Public APIs charge per token, which adds up quickly at scale. Private AI involves infrastructure costs but offers predictable pricing. For high-volume workloads or sensitive data processing, private deployment often costs less over time while giving you more control. Run the numbers for your specific use case.
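One way to run those numbers is to find the break-even point, where a fixed monthly infrastructure cost equals what the same volume would cost at a per-token price. The following is a minimal sketch. The $10,000/month and $5 per million tokens figures are placeholders, not real prices. It also assumes a single blended per-token price, although many APIs price input and output tokens differently. A real comparison should also include staff time, hardware amortization, and how fully the infrastructure is used.

```python
def monthly_api_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Per-token pricing: spend grows linearly with usage."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(fixed_monthly_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which a fixed-cost private deployment matches API spend."""
    return fixed_monthly_cost / price_per_million_tokens * 1_000_000


# Placeholder figures: $10,000/month for private infrastructure vs $5 per million tokens.
threshold = break_even_tokens(10_000, 5.0)
```

With these placeholder figures, the threshold is 2 billion tokens per month. Above that volume the fixed-cost deployment is cheaper, and below it the per-token API is.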

7. What compliance certifications should a private AI platform have?

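Look for SOC 2 Type II and ISO 27001, plus documented GDPR compliance and HIPAA readiness if you're in healthcare. SOC 2 Type II and ISO 27001 involve independent audits of a provider's security and data handling practices. GDPR and HIPAA are regulations rather than certifications, so ask how the platform supports your obligations under them. Without this evidence, you'll need to do more work internally to prove your AI deployment meets regulatory requirements.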

8. How long does it take to deploy a private AI platform?

Simple deployments can be up and running in days. More complex setups involving on-premises infrastructure, custom integrations, and fine-tuning workflows might take a few weeks. Platforms with solid documentation and pre-built integrations speed things up considerably. Start with a pilot project rather than trying to deploy enterprise-wide from day one.

Conclusion

Private AI isn't a niche requirement anymore. As regulations tighten and data breaches get more expensive, the ability to run AI workloads without exposing sensitive information has become a baseline expectation for enterprise teams.

The good news: you don't have to choose between AI capabilities and data control. Private AI platforms give you both.

Start small. Pick a use case where data sensitivity is already a concern. Run a pilot. See how it fits your workflow and compliance requirements. Then scale from there.

If you're evaluating options and data sovereignty matters to your organization, Prem AI is built for exactly this. Zero data retention, Swiss jurisdiction, enterprise-grade security, and fine-tuning capabilities that don't require a dedicated ML team.

Book a demo and see if it fits what you're building.