SLM vs LoRA LLM: Edge Deployment and Fine-Tuning Compared

Fine-tuning is critical for adapting language models to real-world tasks. This blog compares SLM full fine-tuning with LoRA for LLMs, highlighting strengths, challenges, and edge deployment strategies. Learn how PremAI enables efficient, scalable, and enterprise-ready AI solutions.

Fine-Tuning Approaches: SLMs vs. LoRA on LLMs

Fine-tuning has become essential in natural language processing (NLP) to tailor pre-trained language models to specific tasks and datasets. Two distinct methodologies have emerged prominently: full fine-tuning, often employed for smaller language models (SLMs), and Low-Rank Adaptation (LoRA), increasingly popular for large language models (LLMs). This section introduces and compares these two fine-tuning strategies, highlighting their key principles, strengths, and suitable use cases.

Full Fine-Tuning for SLMs

Full fine-tuning involves adjusting all parameters of a pre-trained language model to specialize it for specific downstream tasks. This approach is particularly suitable for smaller language models, typically ranging from millions to a few billion parameters. Its straightforward implementation makes it highly accessible to teams aiming for task-specific adaptations.

Technical Overview:

- During full fine-tuning, the model undergoes additional training iterations on new, task-specific datasets. Every model parameter, from embeddings to transformer layers, is updated using gradient-based optimization methods such as Adam or SGD.

- Given their smaller parameter count, SLMs are more computationally tractable for complete fine-tuning. This allows extensive optimization of all layers, providing greater flexibility and, often, task-specific accuracy.

- However, fully fine-tuning an SLM carries memory overhead beyond the weights themselves: gradients and optimizer states typically push the training footprint to around 12 times the size of the model itself.
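As a rough illustration of that memory figure, the sketch below turns the roughly 12x rule of thumb into numbers. The function name, the FP16 storage assumption (2 bytes per parameter), and the 1B-parameter example are illustrative assumptions, not measurements.

```python
def estimate_full_finetune_memory_gb(num_params, bytes_per_param=2, overhead_factor=12.0):
    """Return (model_size_gb, training_footprint_gb).

    overhead_factor is the rule of thumb quoted in this article (about 12x the
    model size once gradients and optimizer states are included). It is an
    assumption here; real footprints depend on optimizer, precision, and batch size.
    """
    model_gb = num_params * bytes_per_param / 1e9
    return model_gb, model_gb * overhead_factor


if __name__ == "__main__":
    # Hypothetical 1B-parameter SLM stored in FP16.
    model_gb, train_gb = estimate_full_finetune_memory_gb(1_000_000_000)
    print(f"weights: {model_gb:.1f} GB, training footprint: {train_gb:.1f} GB")
```

Under these assumptions, a 1B-parameter model with 2 GB of weights would need on the order of 24 GB to fine-tune, which is why full fine-tuning is usually done off-device even when the resulting model is deployed at the edge.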

Strengths and Considerations:

- Strengths: High task specialization, simple implementation, fewer hyperparameters to tune compared to LoRA.

- Considerations: Computationally demanding relative to parameter-efficient alternatives, risk of overfitting (especially with limited training data), requires considerable hardware resources for training.

Typical Use Cases:

- Scenarios with moderate computational resources and smaller, domain-specific datasets.

- Situations where model simplicity, complete parameter control, and specialized accuracy outweigh the computational overhead.

LoRA Fine-Tuning Method for LLMs

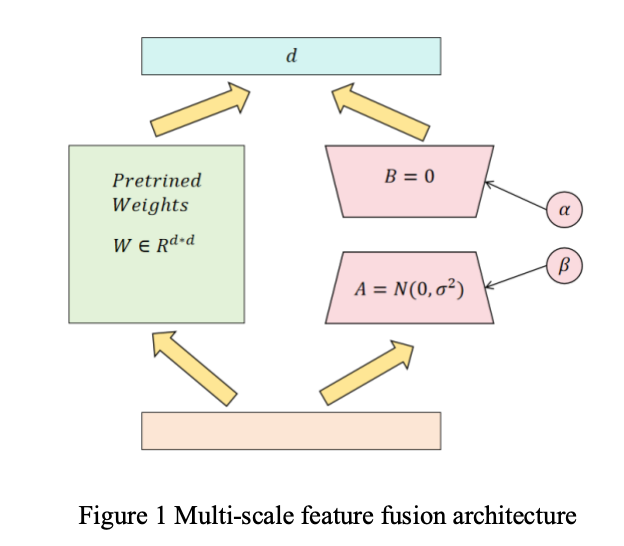

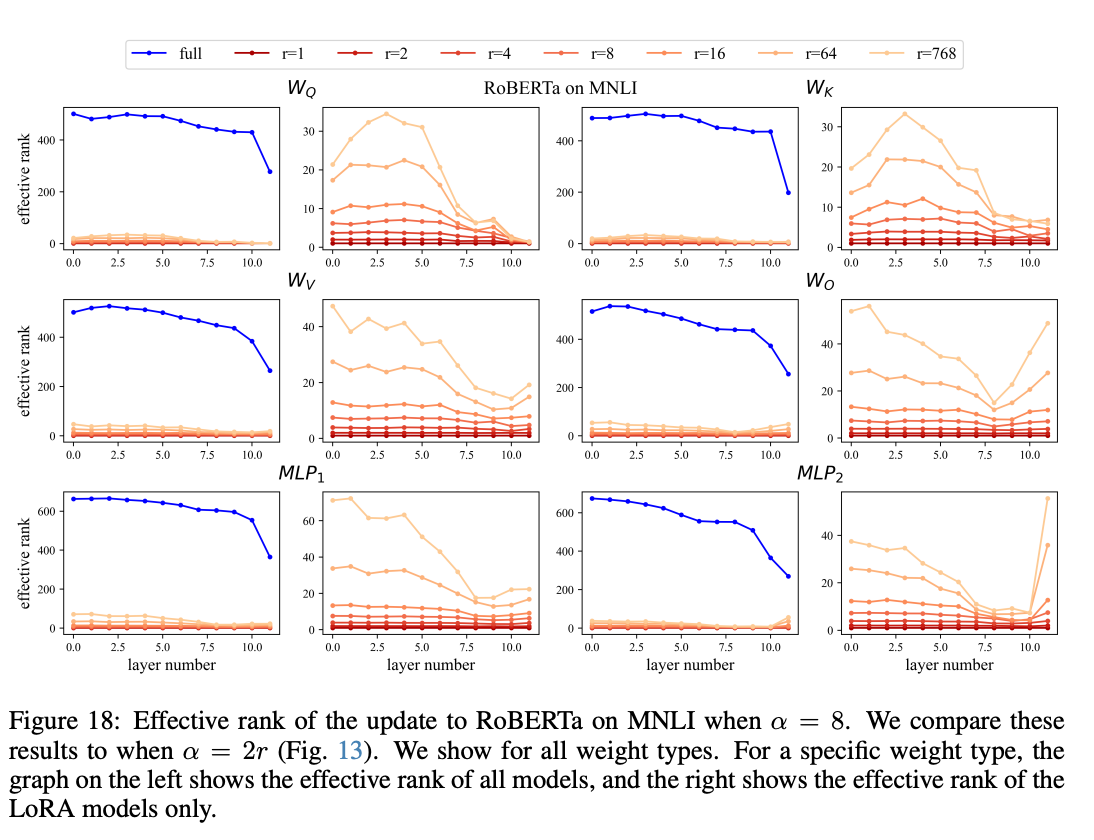

Low-Rank Adaptation (LoRA) takes a fundamentally different, parameter-efficient approach. Introduced to make adapting large language models practical, LoRA reduces the computational burden by representing the update to each targeted weight matrix as the product of two small, trainable low-rank matrices, while the original parameters stay frozen.

Technical Overview:

- LoRA operates under the principle that task-specific adaptation typically results in weight updates with low intrinsic dimensionality. In practice, it approximates the weight update matrix (ΔW) as the product of two smaller matrices B (d×r) and A (r×k), where r≪min(d,k) and d×k is the shape of the original weight matrix:

ΔW=BA

- In contrast to full fine-tuning, during LoRA training, only these smaller matrices A and B are updated, significantly reducing memory and computational requirements.

- The original, pre-trained model parameters are frozen, allowing the model to retain general knowledge learned during pre-training. This strategy drastically lowers training cost and adds little inference overhead, enabling larger models to be adapted efficiently even in resource-constrained environments.
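To make the ΔW = BA factorization concrete, here is a minimal sketch in plain Python. It counts trainable parameters for a full d×k update versus the two LoRA factors, and builds a tiny rank-1 update. The helper names and the 4096×4096 layer size are assumptions chosen for illustration.

```python
def lora_param_counts(d, k, r):
    """Trainable parameters: full d x k update vs. LoRA factors B (d x r) and A (r x k)."""
    return d * k, r * (d + k)


def matmul(a, b):
    """Plain-Python matrix product of two lists-of-rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_delta(B, A):
    """Weight update delta_W = B @ A, which has the same d x k shape as the frozen weight."""
    return matmul(B, A)


if __name__ == "__main__":
    full, lora = lora_param_counts(d=4096, k=4096, r=8)
    print(f"full update: {full}, LoRA factors: {lora} ({100 * lora / full:.2f}%)")

    # Rank-1 toy example: B is 2 x 1, A is 1 x 3, so delta_W is 2 x 3.
    print(lora_delta([[1], [2]], [[3, 4, 5]]))
```

For the hypothetical 4096×4096 layer at r = 8, the factors hold 65,536 trainable values against 16,777,216 for a full update, about 0.39%.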

Strengths and Considerations:

- Strengths: Extremely efficient regarding computational cost, lower memory overhead, less risk of catastrophic forgetting due to limited parameter updates, quick training iterations, suitable for resource-constrained settings.

- Considerations: Slightly less flexible than full fine-tuning as the approximated update may not always perfectly capture highly complex task-specific nuances. Its effectiveness depends heavily on hyperparameters like the rank r, learning rate, and the choice of targeted modules.

Typical Use Cases:

- Adapting extremely large models (billions of parameters) on limited computational resources.

- Deploying multiple specialized large-scale models efficiently at scale, especially relevant for edge devices and multi-task learning environments.

Comparative Summary Table

| Aspect | Full Fine-Tuning (SLM) | LoRA Fine-Tuning (LLM) |
|---|---|---|
| Trainable Parameters | All parameters | Small fraction (low-rank matrices) |
| Computational Cost | Higher | Significantly Lower |
| Memory Overhead | High | Low |
| Training Stability | Generally stable but costly | Stable with careful hyperparameter selection |
| Risk of Overfitting | Moderate to High | Lower |
| Task-specific Specialization | High flexibility and accuracy | Good, but occasionally constrained by low-rank approximation |

Comparative Analysis of Computational Efficiency and Inference Performance

When choosing a fine-tuning strategy, computational efficiency and inference performance are crucial factors. Here, we delve into how full fine-tuning of Small Language Models (SLMs) contrasts with LoRA-based fine-tuning of Large Language Models (LLMs), focusing on inference speed, computational load, and the strategic application of quantization.

Inference Efficiency and Computational Load

The efficiency of inference refers to how quickly and resource-effectively a fine-tuned model processes inputs at runtime. While full fine-tuning provides high adaptability by adjusting every parameter, it often demands considerable computational resources. In contrast, LoRA fine-tuning seeks a balance by maintaining parameter efficiency, leading to potentially faster inference speeds and lower computational overhead.

Inference Performance of Fully Fine-Tuned SLMs:

- Full fine-tuning updates every parameter in a model, making the optimized weights highly specialized but computationally intensive to produce.

- The heaviest cost falls on training rather than inference: during fine-tuning, gradients and optimizer states can increase the memory footprint to about 12 times the original model size. At inference time, a fully fine-tuned model needs the same memory and compute as the base model it started from.

- However, once fine-tuned, smaller models (SLMs) are often manageable in inference environments, particularly if adequately optimized (e.g., pruning, quantization).

Inference Performance of LoRA-based LLMs:

- LoRA fine-tuning significantly decreases the computational burden by decomposing the weight-update matrices into two small low-rank matrices, drastically reducing the computational complexity.

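- Since the original parameters are frozen during training, only the small adapter matrices need to be stored per task. The learned update can be merged into the base weights before serving, so inference adds no extra latency over the base model, and multiple adapters can share one set of base weights to reduce memory overhead.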

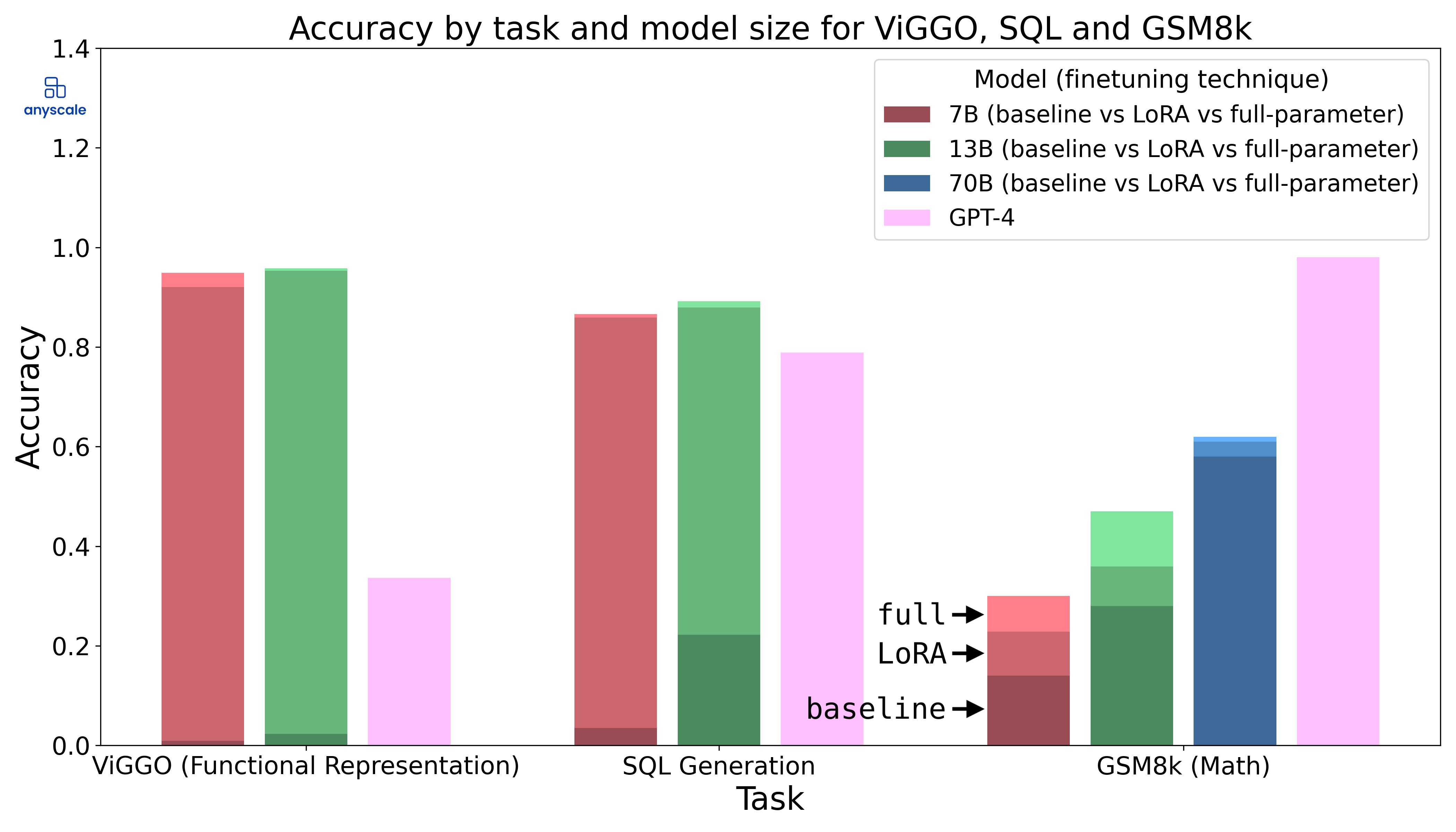

- Studies show LoRA-based fine-tuning achieves near full-parameter fine-tuning accuracy with substantially reduced computational demands. For instance, LoRA-adapted Llama-2 models demonstrated performance comparable to fully fine-tuned models, yet required significantly fewer computational resources.
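One property of the LoRA update matters for serving: because BA has the same shape as the frozen weight W, it can be folded into W once training is done. The sketch below is a plain-Python illustration of that idea, not any library's API. The function names and the `scale` parameter (standing in for the usual alpha/r scaling) are assumptions.

```python
def matvec(m, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def matmul(a, b):
    """Plain-Python matrix product of two lists-of-rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(W, B, A, scale=1.0):
    """Return W + scale * (B @ A): one dense matrix, same shape as W."""
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


def forward_unmerged(W, B, A, x, scale=1.0):
    """Compute W x + scale * B (A x) with the adapter kept separate."""
    base = matvec(W, x)
    low_rank = matvec(B, matvec(A, x))
    return [b + scale * l for b, l in zip(base, low_rank)]


if __name__ == "__main__":
    W = [[1, 2], [3, 4]]
    B = [[1], [0]]          # 2 x 1
    A = [[2, 1]]            # 1 x 2
    x = [1, 1]
    print(matvec(merge_lora(W, B, A), x), forward_unmerged(W, B, A, x))
```

Both paths produce the same output. The merged form costs exactly one matrix multiply per layer, the same as the base model, while the unmerged form makes it easy to swap adapters over a shared base.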

Benchmark Comparison (Experimental Insights):

| Method | Accuracy (ACC) | F1 Score | Matthews Correlation (MCC) |
|---|---|---|---|
| Full Fine-tuned SLM (Baseline models: BERT, RoBERTa, T5) | High | High | High |
| LoRA Fine-tuned LLM (GPT-4 with LoRA) | Slightly Higher | Higher | Higher |

These benchmarks emphasize LoRA’s capability to optimize computational load significantly while achieving robust inference performance close to, or surpassing, fully fine-tuned models.
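For readers less familiar with the three metrics in the table, the following sketch computes them for a binary classifier from confusion-matrix counts. The function name and the example counts are illustrative assumptions and are unrelated to the benchmark results above.

```python
import math


def classification_metrics(tp, fp, fn, tn):
    """Return (accuracy, f1, mcc) from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total

    f1_den = 2 * tp + fp + fn
    f1 = 2 * tp / f1_den if f1_den else 0.0

    # MCC uses all four cells, so it stays informative on imbalanced data.
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_den if mcc_den else 0.0
    return accuracy, f1, mcc


if __name__ == "__main__":
    acc, f1, mcc = classification_metrics(tp=40, fp=10, fn=5, tn=45)
    print(f"ACC={acc:.3f} F1={f1:.3f} MCC={mcc:.3f}")
```

MCC ranges from -1 to 1, with 0 corresponding to chance-level predictions, which is why it is often reported alongside accuracy and F1.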

Model Quantization Strategies

Quantization is a critical strategy for optimizing inference efficiency. It reduces memory usage and accelerates computation by converting model parameters from high-precision (e.g., FP32) to lower-precision representations (e.g., FP16, INT8).
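A minimal sketch of what the conversion to INT8 looks like, assuming symmetric per-tensor quantization, which is one common scheme among several. The function names are hypothetical. Production toolchains typically quantize per channel and calibrate on real data.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization to the integer range [-127, 127].

    Returns the integer codes and the scale needed to map them back to floats.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.003, 1.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(codes, f"scale={scale:.4f}", f"max error={worst:.4f}")
```

Each weight now occupies one byte instead of four, and the rounding error per weight is bounded by half the scale. Small weights next to a large outlier lose the most relative precision, which is one reason aggressive quantization can hurt accuracy.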

Quantization of Fully Fine-Tuned SLMs:

- SLMs, due to their smaller size, generally respond well to quantization. However, aggressive quantization (e.g., INT8) can risk noticeable performance degradation.

- Full fine-tuning typically allows thorough retraining during quantization-aware training (QAT), which helps minimize accuracy losses, improving deployment suitability on edge devices.

Quantization of LoRA-based LLMs:

- LoRA-based LLMs inherently possess lower memory footprints, benefiting significantly from quantization. The reduced parameter count to update further amplifies memory and computational savings, making quantization particularly advantageous.

- Lower-rank matrices (from LoRA) adapt well to quantization methods without substantial accuracy degradation, providing a balanced trade-off between precision and resource usage.

Comparison of Quantization Strategies:

| Quantization Method | Memory Reduction | Computation Speedup | Potential Accuracy Impact |
|---|---|---|---|
| FP16 | Moderate (~2x) | Moderate (~2x) | Minimal |
| INT8 | High (~4x) | High (~4x or more) | Possible Moderate Impact (mitigated via QAT) |

Quantization-aware fine-tuning is particularly crucial when deploying models on edge devices such as Jetson Nano or Raspberry Pi, where resources are limited.

Comparative Summary and Key Insights:

| Feature | Full Fine-tuning (SLM) | LoRA Fine-tuning (LLM) |
|---|---|---|
| Computational Efficiency | Moderate-Low | High |
| Inference Speed | Moderate | High |
| Memory Requirements | Moderate-High | Low |
| Suitability for Quantization | Good | Excellent |
| Edge Deployment Suitability | Moderate | High |

LoRA emerges as an efficient choice for inference performance, particularly beneficial in constrained computational scenarios or resource-sensitive deployments.

Robustness, Stability, and Generalization Capabilities

Robustness and generalization are critical attributes of effectively fine-tuned models. In this section, we explore how full fine-tuning and LoRA-based methods impact these crucial aspects. We’ll examine the stability during training, the sensitivity of each method to hyperparameters, and discuss their respective generalization behaviors, specifically highlighting phenomena unique to LoRA, such as intruder dimensions.

Training Stability and Robustness

Training stability refers to the consistency with which a model converges to an optimal solution during fine-tuning, without instability or divergence. A robust training approach ensures predictable results, reliable performance, and reduced computational overhead.

Stability in Fully Fine-Tuned SLMs:

- Full fine-tuning methods, while conceptually straightforward, can experience instability or convergence issues, particularly with limited datasets or complex optimization landscapes.

- Full fine-tuning is highly sensitive to the learning rate, which often requires careful tuning. Because all parameters are adjusted, the model has more flexibility and can typically recover more quickly from suboptimal training paths.

- Full fine-tuning's robustness can deteriorate significantly if training data is limited, potentially causing overfitting and reduced model reliability when facing unseen data.

Stability in LoRA-Based Fine-Tuning:

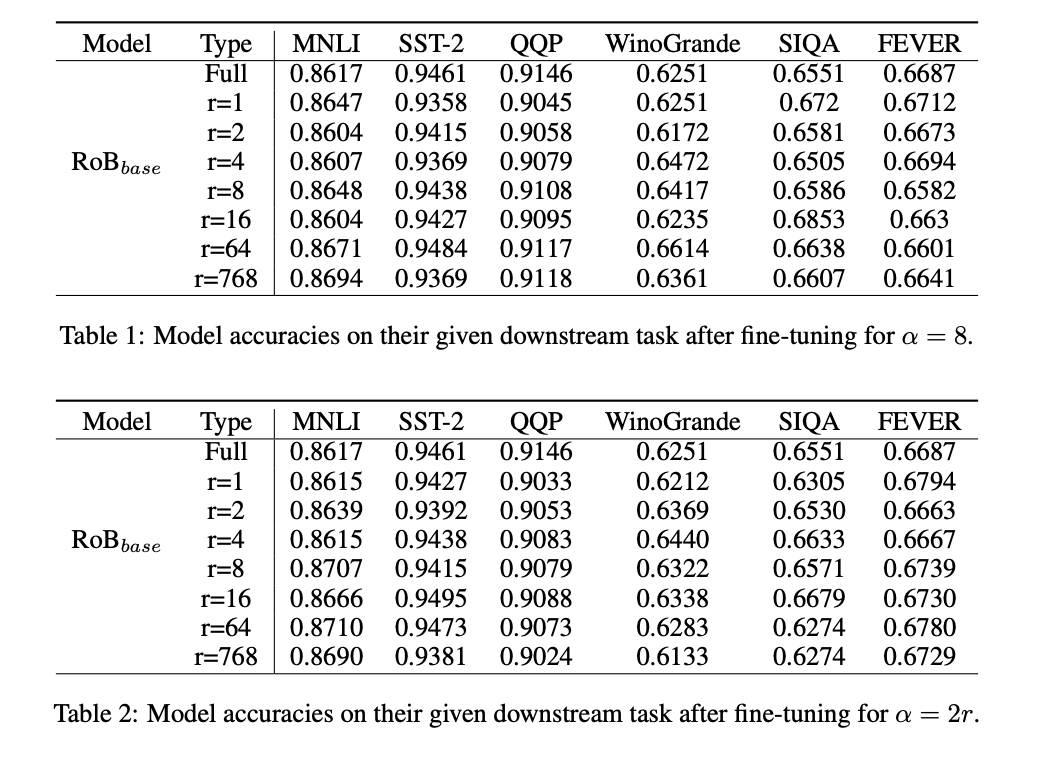

- LoRA-based fine-tuning, due to fewer trainable parameters, generally exhibits higher training stability. The selection of critical hyperparameters (e.g., learning rate and LoRA rank) substantially influences stability.

- Empirical experiments demonstrate that using overly high learning rates can lead to unstable training trajectories, dramatically impacting the final model’s performance. Reducing learning rates often improves stability, leading to more predictable convergence and less training variability.

- With proper hyperparameter settings, LoRA training converges nearly as optimally as full fine-tuning, yet with significantly lower computational overhead, making it particularly appealing for large models.

Comparison of Training Stability:

| Stability Factors | Full Fine-Tuning (SLM) | LoRA Fine-Tuning (LLM) |
|---|---|---|
| Hyperparameter Sensitivity | Moderate-High | High (learning rate) |
| Convergence Predictability | Moderate | High (with careful tuning) |
| Computational Cost for Stability | Higher | Lower |
| Overfitting Risk | Moderate-High | Low-Moderate |

Generalization and Intruder Dimensions in LoRA

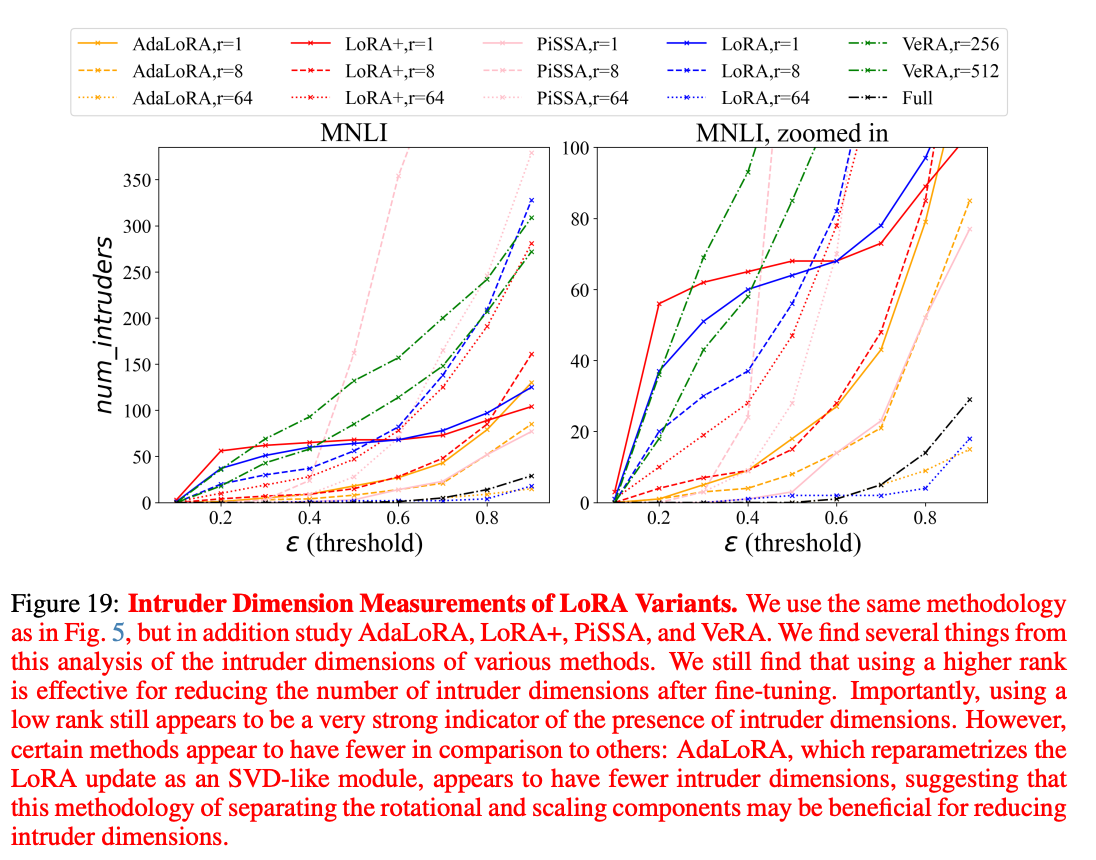

Generalization measures how effectively a model performs on data it has not explicitly seen during training. An intriguing phenomenon in LoRA fine-tuning methods, known as "intruder dimensions", can significantly influence generalization behavior.

Generalization in Fully Fine-Tuned SLMs:

- Fully fine-tuned models, due to their comprehensive parameter updates, often achieve high task-specific generalization, especially if training data is representative and abundant.

- However, extensive parameter adjustments increase the risk of overfitting, particularly if training datasets are small or insufficiently diverse. Overfitted models generalize poorly to out-of-distribution (OOD) or slightly shifted tasks, limiting broader applicability.

Generalization in LoRA-based Fine-Tuned LLMs:

- LoRA-based fine-tuning typically results in models with superior generalization properties due to fewer parameter updates. This naturally preserves the original pre-trained model knowledge, allowing better performance across various tasks.

- Nevertheless, recent research highlights a distinctive challenge in LoRA fine-tuning called intruder dimensions. Intruder dimensions refer to new singular vectors emerging during LoRA training that have negligible similarity to the singular vectors of the original pre-trained model parameters. Such dimensions are nearly absent in fully fine-tuned models.

Intruder Dimensions Explained:

- Mathematically, LoRA updates introduce low-rank approximations, creating singular vectors that significantly deviate from those in the pre-trained parameters. These intruder dimensions might cause subtle divergences from original knowledge representation, potentially reducing robustness when encountering data significantly outside the original fine-tuning distribution.

- Experiments show intruder dimensions negatively influence model robustness in continual learning settings, where sequential task adaptation is crucial. Models fine-tuned with LoRA containing these intruder dimensions tend to generalize less effectively to tasks beyond the original fine-tuning objective.

- Interestingly, when LoRA's rank increases past a certain threshold, the occurrence of intruder dimensions decreases, making higher-rank LoRA setups closer to fully fine-tuned solutions in terms of generalization characteristics.
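The description above suggests a simple check: a singular vector of the fine-tuned weights is flagged as an intruder when its best cosine similarity to any pre-trained singular vector is low. The sketch below assumes the singular vectors have already been computed elsewhere (for example by an SVD routine) and are passed in as plain lists. The function names and the 0.5 threshold are illustrative assumptions, not values taken from the research discussed here.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def find_intruder_dimensions(pretrained_vectors, tuned_vectors, threshold=0.5):
    """Return indices of tuned singular vectors that match no pre-trained one.

    The sign of a singular vector is arbitrary, so the absolute cosine is used.
    """
    intruders = []
    for index, tuned in enumerate(tuned_vectors):
        best_match = max(abs(cosine_similarity(tuned, base))
                         for base in pretrained_vectors)
        if best_match < threshold:
            intruders.append(index)
    return intruders


if __name__ == "__main__":
    pretrained = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    tuned = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # second vector points somewhere new
    print(find_intruder_dimensions(pretrained, tuned))
```

In this toy case only the second fine-tuned vector is flagged, since it is orthogonal to every pre-trained direction.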

Managing Intruder Dimensions and Recommendations:

- Selecting higher LoRA ranks (such as r≥16) can mitigate issues related to intruder dimensions, aligning LoRA models closer to full fine-tuning behavior while preserving computational efficiency.

- Careful hyperparameter tuning, particularly regarding learning rate and rank stability techniques, significantly improves LoRA generalization and robustness.

Comparative Summary of Generalization Capabilities:

| Generalization Aspect | Full Fine-tuning (SLM) | LoRA Fine-tuning (LLM) |
|---|---|---|
| Task-Specific Generalization | High | High |
| Cross-Task Generalization | Moderate | Moderate-High |
| Impact of Intruder Dimensions | Minimal (None) | Present (manageable) |
| Continual Learning Capabilities | Moderate-Low | Moderate-High (rank-dependent) |

Key Takeaways

- Training Stability: LoRA offers significant computational efficiency and stability, conditional on meticulous hyperparameter selection. Full fine-tuning remains flexible but at a higher computational cost and potential stability risks.

- Generalization and Intruder Dimensions: LoRA inherently promotes good generalization, but users must manage the impact of intruder dimensions through careful selection of the LoRA rank and learning rates.

Deployment on Edge Devices: Raspberry Pi and Jetson Nano

Deploying fine-tuned language models on edge devices such as Raspberry Pi or Jetson Nano involves critical considerations related to limited computational power, memory constraints, thermal management, and energy efficiency. Here we compare the deployment feasibility of fully fine-tuned Small Language Models (SLMs) versus LoRA-based Large Language Models (LLMs), offering strategic recommendations and highlighting practical optimization methods.

Challenges in Edge Device Deployment

Edge devices like Raspberry Pi and Jetson Nano offer significant advantages, including low latency, enhanced privacy, and reduced bandwidth consumption. However, their limited computational resources present distinct deployment challenges:

Memory Constraints:

Most edge devices offer limited RAM (typically 1GB–8GB), severely restricting model size and computational complexity.
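A back-of-the-envelope check for this constraint is sketched below. It counts weight storage only and ignores activations, caches, and the operating system, so the `usable_fraction` of 0.7 is a deliberately rough assumption, as are the function name and example sizes.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def precisions_that_fit(num_params, ram_gb, usable_fraction=0.7):
    """Return the precisions at which the model weights fit in device RAM.

    usable_fraction is an assumed share of RAM left for the model after the
    OS and runtime take theirs; adjust it for a real device.
    """
    budget_bytes = ram_gb * 1e9 * usable_fraction
    return [name for name, size in BYTES_PER_PARAM.items()
            if num_params * size <= budget_bytes]


if __name__ == "__main__":
    # Hypothetical 3B-parameter model on an 8 GB board.
    print(precisions_that_fit(3_000_000_000, ram_gb=8))
    # Hypothetical 100M-parameter SLM on a 1 GB board.
    print(precisions_that_fit(100_000_000, ram_gb=1))
```

Under these assumptions the 3B model fits only at INT8, while the 100M model fits at any of the three precisions.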

Computational Power Limitations:

Edge hardware often includes CPUs or modest GPUs (e.g., Jetson Nano's NVIDIA Maxwell GPU), which have limited processing capabilities compared to cloud servers.

Thermal and Power Management:

Continuous high computational load on edge devices can lead to overheating, affecting performance stability and device longevity. Efficient models and computationally lightweight inference strategies are critical.

Deployment Strategies for SLM and LoRA-based LLMs

Given these constraints, effective deployment strategies differ significantly between fully fine-tuned SLMs and LoRA-based LLMs. Below, we examine recommended strategies, emphasizing computational optimization, quantization, and model compression.

Fully Fine-Tuned SLM Deployment Strategies:

- Quantization: Quantizing model weights (FP16, INT8) significantly reduces the memory footprint. INT8 quantization may impact accuracy, making quantization-aware training (QAT) essential.

- Pruning and Distillation: Aggressive pruning of redundant parameters and knowledge distillation techniques substantially reduce model complexity, enabling smoother edge inference.

- Optimized Frameworks: Utilizing frameworks optimized for resource constraints, such as TensorRT, ONNX Runtime, or TensorFlow Lite, can enhance runtime efficiency and inference speed.

Pros and Cons for Fully Fine-Tuned SLM Deployment:

| Advantages | Limitations |
|---|---|
| High task-specific accuracy | High memory consumption |
| Straightforward deployment procedures | Risk of performance degradation after heavy pruning |
| Easier quantization management | Increased training and retraining effort |

LoRA-Based LLM Deployment Strategies:

- Efficient Quantization: LoRA inherently supports effective quantization due to the lower parameter update footprint, greatly enhancing suitability for deployment on devices like Raspberry Pi or Jetson Nano.

- Low-Rank Matrix Optimization: The low-rank parameterization of LoRA significantly decreases computational load and memory requirements, providing inherent suitability for constrained environments.

- Mixed Precision Inference: Employing mixed precision inference (FP16) balances precision and performance, maintaining high accuracy with minimized computational demand.

Pros and Cons for LoRA-Based LLM Deployment:

| Advantages | Limitations |
|---|---|
| Extremely low computational overhead | Rank-selection sensitivity |
| Effective quantization-friendly structure | Potentially limited fine-grained specialization |
| Easier thermal and power management | Requires careful hyperparameter tuning |

Comparative Performance on Edge Hardware (Experimental Insights):

To illustrate these strategic differences concretely, the following hypothetical table summarizes how the choice of fine-tuning method affects the resource efficiency and suitability of edge deployments.

Edge Deployment Comparison: Fully Fine-Tuned SLM vs LoRA LLM

| Aspect | Fully Fine-Tuned SLM | LoRA Fine-Tuned LLM |
|---|---|---|
| Trainable Parameters | Moderate (10M–100M params) | Smaller (≈17.9M–28M trainable params) |
| Memory Requirements (Inference) | Moderate–High | Low |
| Computational Load (Runtime) | Moderate | Low |
| Quantization Efficiency | Good | Excellent |
| Thermal Management | Moderate | Excellent (less heat generation) |
| Deployment Ease (Framework Integration) | Good | Excellent |

The comparative analysis highlights that LoRA fine-tuning methods provide distinct practical advantages over fully fine-tuned models for edge deployment, especially regarding computational load, quantization friendliness, and thermal management.

Key Recommendations for Edge Deployment:

- For Full Fine-tuning of SLMs: Prioritize quantization-aware training and aggressive pruning. Use optimized frameworks such as TensorRT, ONNX Runtime, or TensorFlow Lite to mitigate computational and memory limitations.

- For LoRA-based LLMs: Emphasize careful hyperparameter tuning (rank and learning rate) to maximize the benefits of low-rank approximations. Utilize FP16 or INT8 quantization to reduce computational costs further.

- Thermal and Power Management: Prefer LoRA fine-tuning when managing thermal constraints and power consumption is critical, given its substantially lower computational demands.

Best Practices, Recommendations, and Future Perspectives

To maximize the effectiveness of fine-tuning methods, whether fully tuning Small Language Models (SLMs) or adopting Low-Rank Adaptation (LoRA) for Large Language Models (LLMs), developers must consider practical guidelines, hyperparameter settings, and future trends. This section provides clear recommendations for fine-tuning and deploying models efficiently and explores emerging innovations shaping the landscape of edge AI deployments.

Recommended Hyperparameters and Tuning Guidelines

Careful hyperparameter tuning is essential to the successful deployment and performance of fine-tuned language models. Below are practical recommendations based on experimental findings and best practices from recent literature.

Best Practices for Full Fine-tuning of SLMs:

- Learning Rate: Typically set between 5e-6 and 5e-5 for stable convergence.

- Batch Size: Moderate batch sizes (e.g., 32–64) provide a good balance between convergence stability and memory efficiency on resource-constrained hardware.

- Epochs: Generally, 3–5 epochs suffice to avoid overfitting while achieving task-specific proficiency.

- Quantization and Pruning: Quantization-aware training (QAT) and moderate pruning (up to 50%) are recommended to minimize performance degradation and maintain edge-device compatibility.
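The pruning recommendation can be illustrated with a short sketch. The article does not name a pruning method, so this uses unstructured magnitude pruning, one common choice, on a flat list of weights. The function name is hypothetical, and the 50% default simply mirrors the upper bound mentioned above.

```python
def magnitude_prune(weights, sparsity=0.5):
    """Zero out the smallest-magnitude fraction of weights.

    sparsity is the fraction to remove (0.5 removes the smallest half).
    Returns a new list; the input is left unchanged.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


if __name__ == "__main__":
    print(magnitude_prune([0.1, -0.5, 0.3, -0.05], sparsity=0.5))
```

In practice pruning is usually followed by a short round of further fine-tuning so the remaining weights can compensate for the removed ones.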

Best Practices for LoRA Fine-tuning of LLMs:

- LoRA Rank (r): Set the rank typically between 8 and 16. Higher ranks (≥16) may mitigate issues such as intruder dimensions and enhance generalization, though at slightly higher computational costs.

- Learning Rate Sensitivity: LoRA models are highly sensitive to learning rates. Use conservative rates between 3e-5 and 1e-4 for better training stability.

- Batch Size and Training Stability: Larger batch sizes (e.g., 64–128) enhance computational efficiency without significantly impacting convergence stability.

Hyperparameter Recommendations (Summary):

| Hyperparameter | Full Fine-tuning (SLM) | LoRA Fine-tuning (LLM) |
|---|---|---|
| Learning Rate | 5e-6 – 5e-5 | 3e-5 – 1e-4 |
| Batch Size | 32 – 64 | 64 – 128 |
| Epochs | 3 – 5 | 3 – 4 |
| LoRA Rank (r) | N/A | 8 – 16 (or higher for robustness) |
| Quantization | INT8 with QAT | FP16 or INT8 (high efficiency) |
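One way to keep these recommendations close to the training code is to encode them as a small config check. The sketch below is a hypothetical helper, not part of any training framework. The class name, field names, and `RANGES` table are assumptions that simply restate the summary table.

```python
from dataclasses import dataclass
from typing import Optional

# Recommended ranges restated from the summary table (inclusive bounds).
RANGES = {
    "full": {"learning_rate": (5e-6, 5e-5), "batch_size": (32, 64), "epochs": (3, 5)},
    "lora": {"learning_rate": (3e-5, 1e-4), "batch_size": (64, 128), "epochs": (3, 4)},
}


@dataclass
class FineTuneConfig:
    method: str                      # "full" or "lora"
    learning_rate: float
    batch_size: int
    epochs: int
    lora_rank: Optional[int] = None  # only meaningful for method="lora"

    def out_of_range(self):
        """Return the names of settings that fall outside the recommended ranges."""
        issues = []
        for name, (low, high) in RANGES[self.method].items():
            if not low <= getattr(self, name) <= high:
                issues.append(name)
        # The table suggests a rank of 8 or more, with higher ranks for robustness.
        if self.method == "lora" and (self.lora_rank is None or self.lora_rank < 8):
            issues.append("lora_rank")
        return issues


if __name__ == "__main__":
    print(FineTuneConfig("lora", 1e-4, 64, 3, lora_rank=16).out_of_range())
    print(FineTuneConfig("full", 1e-3, 32, 3).out_of_range())
```

The ranges are starting points rather than hard limits, so a flagged setting is a prompt to double-check, not an error.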

Emerging Trends in Edge Deployment

The rapid evolution in AI and hardware innovations continuously shapes best practices and strategies in deploying fine-tuned language models. Below are prominent emerging trends and technologies expected to influence edge deployments significantly.

Emerging Fine-tuning and Optimization Trends:

- Adaptive Rank Allocation in LoRA: Emerging methods dynamically adjust LoRA ranks to balance computational efficiency and model expressiveness. Such adaptive strategies further optimize deployment on edge devices, maintaining robust generalization with minimal computational cost.

- Rank Stabilization Techniques: Advanced rank stabilization methods address the issue of intruder dimensions effectively, allowing even higher LoRA ranks to achieve robustness closer to fully fine-tuned models without substantial computational penalties.

- Sequential and Multi-task Adaptation: Innovative fine-tuning strategies, such as sequentially trained multiple LoRA modules, can significantly improve continual learning capabilities, crucial for multi-task deployments on edge hardware.

Hardware Innovations for Edge AI:

- Next-Generation Edge GPUs: Advances in edge GPUs (e.g., NVIDIA Jetson Orin, Jetson AGX series) offer increased computational power, enabling larger, more complex fine-tuned models to be deployed efficiently.

- Energy-efficient AI Accelerators: New specialized hardware accelerators (e.g., TPUs, NPUs) designed explicitly for edge devices enable highly efficient inference, significantly enhancing the feasibility of deploying LoRA-fine-tuned LLMs and fully fine-tuned SLMs.

Software and Framework Enhancements:

- Improved Runtime Optimization Frameworks: Future developments in runtime frameworks (TensorRT, ONNX Runtime, TensorFlow Lite Micro) promise even more efficient model inference through optimized computational graphs and further quantization innovations.

- AutoML and Hyperparameter Optimization: Automated Machine Learning (AutoML) frameworks and hyperparameter optimization tools will continue to streamline and simplify model fine-tuning, ensuring optimal deployment configurations with minimal manual effort.

Final Practical Recommendations for Developers:

- Model Selection: Consider LoRA-based fine-tuning as the primary approach when deploying large models to edge devices, given its robust balance between efficiency, generalization, and computational feasibility.

- Hyperparameter Optimization: Invest substantial effort in carefully selecting hyperparameters, especially learning rate and LoRA rank, to ensure optimal performance and stability.

- Stay Updated: Continually monitor and integrate advances in edge AI hardware and software, maintaining the effectiveness and competitive edge of deployed models.
