Air-Gapped AI Solutions: 7 Platforms for Disconnected Enterprise Deployment (2026)

Deploy AI in air-gapped environments with zero internet dependency. Compare 7 enterprise platforms, learn deployment steps, and evaluate compliance for defense, finance, and healthcare.

The organizations that need AI most are the ones that can't plug into the cloud.

Defense agencies processing classified intel. Banks running fraud detection on transaction data that can't leave their network. Hospitals building diagnostic tools on patient records governed by strict privacy law. These teams sit behind networks with zero external connectivity, and most AI vendors don't build for them.

The enterprise AI market hit $98 billion in 2025, with 87% of large enterprises now running AI workloads. But a growing share of those workloads need to run in air-gapped environments where no data touches the public internet.

This guide covers what air-gapped AI actually means, which platforms support it, and how to get a model running in a disconnected environment.

What "Air-Gapped" Actually Means for AI

An air-gapped system is physically and electronically isolated from external networks. No inbound connections. No outbound connections. No internet, no cloud APIs, no telemetry phoning home.

For AI specifically, that means all model inference, fine-tuning, data processing, and updates happen entirely within your controlled perimeter. Many tools that call themselves "on-premise" or "private" still fail this test. A code assistant that validates its license against a remote server? Not air-gapped. An inference engine that sends anonymous usage metrics? Also disqualified. Even one outbound connection can make a tool unusable in classified or regulated environments.

Here's how common deployment models compare:

The distinction matters. If your security policy requires zero external connections, only the last row qualifies.

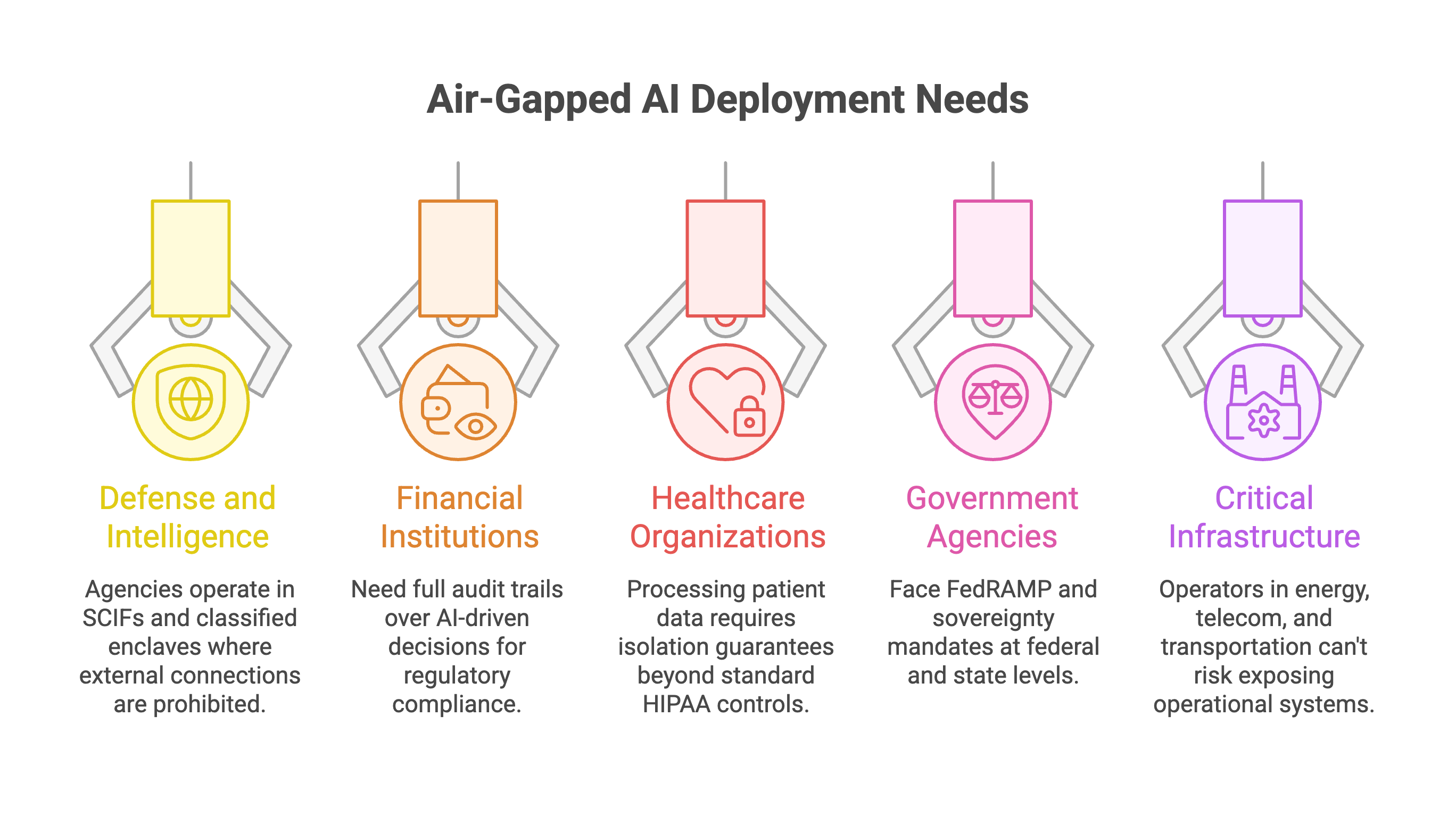

Who Needs Air-Gapped AI Deployment

The short list: anyone handling data that absolutely cannot leave a controlled network.

Defense and intelligence agencies operate in SCIFs and classified enclaves where a single external connection disqualifies a tool from use. Financial institutions need full audit trails over AI-driven decisions for regulatory compliance. Healthcare organizations processing patient data need isolation guarantees that go beyond standard HIPAA controls. Government agencies at federal and state levels face FedRAMP and sovereignty mandates. Critical infrastructure operators in energy, telecom, and transportation can't risk exposing operational systems.

With 78% of organizations now deploying AI in at least one business function, the demand for air-gapped deployment options is catching up fast. The sovereign AI market alone is projected to reach $600 billion by 2030.

7 Air-Gapped AI Solutions for Enterprise Teams

Not every platform handles disconnected deployment the same way. Some ship hardware appliances. Some offer container-based installs. Some give you the tools and expect your team to handle the rest. Here's what's available.

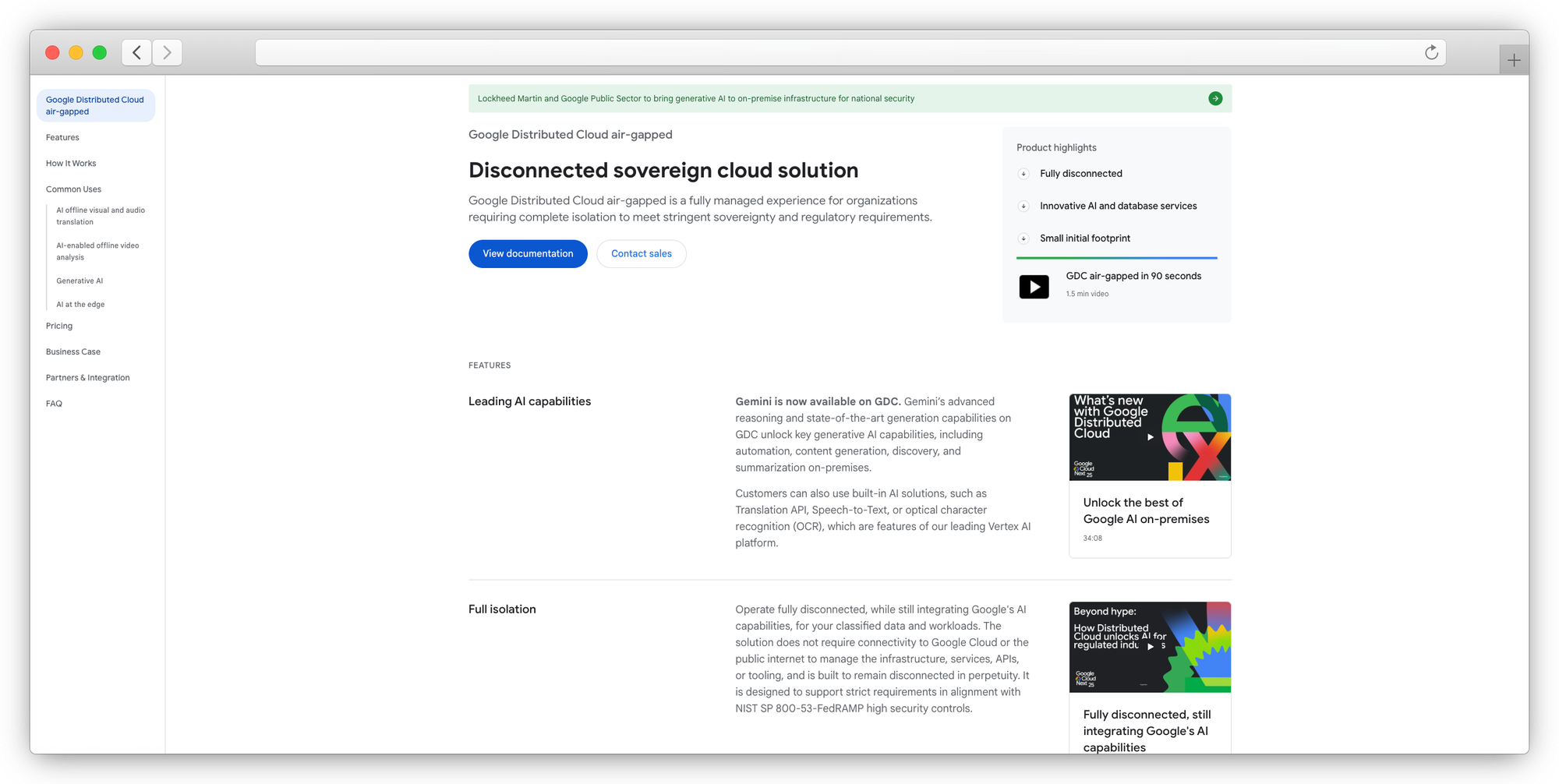

1. Google Distributed Cloud (GDC) Air-Gapped

Google's fully managed solution for organizations that need cloud-grade AI behind classified networks. GDC ships as integrated hardware and software, designed to stay disconnected in perpetuity. Gemini models run on-premises through Vertex AI integration, and air-gapped appliance configurations support tactical edge use cases like medical imaging and object detection. Google or a trusted partner handles operations, with customizable operator citizenship and clearances.

- Deployment: Managed hardware appliance (rack mount or rugged case)

- AI Capabilities: Gemini, Gemma 7B, Vertex AI services, Speech-to-Text, OCR

- Compliance: IL6, FedRAMP High, ISO 27001, SOC 2, NIST, NATO D48

- Best for: Government and defense with budget for managed infrastructure

- Trade-off: Expensive. Requires significant hardware investment and long procurement cycles.

2. Prem AI

A self-hosted AI platform built around data sovereignty. What sets Prem AI apart from pure inference tools is the integrated fine-tuning pipeline. You can upload datasets, train custom models from 30+ base architectures (Mistral, LLaMA, Qwen, Gemma), evaluate results, and deploy to your own infrastructure without data ever leaving your environment. Swiss jurisdiction under the FADP adds a legal layer on top of the technical controls.

- Deployment: On-premise, AWS VPC, Kubernetes via Prem-Operator

- Fine-Tuning: 30+ base models, LoRA, knowledge distillation, autonomous fine-tuning

- Compliance: SOC 2, GDPR, HIPAA, Swiss FADP

- Best for: Teams that need to fine-tune and deploy custom models without cloud dependency

- Trade-off: Focused on LLM fine-tuning workflows. Not a general MLOps platform.

3. H2O.ai

H2O.ai combines predictive and generative AI on a single platform, with its h2oGPTe product specifically built for air-gapped deployment. NIH deployed it inside an air-gapped environment to power a policy and procurement assistant. The platform supports SLM distillation on private data and includes AutoML with built-in explainability. Commonwealth Bank of Australia cut scam losses by 70% using the platform, and Gartner named H2O.ai a Visionary in its 2025 Cloud AI Developer Services Magic Quadrant.

- Deployment: On-premise, cloud VPC, air-gapped via Replicated + Helm charts

- AI Capabilities: Generative AI (h2oGPTe), predictive AI, SLM distillation, AutoML

- Compliance: SOC 2, GDPR

- Best for: Enterprises that need both predictive and generative AI at scale

- Trade-off: Complex initial setup. Steep learning curve for teams new to the platform.

4. Cohere

Cohere builds enterprise NLP models with VPC and on-premise deployment options. Their Command models (including Command A, which the company claims processes 75% faster than GPT-4o) run on as few as 2 GPUs. The Embed and Rerank stack handles semantic search and RAG use cases. Customers include RBC, Dell, Oracle, and McKinsey. ARR grew from $13M in 2022 to $70M by early 2025.

- Deployment: On-premise, AWS/Azure/GCP VPC, hybrid, air-gapped

- AI Capabilities: Command models, Embed, Rerank, RAG pipeline

- Compliance: GDPR, SOC 2, ISO 27001

- Best for: Regulated industries needing strong NLP and data sovereignty

- Trade-off: Less multimodal capability than competitors. Enterprise pricing is opaque.

5. Red Hat OpenShift AI

A Kubernetes-native MLOps platform that extends OpenShift into AI workloads. Air-gapped installation uses the oc-mirror plugin and agent-based installer to deploy without internet access. GPU acceleration supports NVIDIA, AMD, and Intel hardware. The platform integrates with IBM watsonx.ai and partner tools like Anaconda, Intel OpenVINO, and NVIDIA AI Enterprise. Built on the upstream Open Data Hub project with 20+ open-source AI/ML components.

- Deployment: On-premise, hybrid cloud, disconnected/air-gapped

- AI Capabilities: Model serving, training, GPU-as-a-service, partner model ecosystem

- Compliance: Supports strict regulatory environments

- Best for: Infrastructure teams with existing Kubernetes expertise

- Trade-off: Air-gapped dependency management requires careful mirror configuration. Not plug-and-play.

6. Katonic AI

A sovereign AI platform designed for air-gapped operations from day one. Katonic uses a zero-egress architecture where nothing leaves the deployment perimeter. The platform supports open-weight models (Llama, Mistral, Falcon) with local fine-tuning and a full-stack agent architecture (Brain + Body + Guardrails). A SUSE partnership extends their reach into APAC/ANZ markets. Typical deployment timeline: 2 weeks to first app, 30-60 days for full platform rollout.

- Deployment: On-premise, VPC, air-gapped, hybrid

- AI Capabilities: Open-weight models, local fine-tuning, agentic AI framework

- Compliance: ISO 27001, SOC 2 Type II, GDPR, HIPAA

- Best for: Defense contractors and organizations prioritizing complete sovereignty

- Trade-off: Newer platform with less enterprise track record than established players.

7. Open-Source Stack: Ollama + vLLM

The DIY path for teams with strong infrastructure skills and zero licensing budget. Ollama handles model management with one-command model pulls (run on a connected machine, then transferred in) and serves GGUF-quantized models so smaller models can run on limited hardware. vLLM handles production inference with PagedAttention, for which its authors report 2-4x higher throughput than earlier serving systems. Use Harbor or a self-hosted Docker registry to manage container images offline.

Real-world performance varies by use case. In developer communities, Ollama gets praise for easy single-user setups but struggles with concurrent requests. vLLM handles multi-user production loads far better, with benchmarks showing up to 793 tokens per second versus Ollama's 41 TPS at peak concurrency.

- Deployment: Docker/Podman, Kubernetes (k3s/MicroK8s for smaller environments)

- AI Capabilities: Any open-weight model, manual fine-tuning, custom pipelines

- Compliance: N/A (you manage everything)

- Best for: Cost-conscious teams with in-house ML engineering talent

- Trade-off: No vendor support. Manual updates. You own every failure mode.

Quick Comparison

How to Deploy AI in an Air-Gapped Environment

Getting AI running behind an air gap isn't just installing software. Every dependency, every model weight, every container image needs to be packaged and transferred before deployment begins.

1. Audit and package everything offline.

Catalog every dependency your AI stack needs: model weights (often 10-70GB per model), container images, GPU drivers, CUDA libraries, Helm charts, Python packages. Download them on a connected system, verify checksums, and transfer via approved media (USB, internal FTP, or physical drives depending on your security policy).
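The "verify checksums" step can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the function names (`build_manifest`, `verify_manifest`) and the idea of a bundle directory are assumptions made for the example. Build the manifest on the connected side, carry it across with the media, and verify it inside the perimeter before anything is installed.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so multi-gigabyte weights never load fully into RAM."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(bundle_dir: Path) -> dict[str, str]:
    """Map every file's path (relative to the bundle) to its SHA-256 digest."""
    return {
        path.relative_to(bundle_dir).as_posix(): sha256_of(path)
        for path in sorted(bundle_dir.rglob("*"))
        if path.is_file()
    }


def verify_manifest(bundle_dir: Path, manifest: dict[str, str]) -> list[str]:
    """Return the relative paths that are missing or whose digest differs."""
    problems = []
    for rel_path, expected in manifest.items():
        target = bundle_dir / rel_path
        if not target.is_file() or sha256_of(target) != expected:
            problems.append(rel_path)
    return problems
```

An empty list from `verify_manifest` means every file arrived intact. Keep the manifest itself outside the bundle directory (or sign it separately) so it is not hashed as part of its own contents.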

2. Stand up an internal container registry.

Harbor is the go-to for air-gapped container management. It handles image storage, vulnerability scanning, and access control without needing internet. A self-hosted Docker registry works for simpler setups.
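Getting images into that registry is a two-sided job: pull and archive on a connected machine, then load, retag, and push inside the perimeter. The sketch below only generates the Docker commands for each side so they can be reviewed before anyone runs them. The registry hostname, the `mirror` project name, and the image tags are hypothetical placeholders, and the helper names are made up for this example.

```python
def internal_ref(image: str, registry: str) -> str:
    """Rewrite an image reference so it points at the internal registry."""
    first, _, rest = image.partition("/")
    # A leading path component containing "." or ":" (or "localhost") is a registry host.
    has_host = bool(rest) and ("." in first or ":" in first or first == "localhost")
    path = rest if has_host else image
    return f"{registry}/{path}"


def mirror_commands(
    images: list[str], registry: str, archive: str = "images.tar"
) -> tuple[list[str], list[str]]:
    """Return (commands for the connected side, commands for the isolated side)."""
    connected = [f"docker pull {image}" for image in images]
    connected.append(f"docker save -o {archive} " + " ".join(images))

    isolated = [f"docker load -i {archive}"]
    for image in images:
        target = internal_ref(image, registry)
        isolated.append(f"docker tag {image} {target}")
        isolated.append(f"docker push {target}")
    return connected, isolated


# Hypothetical registry address and tags, for illustration only.
connected_side, isolated_side = mirror_commands(
    ["vllm/vllm-openai:example-tag", "docker.io/library/nginx:example-tag"],
    "harbor.internal.example/mirror",
)
```

Printing `connected_side` and `isolated_side` gives two short runbooks. Generating them from one image list keeps the two sides of the transfer from drifting apart.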

3. Configure compute infrastructure.

GPU selection depends on your models. A 7B-parameter model at 16-bit precision needs about 14 GB for its weights and fits on a single 24 GB A10 or L4. Models above roughly 30B parameters generally need multi-GPU setups (A100, H100) unless you quantize them. Plan for storage (models + data + logs), networking between nodes, and internal DNS if running Kubernetes.
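Those sizing rules come from simple arithmetic: parameters times bytes per parameter, plus headroom. Here is a rough estimator. The 1.2x overhead factor for KV cache and activations is an assumption chosen for illustration, not a measured value; real headroom depends on context length, batch size, and the serving engine.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str = "fp16") -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits_on_gpu(
    params_billion: float,
    vram_gb: float,
    precision: str = "fp16",
    overhead: float = 1.2,  # assumed headroom for KV cache and activations
) -> bool:
    """Rough check: do the weights plus assumed overhead fit in one GPU's VRAM?"""
    return weight_memory_gb(params_billion, precision) * overhead <= vram_gb


print(weight_memory_gb(7))          # 7B at fp16: 14.0 GB of weights
print(fits_on_gpu(7, 24))           # fits a 24 GB card under these assumptions
print(fits_on_gpu(70, 80))          # 70B at fp16 does not fit one 80 GB card
print(fits_on_gpu(70, 80, "int4"))  # 4-bit quantization changes the answer
```

Treat the result as a first filter for procurement conversations, then confirm with a real load test on the target hardware.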

4. Deploy via Kubernetes or Docker.

For production on smaller clusters, a lightweight Kubernetes distribution such as k3s or MicroK8s works well. Larger environments typically run OpenShift or upstream Kubernetes. For prototyping, Docker Compose gets a model serving in hours instead of days. Tools like Prem-Operator automate Kubernetes-based AI model deployment if you're using that stack.
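Once a model is serving, a quick smoke test from inside the network confirms the deployment works end to end. The sketch below assumes the serving layer exposes an OpenAI-compatible `/v1/chat/completions` endpoint, as vLLM's server does. The hostname, port, and model name are hypothetical, and only the standard library is used so nothing extra has to be carried across the air gap.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible chat completions endpoint."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request to the internal endpoint and return the reply text."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Example call (hypothetical internal address and model name):
# print(ask("http://llm.internal.example:8000", "local-model", "Reply with OK."))
```

Keeping the request builder separate from the network call makes it easy to check the payload in a test environment before the real endpoint exists.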

5. Build offline update workflows.

Models don't stay current on their own. Establish a process for periodically bringing in updated weights, security patches, and new model versions through your transfer pipeline. Version-lock models so you can trace exactly which version produced any given output.
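One way to make version-locking concrete is a lock file that maps each artifact to its digest, then a diff against every incoming update bundle before it is applied. The sketch below is illustrative: the function name, the artifact paths, and the shortened digests are all made up for the example.

```python
def diff_manifests(
    deployed: dict[str, str], incoming: dict[str, str]
) -> dict[str, list[str]]:
    """Compare two {artifact: digest} maps and report what an update would change."""
    shared = set(deployed) & set(incoming)
    return {
        "added": sorted(set(incoming) - set(deployed)),
        "removed": sorted(set(deployed) - set(incoming)),
        "changed": sorted(name for name in shared if deployed[name] != incoming[name]),
    }


# Hypothetical lock file contents (digests shortened for readability).
deployed = {"models/base-7b.gguf": "aaa111", "images/server.tar": "bbb222"}
incoming = {
    "models/base-7b.gguf": "ccc333",   # new weights
    "images/server.tar": "bbb222",     # unchanged
    "models/reranker.gguf": "ddd444",  # new artifact
}

print(diff_manifests(deployed, incoming))
```

Reviewing this diff as part of change approval gives auditors a record of exactly which artifacts changed in each update cycle.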

6. Set up local monitoring and audit logging.

Prometheus and Grafana handle metrics. OpenTelemetry captures traces. Every prompt, response, and model version should be logged locally for compliance. This isn't optional in regulated environments; auditors will ask for it.
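For the audit side, an append-only JSON Lines file is about the simplest thing that works. This sketch shows the fields the paragraph above calls for (prompt, response, model version) plus a UTC timestamp. The function names and the record layout are assumptions for illustration; a production setup would add access controls, rotation, and retention rules on top.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def append_audit_record(
    log_path: Path, *, model: str, version: str, prompt: str, response: str
) -> dict:
    """Append one inference record to a local JSON Lines audit log."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "model_version": version,
        "prompt": prompt,
        "response": response,
    }
    with log_path.open("a", encoding="utf-8") as handle:
        handle.write(json.dumps(record, ensure_ascii=False) + "\n")
    return record


def read_audit_log(log_path: Path) -> list[dict]:
    """Load every record back, one JSON object per line."""
    with log_path.open(encoding="utf-8") as handle:
        return [json.loads(line) for line in handle if line.strip()]
```

One record per line means the log can be tailed, grepped, and shipped to an internal log store without a custom parser.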

What to Look for When Evaluating Air-Gapped AI Platforms

Before signing a contract, verify these six things:

- True zero connectivity. Does the platform work with absolutely no internet? Check for hidden telemetry, license validation calls, and update checks. Ask vendors directly: "Does any component make outbound network requests?"

- Offline model updates. Can you update models and software without connecting to the internet? What's the process? How large are the update packages?

- Compliance certifications. Match certifications to your industry. Defense needs FedRAMP/IL levels. Healthcare needs HIPAA. Finance needs SOC 2. European operations need GDPR compliance. Get the actual audit reports, not just marketing claims.

- Fine-tuning capability. If you need custom models trained on your data, verify the platform supports fine-tuning in the air-gapped environment itself. Sending data out for training defeats the purpose. Platforms like Prem AI handle the full lifecycle, from dataset preparation through evaluation, entirely on-premise.

- Hardware requirements. Get specific numbers. How many GPUs? What VRAM? How much storage? What's the minimum viable configuration versus the recommended production setup? Check whether the platform supports running on modest hardware or demands enterprise-grade clusters.

- Vendor support model. What happens when something breaks at 2 AM? Some vendors offer on-site support. Others give you documentation and a ticket queue. For air-gapped deployments, remote debugging isn't always possible.
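The first item on that list can be partly tested rather than taken on trust. The sketch below tries to open outbound TCP connections from inside the environment and reports any that succeed. The target list is an assumption to replace with hosts relevant to your vendor, and the `connect` parameter exists only so the function can be exercised without a real network.

```python
import socket


def reachable_targets(targets, timeout=3.0, connect=socket.create_connection):
    """Return the (host, port) pairs that accepted an outbound TCP connection.

    In a correctly isolated environment the result should be an empty list.
    """
    reachable = []
    for host, port in targets:
        try:
            conn = connect((host, port), timeout=timeout)
        except OSError:
            # Blocked, unresolvable, or timed out: the expected outcome here.
            continue
        conn.close()
        reachable.append((host, port))
    return reachable


# Example targets (assumptions; substitute endpoints your vendor might contact):
# print(reachable_targets([("example.com", 443), ("1.1.1.1", 53)]))
```

An empty result shows the network blocks egress. It does not show that the product never tries to connect, so pair it with firewall or DNS logs captured while the platform runs in a test enclave.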

FAQ

Can you run LLMs in a completely air-gapped environment?

Yes. Open-weight models like Llama, Mistral, and Qwen run entirely locally once the weights are transferred in. You need sufficient GPU resources, but no internet connection is required for inference or fine-tuning after initial setup.

What's the difference between air-gapped and on-premise deployment?

On-premise means the hardware is in your data center, but it may still have internet access for updates, licensing, or management. Air-gapped means the system has zero external network connections. Many "on-premise" solutions still require occasional connectivity, which disqualifies them from true air-gapped environments.

How do you update AI models in an air-gapped environment?

Through a controlled transfer process. Download updates on a connected system, verify integrity with checksums, transfer via approved media (USB drives, internal network bridges, or physical transport), and apply them inside the air-gapped perimeter. Version control is critical for audit compliance.

What hardware do you need for air-gapped AI?

It depends on the model size. A 7B parameter model runs on a single GPU with 24GB VRAM. Models in the 30-70B range need multi-GPU configurations. For production workloads serving multiple users, plan for dedicated inference GPUs, at least 1TB of fast storage, and internal networking between nodes. Smaller models can run on surprisingly modest hardware if you apply quantization techniques.

Conclusion

Air-gapped AI deployment adds complexity. There's no way around that. But for organizations handling classified, regulated, or sensitive data, it's the only path that satisfies both the security team and the AI team.

The good news: the tooling has caught up. You can choose a fully managed appliance from Google, a fine-tuning platform like Prem AI that gives you model customization with full data sovereignty, or a pure open-source stack if your team has the expertise to maintain it.

Start by mapping your compliance requirements to the comparison table above. That'll narrow the field fast. Then run a proof-of-concept in a test environment before committing to production deployment.